- java.lang.Object
  - reactor.core.publisher.Flux<T>

- Type Parameters:

T - the element type of this Reactive Streams Publisher

- All Implemented Interfaces:

- Publisher<T>

- Direct Known Subclasses:

- ConnectableFlux, FluxOperator, FluxProcessor, GroupedFlux

public abstract class Flux<T> extends Object implements Publisher<T>

A Reactive Streams Publisher with rx operators that emits 0 to N elements, and then completes (successfully or with an error).

It is intended to be used in implementations and return types. Input parameters should keep using raw Publisher as much as possible.

If it is known that the underlying Publisher will emit 0 or 1 element, Mono should be used instead.

Note that using state in the java.util.function / lambdas used within Flux operators should be avoided, as these may be shared between several Subscribers.

subscribe(CoreSubscriber) is an internal extension to subscribe(Subscriber) used internally for Context passing. A user-provided Subscriber may be passed to this "subscribe" extension but will lose the available per-subscribe Hooks.onLastOperator.

- Author:

- Sebastien Deleuze, Stephane Maldini, David Karnok, Simon Baslé

- See Also:

Mono
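The caution above about state in lambdas can be illustrated with a small sketch. It assumes reactor-core is on the classpath; the class name `FluxStateSketch` and its two helper methods are hypothetical names chosen for illustration. The first method captures a counter once at assembly time, so every Subscriber mutates the same instance. The second wraps the pipeline in `Flux.defer`, so each subscription gets its own counter.

```java
import java.util.concurrent.atomic.AtomicInteger;

import reactor.core.publisher.Flux;

public class FluxStateSketch {

    // Anti-pattern: the counter is created once, when the Flux is assembled,
    // so the lambda passed to map() shares it between all Subscribers.
    static Flux<String> sharedCounter() {
        AtomicInteger counter = new AtomicInteger();
        return Flux.just("a", "b", "c")
                   .map(s -> counter.incrementAndGet() + ":" + s);
    }

    // Safer: defer() calls the Supplier on every subscribe,
    // so each Subscriber works with a fresh counter.
    static Flux<String> perSubscriberCounter() {
        return Flux.defer(() -> {
            AtomicInteger counter = new AtomicInteger();
            return Flux.just("a", "b", "c")
                       .map(s -> counter.incrementAndGet() + ":" + s);
        });
    }

    public static void main(String[] args) {
        Flux<String> shared = sharedCounter();
        System.out.println(shared.collectList().block()); // [1:a, 2:b, 3:c]
        System.out.println(shared.collectList().block()); // [4:a, 5:b, 6:c]

        Flux<String> safe = perSubscriberCounter();
        System.out.println(safe.collectList().block());   // [1:a, 2:b, 3:c]
        System.out.println(safe.collectList().block());   // [1:a, 2:b, 3:c]
    }
}
```

Subscribing twice to the same `shared` instance shows the leak: the second run continues counting where the first stopped. `collectList().block()` is used here only to make the result easy to print; blocking is generally something to avoid inside reactive code.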

Constructor Summary

| Constructor and Description |
| --- |
| `Flux()` |

Method Summary

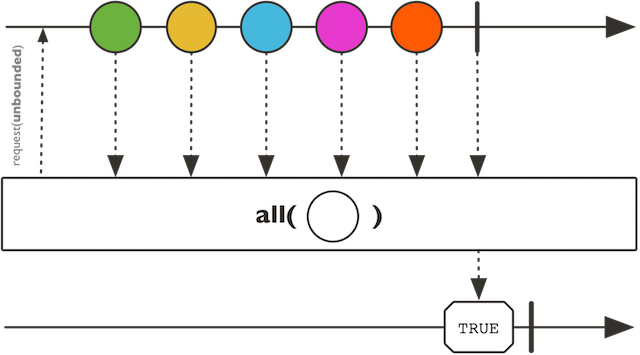

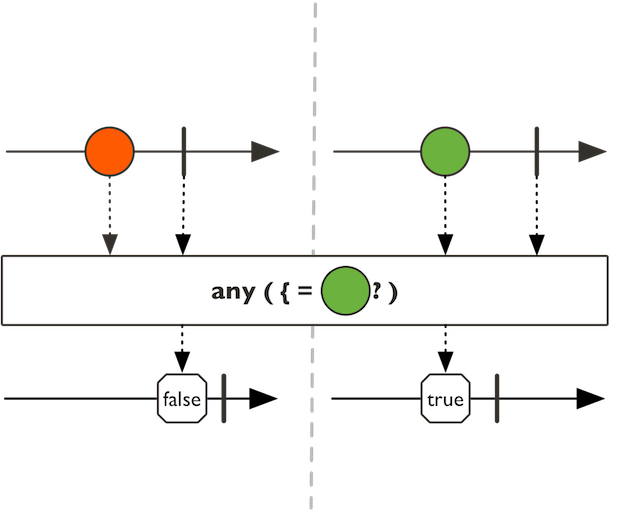

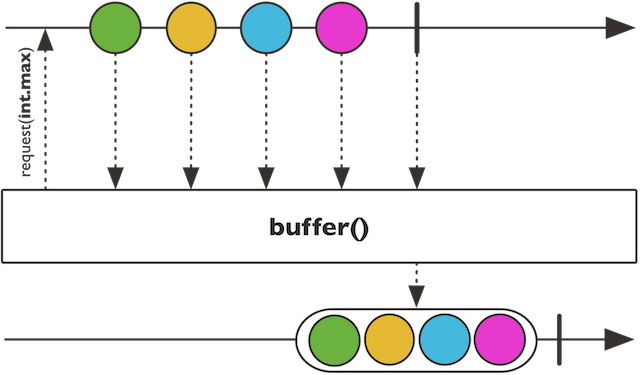

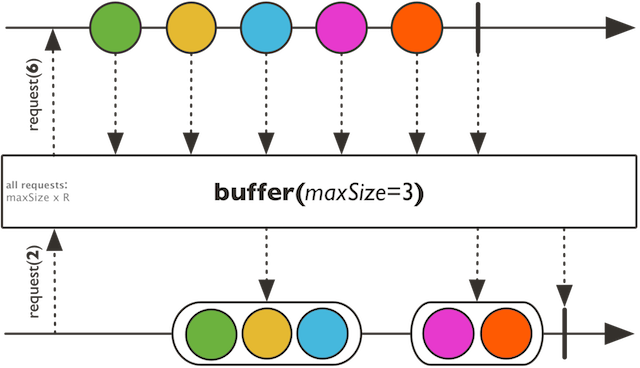

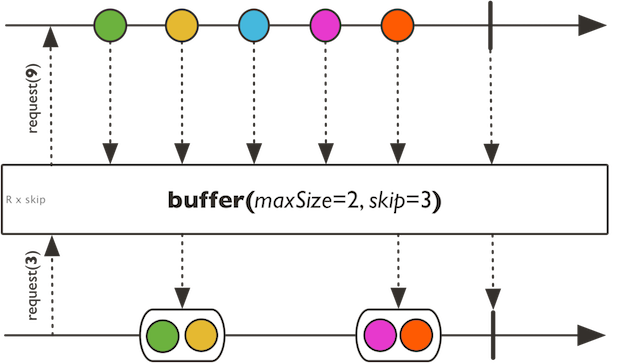

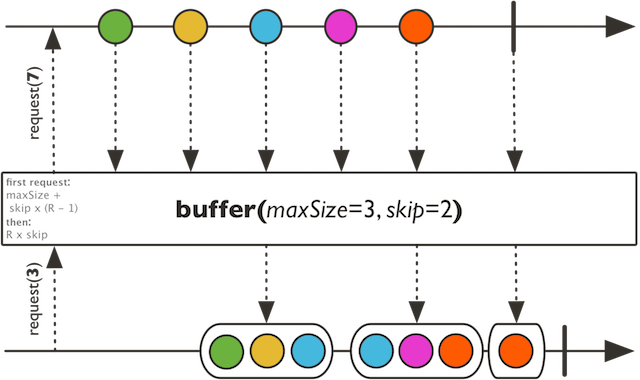

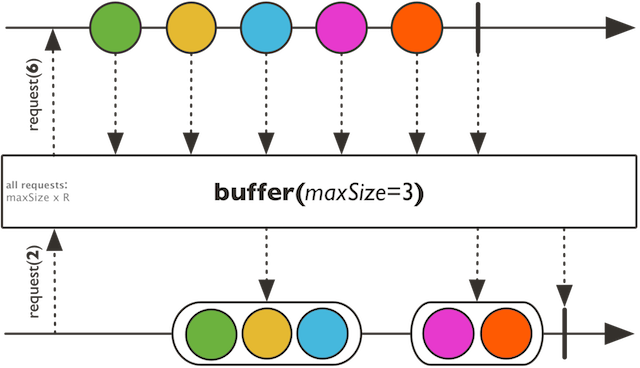

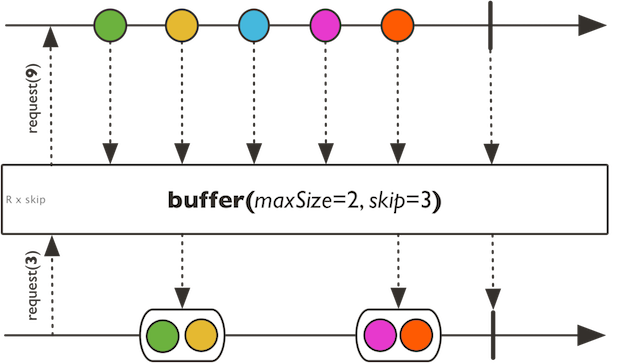

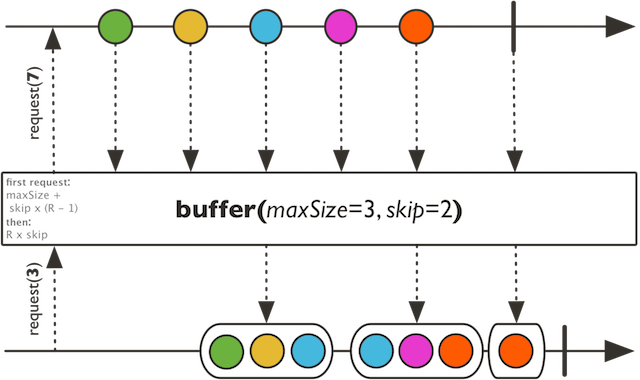

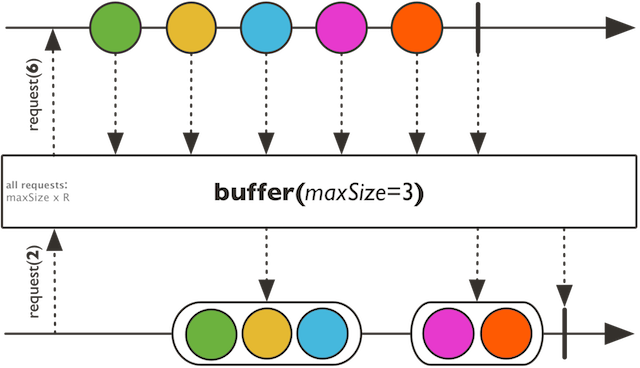

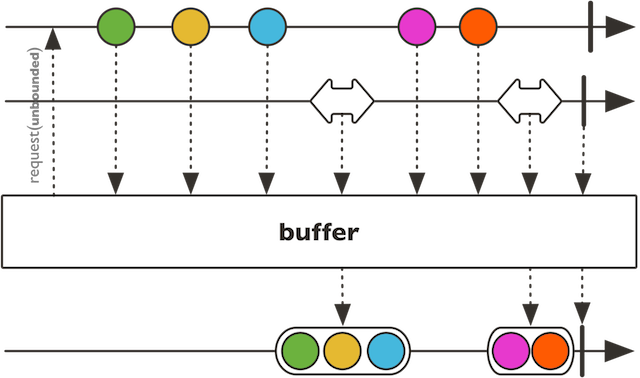

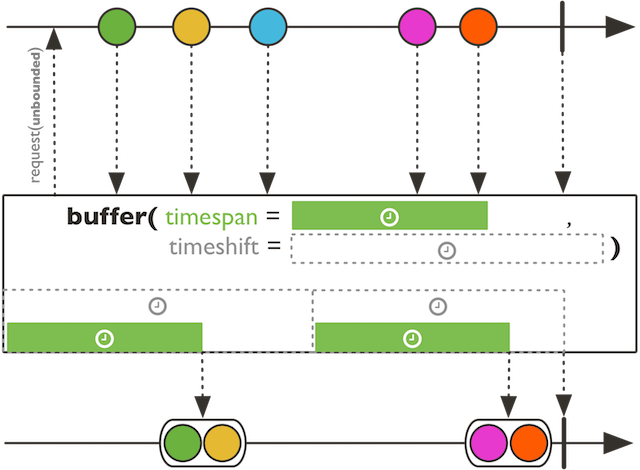

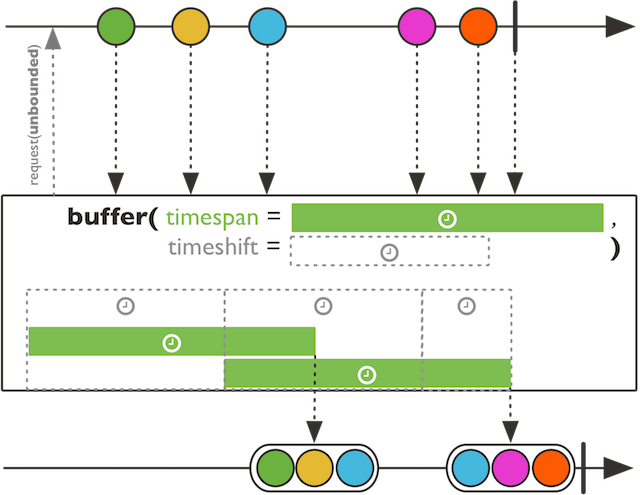

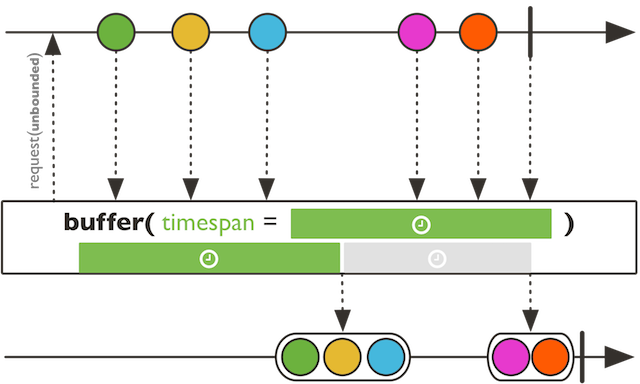

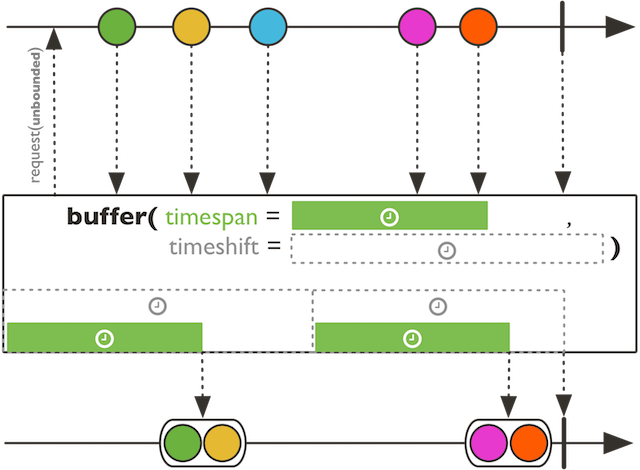

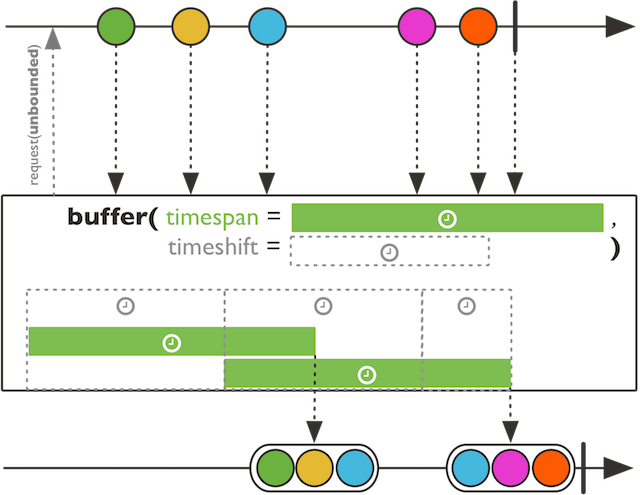

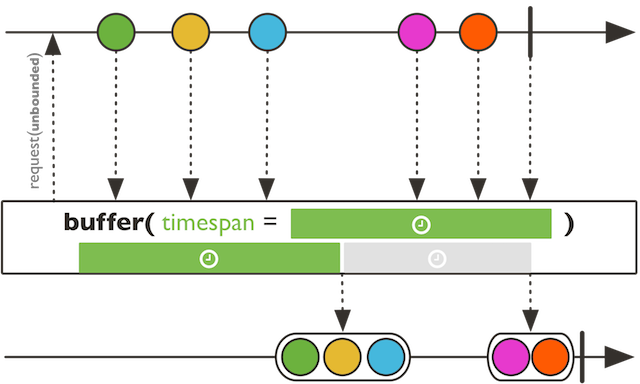

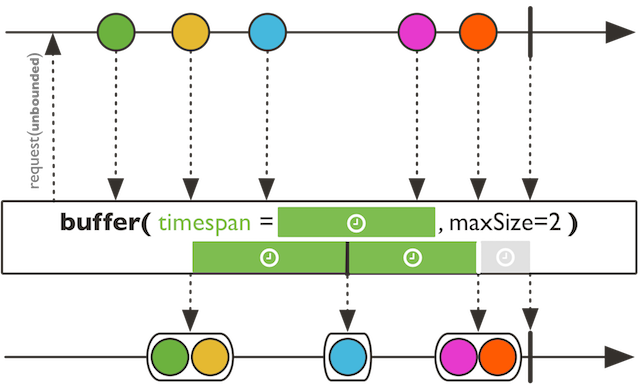

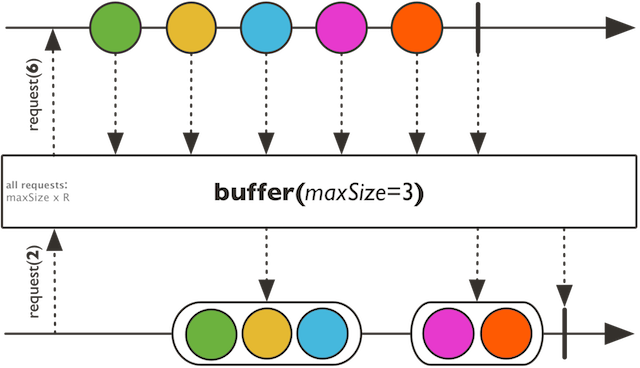

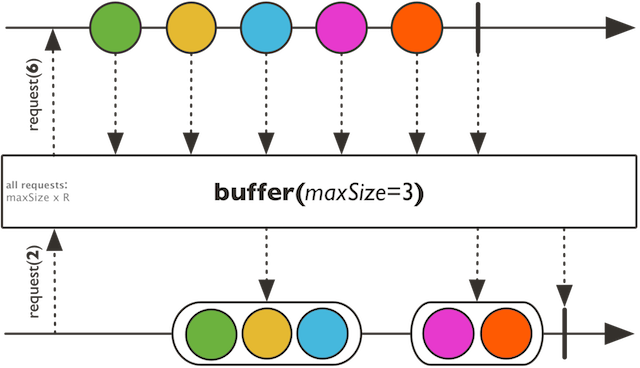

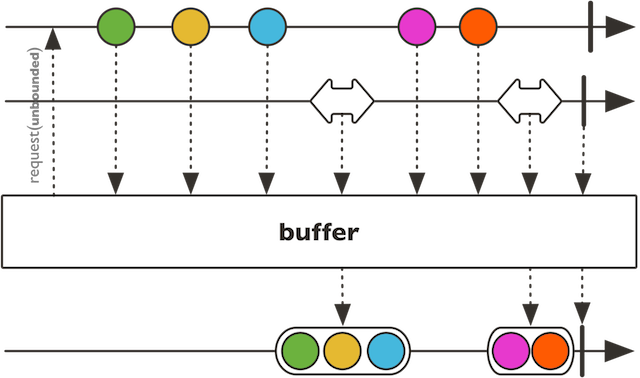

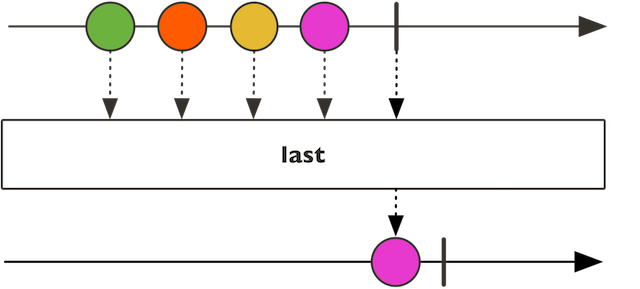

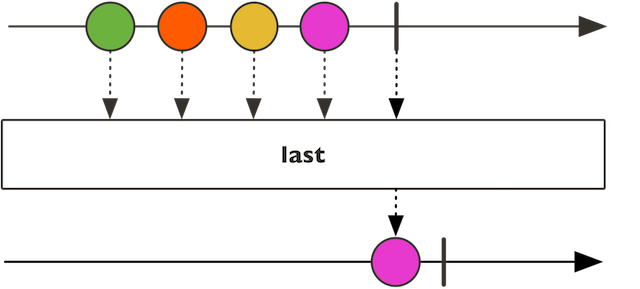

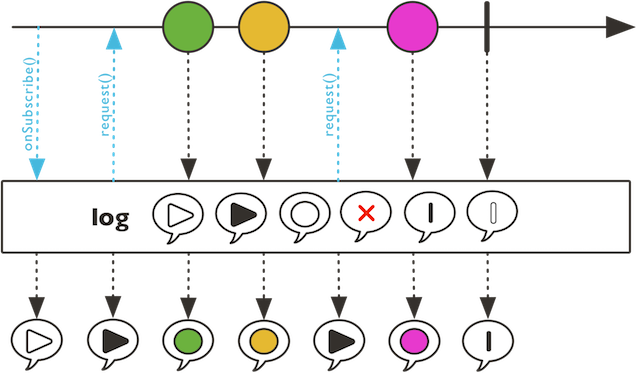

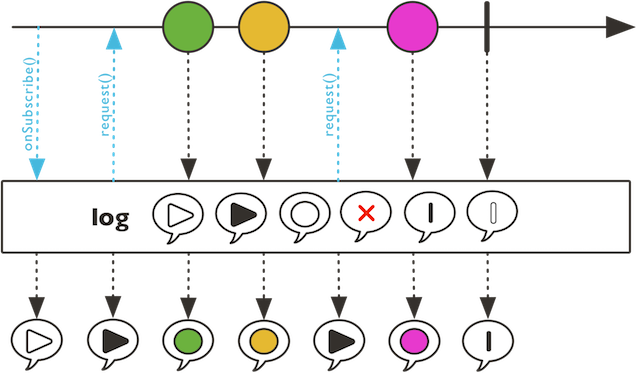

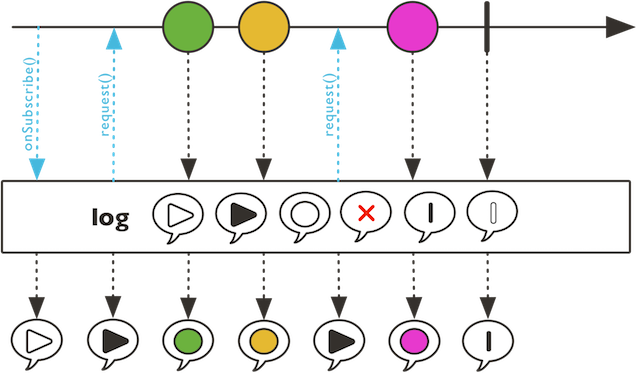

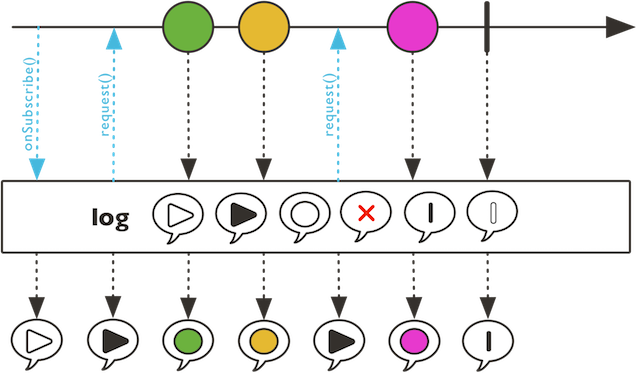

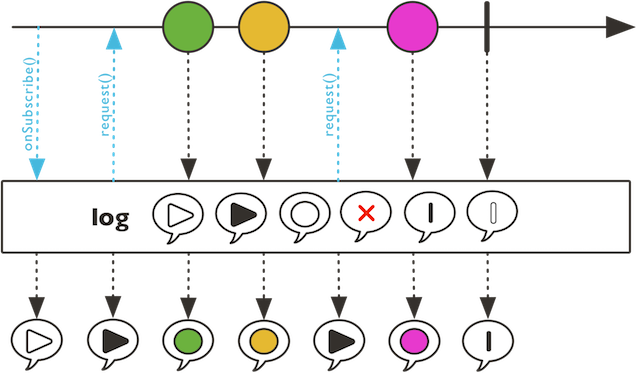

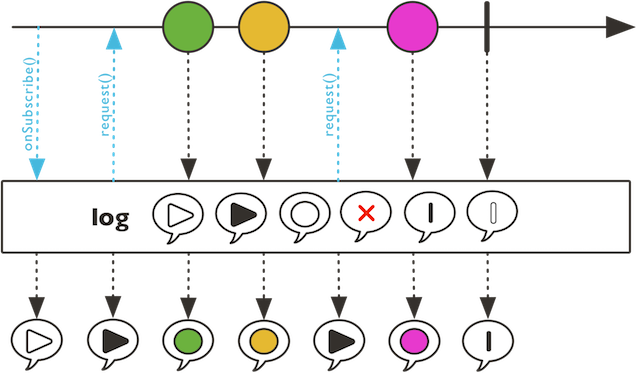

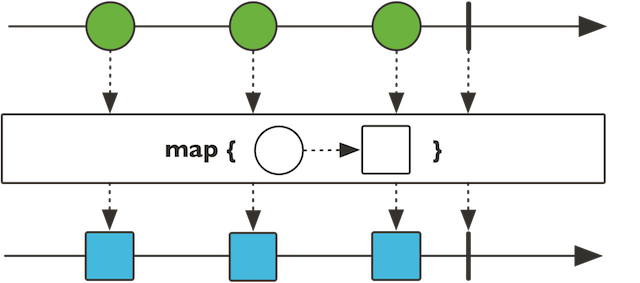

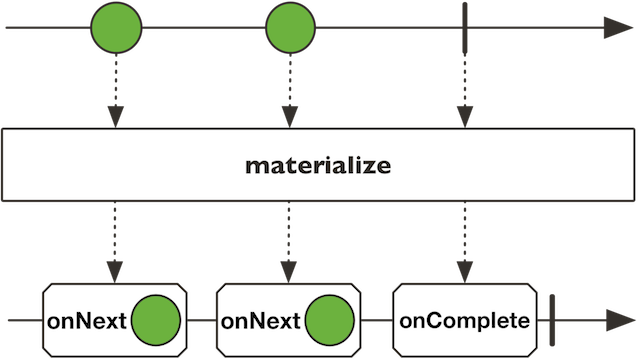

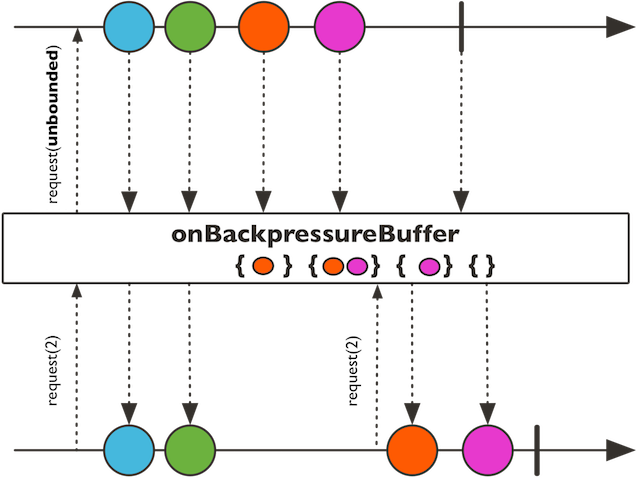

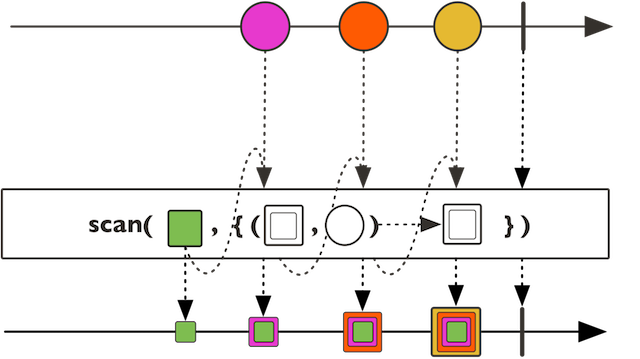

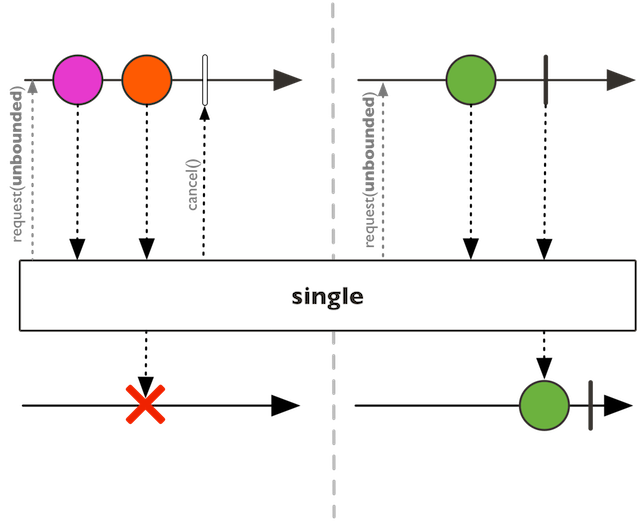

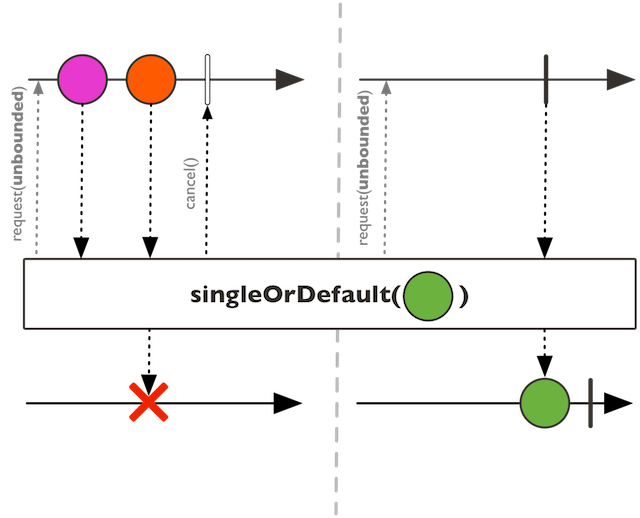

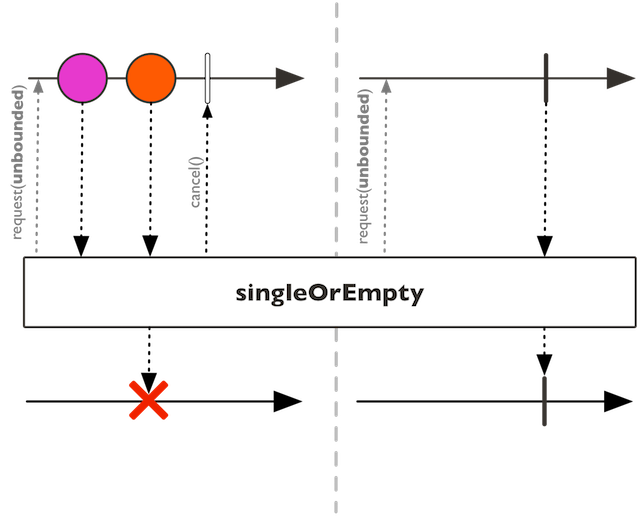

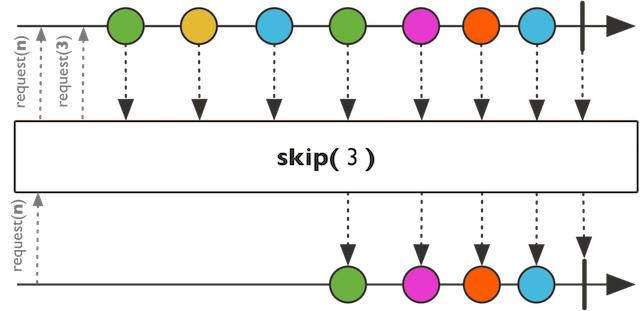

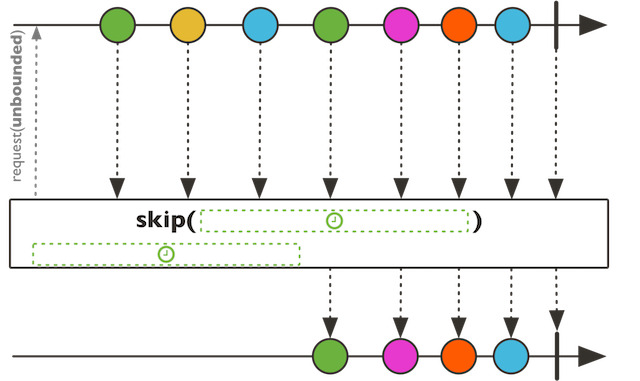

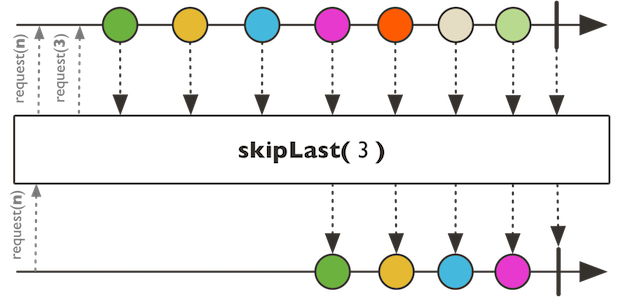

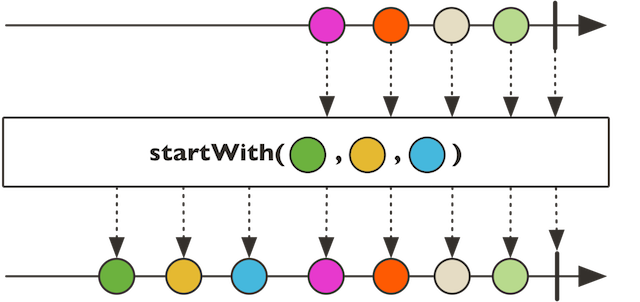

All Methods Static Methods Instance Methods Abstract Methods Concrete Methods Modifier and Type Method and Description Mono<Boolean>all(Predicate<? super T> predicate)Emit a single boolean true if all values of this sequence match thePredicate.Mono<Boolean>any(Predicate<? super T> predicate)Emit a single boolean true if any of the values of thisFluxsequence match the predicate.<P> Pas(Function<? super Flux<T>,P> transformer)Transform thisFluxinto a target type.TblockFirst()Subscribe to thisFluxand block indefinitely until the upstream signals its first value or completes.TblockFirst(Duration timeout)Subscribe to thisFluxand block until the upstream signals its first value, completes or a timeout expires.TblockLast()Subscribe to thisFluxand block indefinitely until the upstream signals its last value or completes.TblockLast(Duration timeout)Subscribe to thisFluxand block until the upstream signals its last value, completes or a timeout expires.Flux<List<T>>buffer()Flux<List<T>>buffer(Duration timespan)Flux<List<T>>buffer(Duration timespan, Duration timeshift)Collect incoming values into multipleListbuffers created at a giventimeshiftperiod.Flux<List<T>>buffer(Duration timespan, Duration timeshift, Scheduler timer)Flux<List<T>>buffer(Duration timespan, Scheduler timer)Flux<List<T>>buffer(int maxSize)Flux<List<T>>buffer(int maxSize, int skip)<C extends Collection<? super T>>

Flux<C>buffer(int maxSize, int skip, Supplier<C> bufferSupplier)Collect incoming values into multiple user-definedCollectionbuffers that will be emitted by the returnedFluxeach time the given max size is reached or once this Flux completes.<C extends Collection<? super T>>

Flux<C>buffer(int maxSize, Supplier<C> bufferSupplier)Collect incoming values into multiple user-definedCollectionbuffers that will be emitted by the returnedFluxeach time the given max size is reached or once this Flux completes.Flux<List<T>>buffer(Publisher<?> other)<C extends Collection<? super T>>

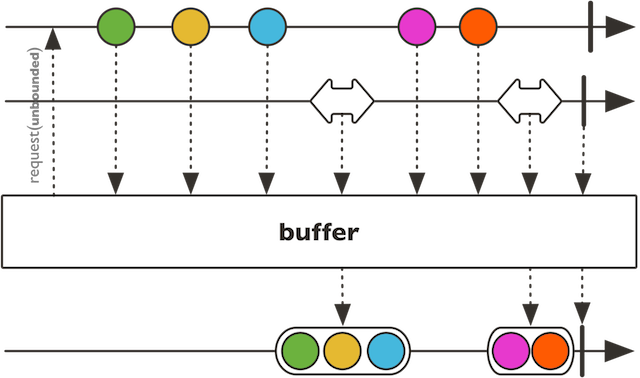

Flux<C>buffer(Publisher<?> other, Supplier<C> bufferSupplier)Collect incoming values into multiple user-definedCollectionbuffers, as delimited by the signals of a companionPublisherthis operator will subscribe to.Flux<List<T>>bufferTimeout(int maxSize, Duration timespan)Flux<List<T>>bufferTimeout(int maxSize, Duration timespan, Scheduler timer)<C extends Collection<? super T>>

Flux<C>bufferTimeout(int maxSize, Duration timespan, Scheduler timer, Supplier<C> bufferSupplier)Collect incoming values into multiple user-definedCollectionbuffers that will be emitted by the returnedFluxeach time the buffer reaches a maximum size OR the timespanDurationelapses, as measured on the providedScheduler.<C extends Collection<? super T>>

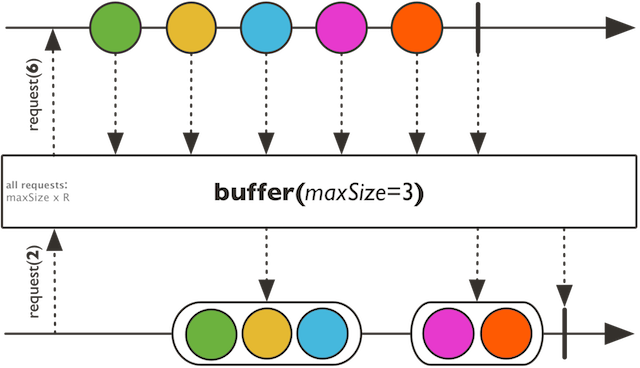

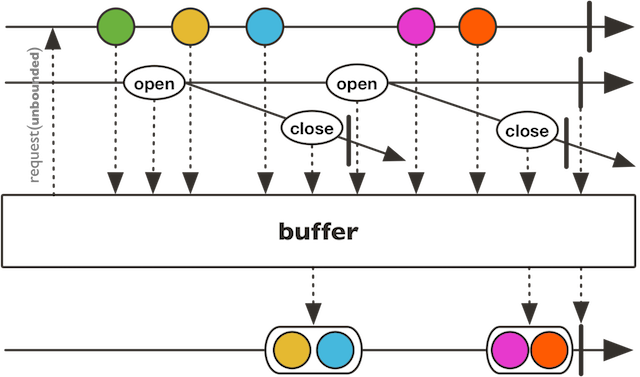

Flux<C>bufferTimeout(int maxSize, Duration timespan, Supplier<C> bufferSupplier)Collect incoming values into multiple user-definedCollectionbuffers that will be emitted by the returnedFluxeach time the buffer reaches a maximum size OR the timespanDurationelapses.Flux<List<T>>bufferUntil(Predicate<? super T> predicate)Flux<List<T>>bufferUntil(Predicate<? super T> predicate, boolean cutBefore)<U,V> Flux<List<T>>bufferWhen(Publisher<U> bucketOpening, Function<? super U,? extends Publisher<V>> closeSelector)<U,V,C extends Collection<? super T>>

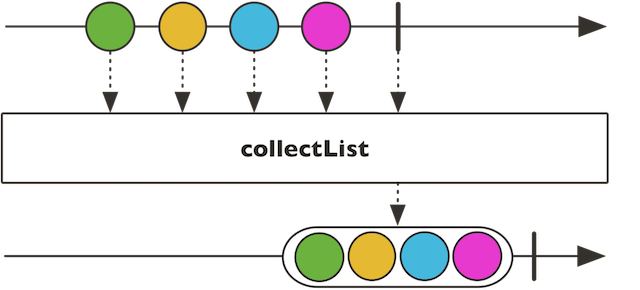

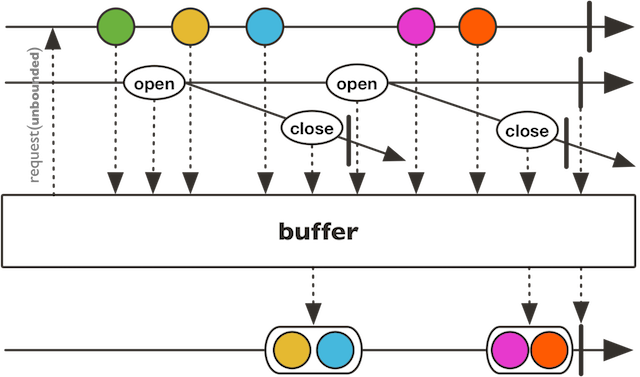

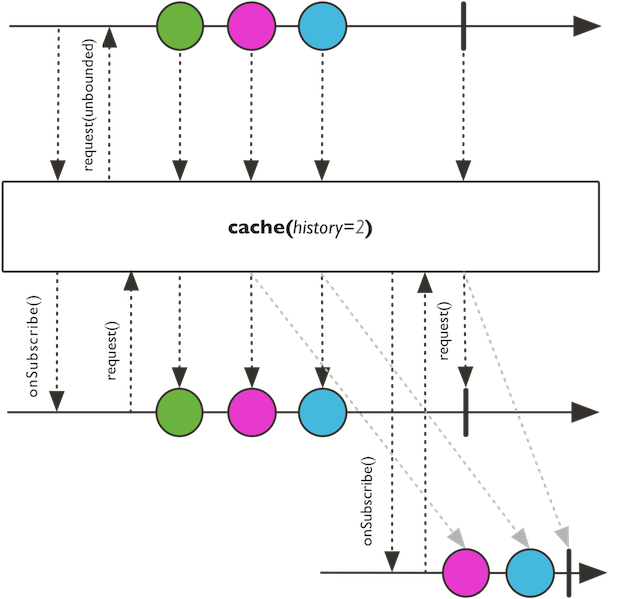

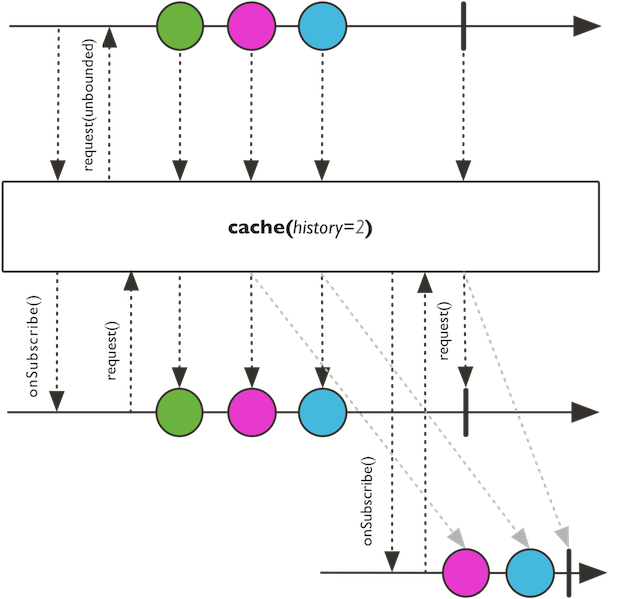

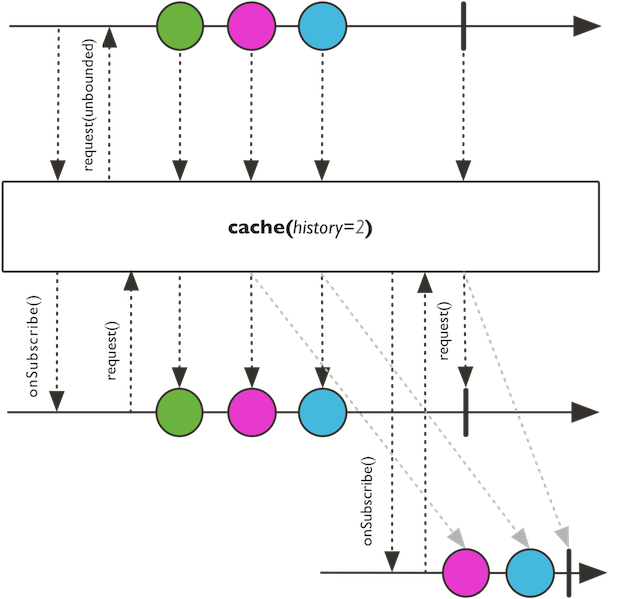

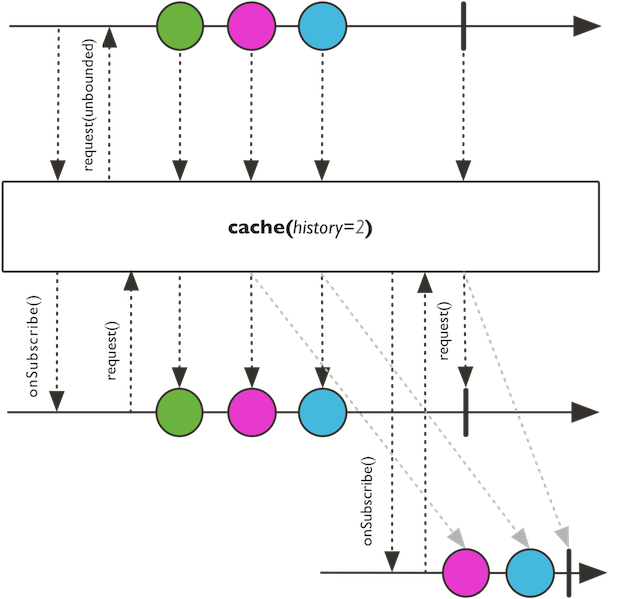

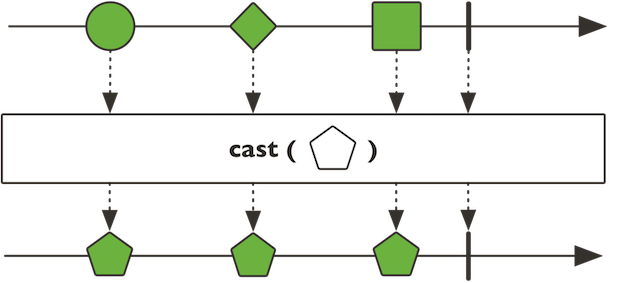

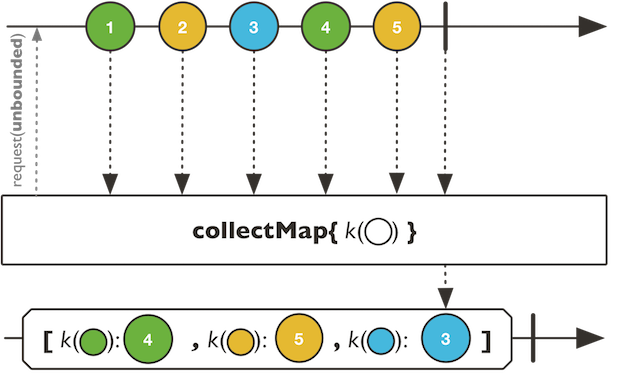

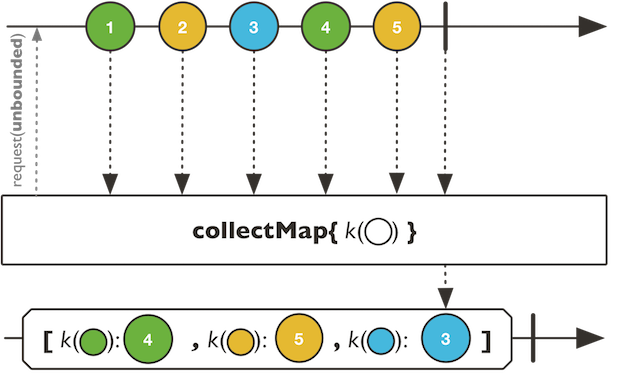

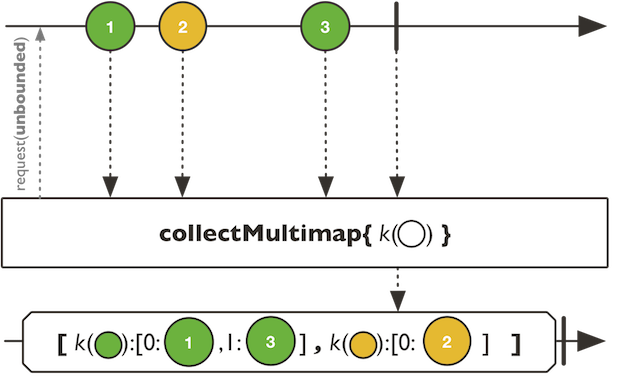

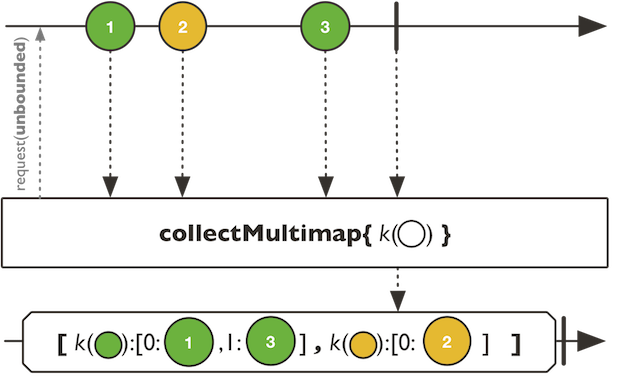

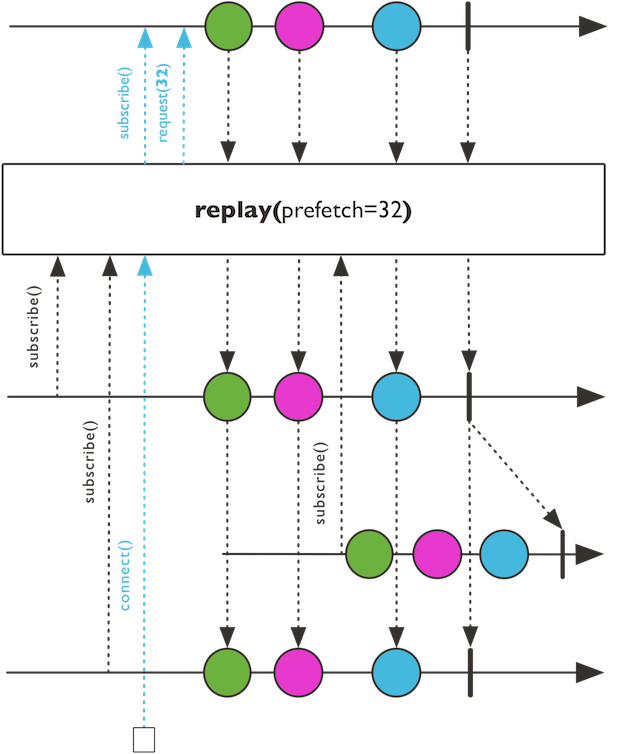

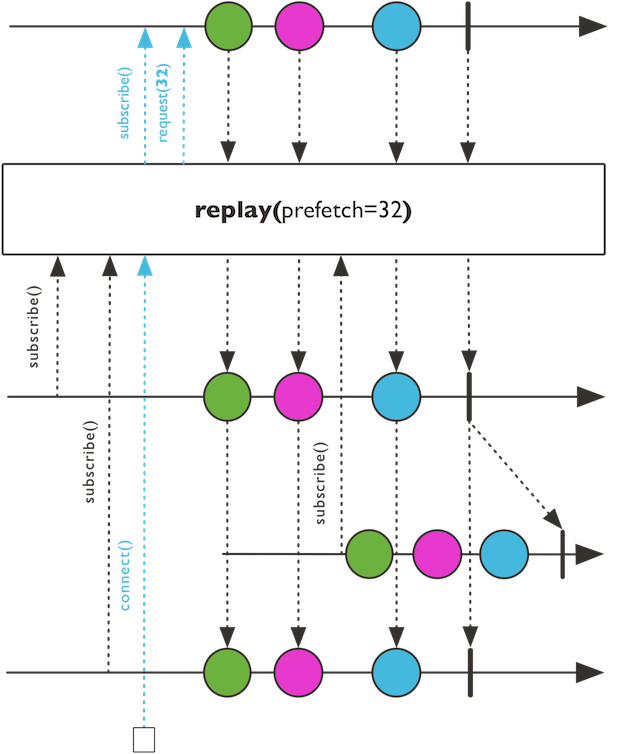

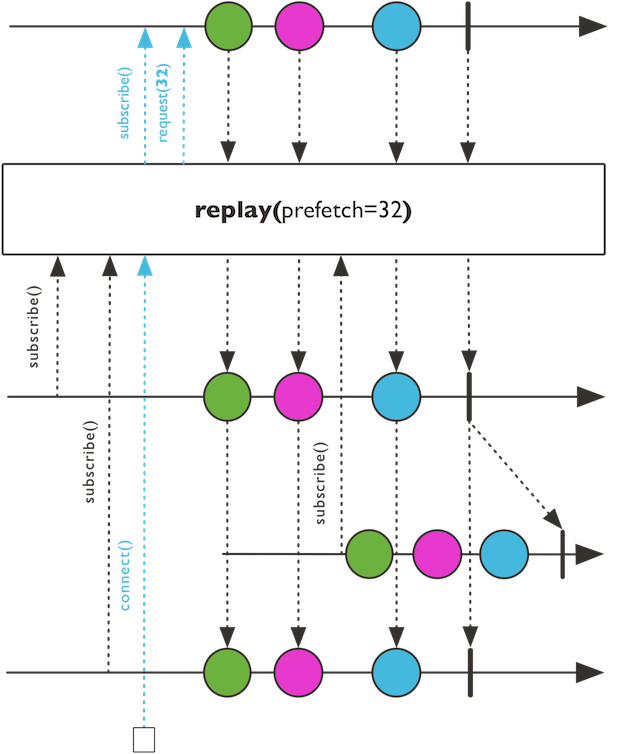

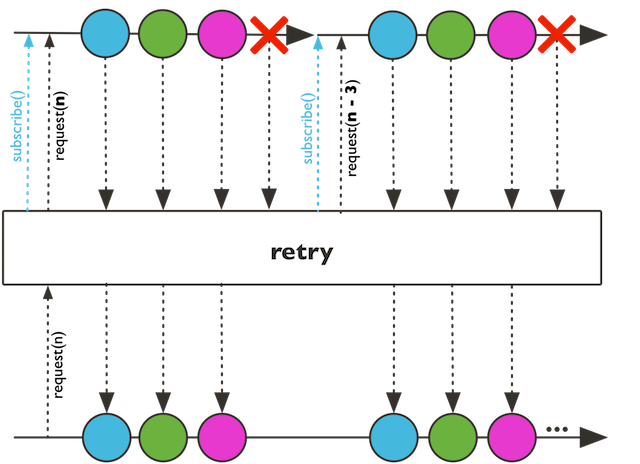

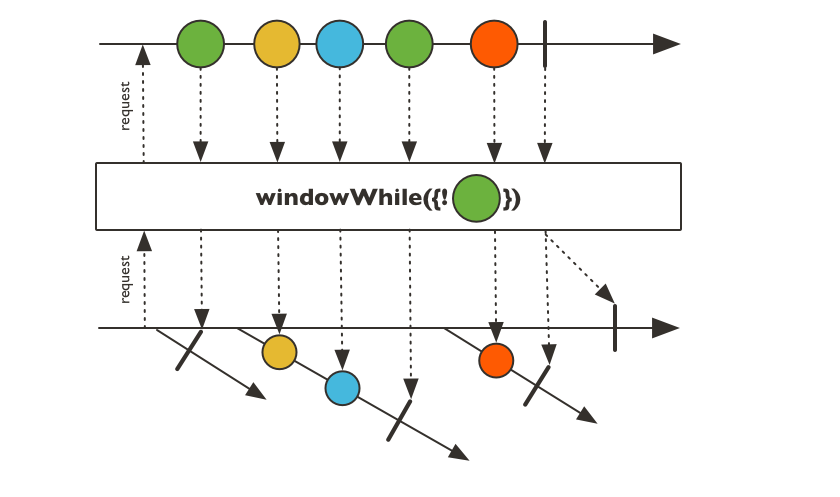

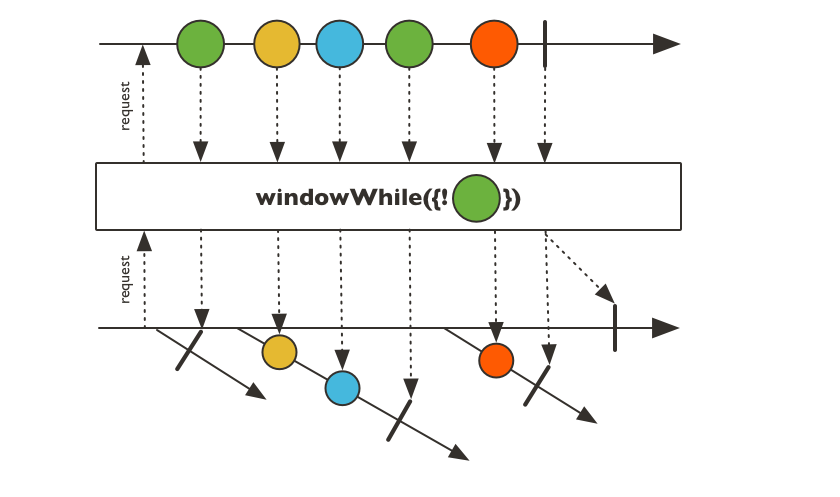

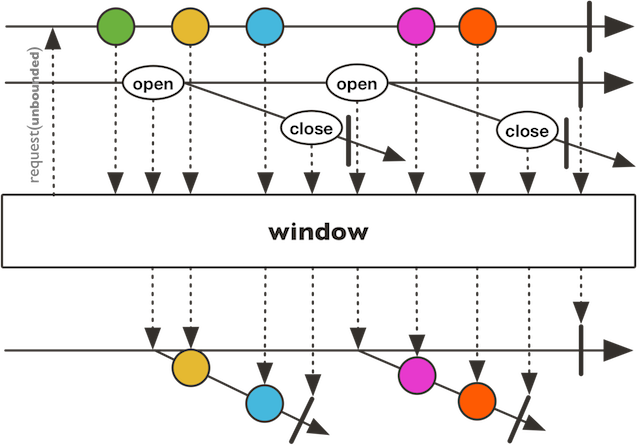

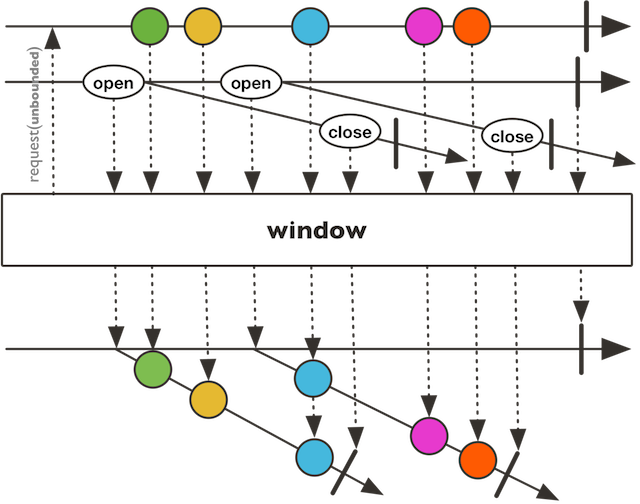

Flux<C>bufferWhen(Publisher<U> bucketOpening, Function<? super U,? extends Publisher<V>> closeSelector, Supplier<C> bufferSupplier)Collect incoming values into multiple user-definedCollectionbuffers started each time an opening companionPublisheremits.Flux<List<T>>bufferWhile(Predicate<? super T> predicate)Flux<T>cache()Turn thisFluxinto a hot source and cache last emitted signals for furtherSubscriber.Flux<T>cache(Duration ttl)Turn thisFluxinto a hot source and cache last emitted signals for furtherSubscriber.Flux<T>cache(int history)Turn thisFluxinto a hot source and cache last emitted signals for furtherSubscriber.Flux<T>cache(int history, Duration ttl)Turn thisFluxinto a hot source and cache last emitted signals for furtherSubscriber.Flux<T>cancelOn(Scheduler scheduler)<E> Flux<E>cast(Class<E> clazz)Cast the currentFluxproduced type into a target produced type.Flux<T>checkpoint()Activate assembly tracing for this particularFlux, in case of an error upstream of the checkpoint.Flux<T>checkpoint(String description)Activate assembly marker for this particularFluxby giving it a description that will be reflected in the assembly traceback in case of an error upstream of the checkpoint.Flux<T>checkpoint(String description, boolean forceStackTrace)Activate assembly tracing or the lighter assembly marking depending on theforceStackTraceoption.<R,A> Mono<R>collect(Collector<? super T,A,? extends R> collector)<E> Mono<E>collect(Supplier<E> containerSupplier, BiConsumer<E,? super T> collector)Collect all elements emitted by thisFluxinto a user-defined container, by applying a collectorBiConsumertaking the container and each element.Mono<List<T>>collectList()<K> Mono<Map<K,T>>collectMap(Function<? super T,? extends K> keyExtractor)<K,V> Mono<Map<K,V>>collectMap(Function<? super T,? extends K> keyExtractor, Function<? super T,? extends V> valueExtractor)<K,V> Mono<Map<K,V>>collectMap(Function<? super T,? extends K> keyExtractor, Function<? super T,? 
extends V> valueExtractor, Supplier<Map<K,V>> mapSupplier)<K> Mono<Map<K,Collection<T>>>collectMultimap(Function<? super T,? extends K> keyExtractor)<K,V> Mono<Map<K,Collection<V>>>collectMultimap(Function<? super T,? extends K> keyExtractor, Function<? super T,? extends V> valueExtractor)<K,V> Mono<Map<K,Collection<V>>>collectMultimap(Function<? super T,? extends K> keyExtractor, Function<? super T,? extends V> valueExtractor, Supplier<Map<K,Collection<V>>> mapSupplier)Mono<List<T>>collectSortedList()Mono<List<T>>collectSortedList(Comparator<? super T> comparator)Collect all elements emitted by thisFluxuntil this sequence completes, and then sort them using aComparatorinto aListthat is emitted by the resultingMono.static <T,V> Flux<V>combineLatest(Function<Object[],V> combinator, int prefetch, Publisher<? extends T>... sources)static <T,V> Flux<V>combineLatest(Function<Object[],V> combinator, Publisher<? extends T>... sources)static <T,V> Flux<V>combineLatest(Iterable<? extends Publisher<? extends T>> sources, Function<Object[],V> combinator)static <T,V> Flux<V>combineLatest(Iterable<? extends Publisher<? extends T>> sources, int prefetch, Function<Object[],V> combinator)static <T1,T2,V> Flux<V>combineLatest(Publisher<? extends T1> source1, Publisher<? extends T2> source2, BiFunction<? super T1,? super T2,? extends V> combinator)static <T1,T2,T3,V>

Flux<V>combineLatest(Publisher<? extends T1> source1, Publisher<? extends T2> source2, Publisher<? extends T3> source3, Function<Object[],V> combinator)static <T1,T2,T3,T4,V>

Flux<V>combineLatest(Publisher<? extends T1> source1, Publisher<? extends T2> source2, Publisher<? extends T3> source3, Publisher<? extends T4> source4, Function<Object[],V> combinator)static <T1,T2,T3,T4,T5,V>

Flux<V>combineLatest(Publisher<? extends T1> source1, Publisher<? extends T2> source2, Publisher<? extends T3> source3, Publisher<? extends T4> source4, Publisher<? extends T5> source5, Function<Object[],V> combinator)static <T1,T2,T3,T4,T5,T6,V>

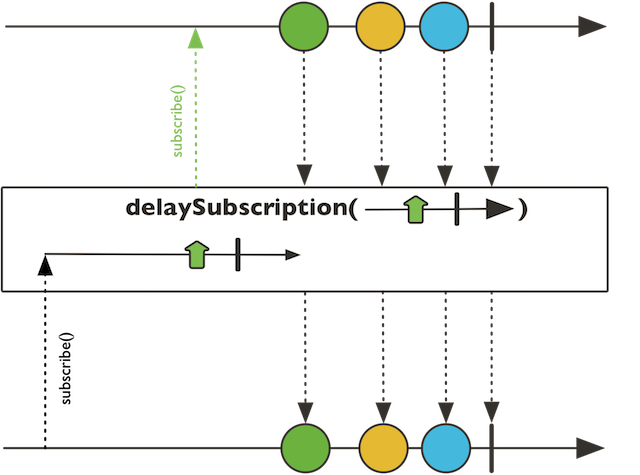

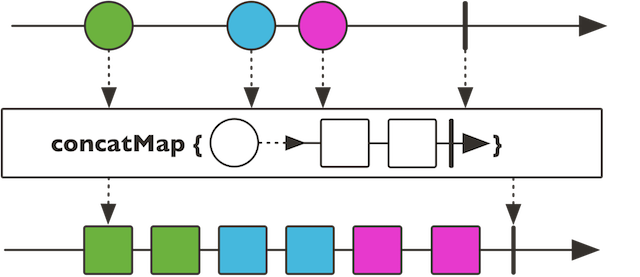

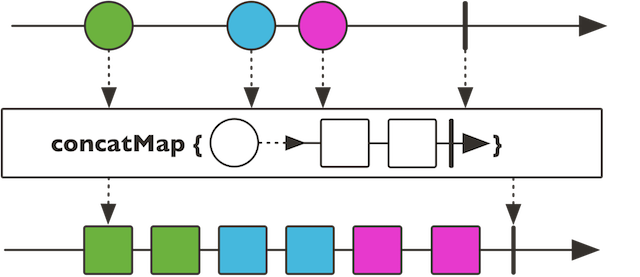

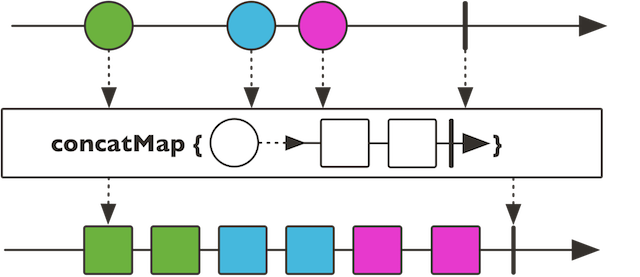

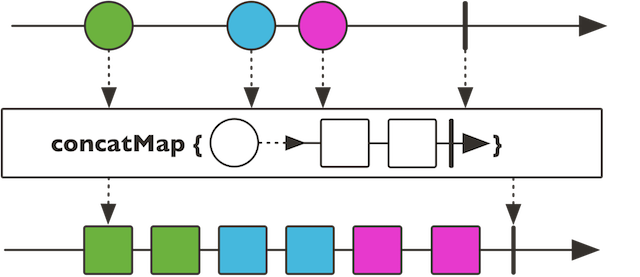

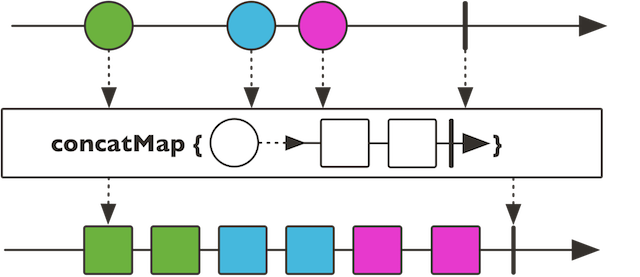

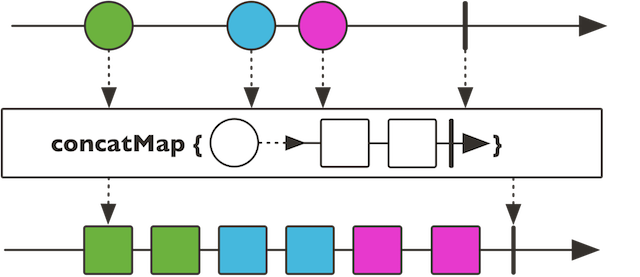

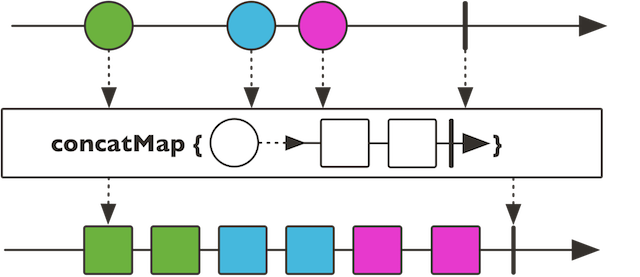

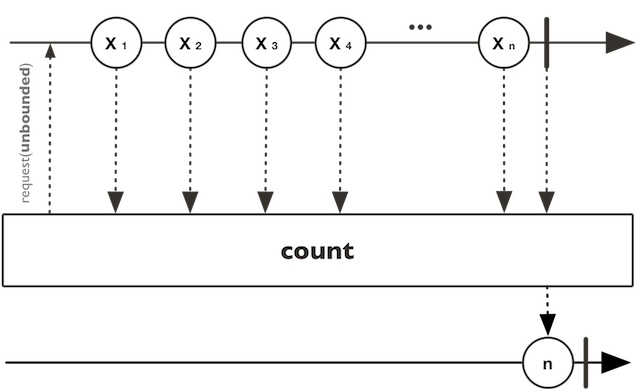

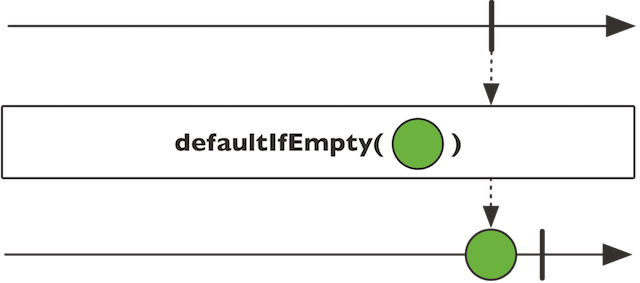

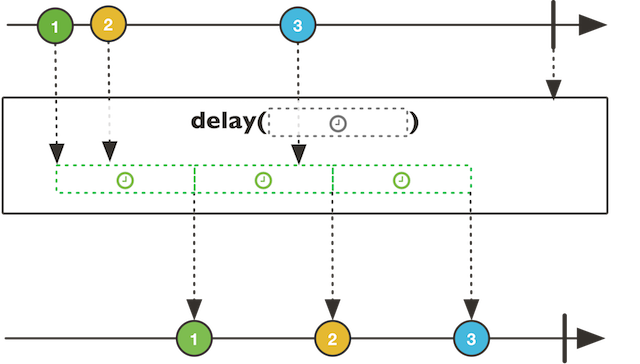

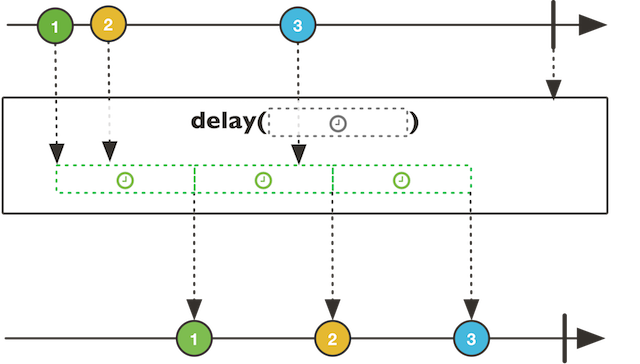

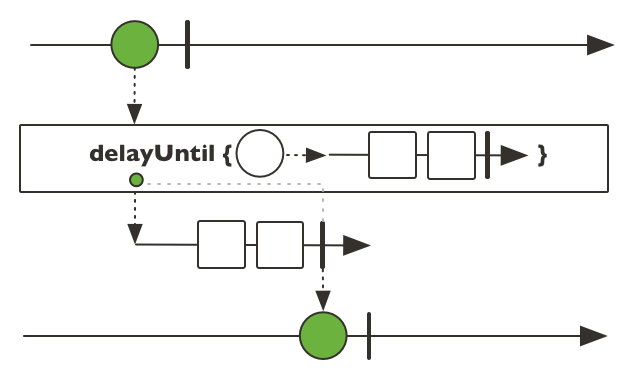

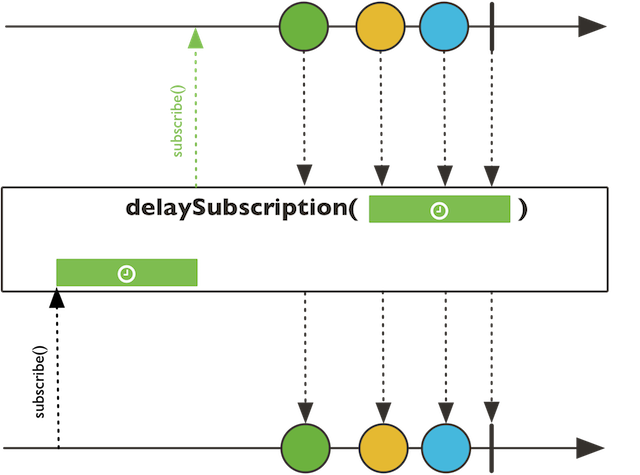

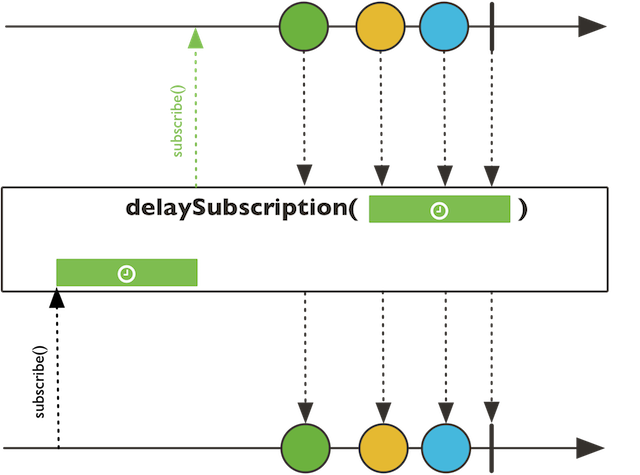

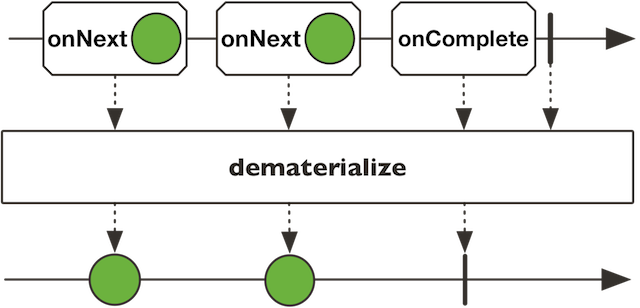

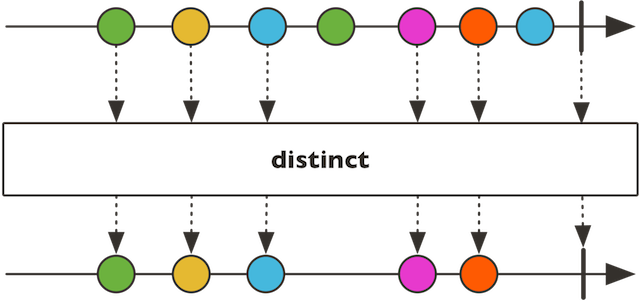

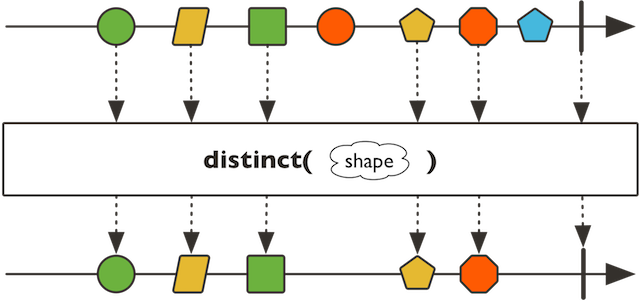

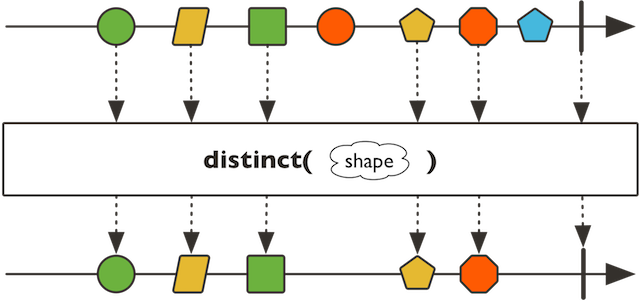

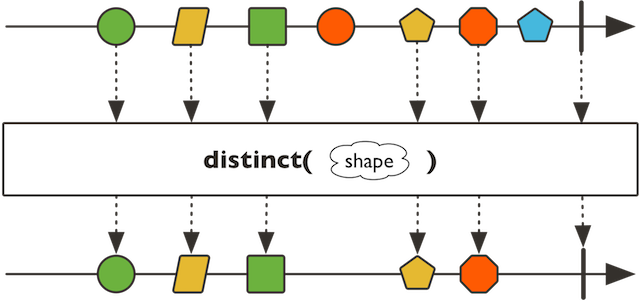

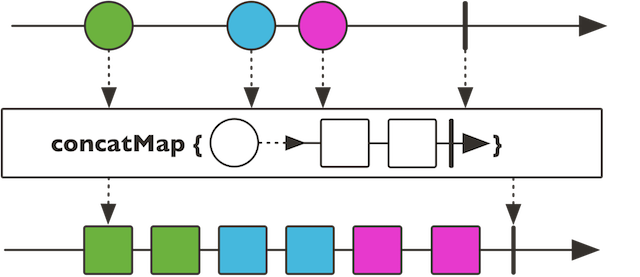

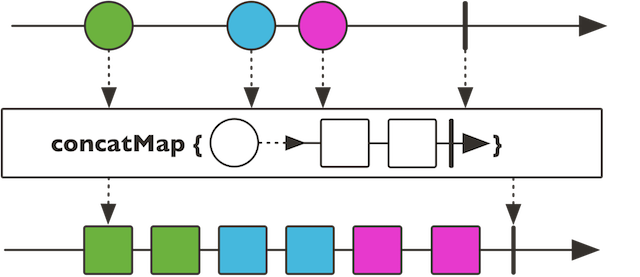

Flux<V>combineLatest(Publisher<? extends T1> source1, Publisher<? extends T2> source2, Publisher<? extends T3> source3, Publisher<? extends T4> source4, Publisher<? extends T5> source5, Publisher<? extends T6> source6, Function<Object[],V> combinator)<V> Flux<V>compose(Function<? super Flux<T>,? extends Publisher<V>> transformer)static <T> Flux<T>concat(Iterable<? extends Publisher<? extends T>> sources)Concatenate all sources provided in anIterable, forwarding elements emitted by the sources downstream.static <T> Flux<T>concat(Publisher<? extends Publisher<? extends T>> sources)Concatenate all sources emitted as an onNext signal from a parentPublisher, forwarding elements emitted by the sources downstream.static <T> Flux<T>concat(Publisher<? extends Publisher<? extends T>> sources, int prefetch)Concatenate all sources emitted as an onNext signal from a parentPublisher, forwarding elements emitted by the sources downstream.static <T> Flux<T>concat(Publisher<? extends T>... sources)Concatenate all sources provided as a vararg, forwarding elements emitted by the sources downstream.static <T> Flux<T>concatDelayError(Publisher<? extends Publisher<? extends T>> sources)Concatenate all sources emitted as an onNext signal from a parentPublisher, forwarding elements emitted by the sources downstream.static <T> Flux<T>concatDelayError(Publisher<? extends Publisher<? extends T>> sources, boolean delayUntilEnd, int prefetch)Concatenate all sources emitted as an onNext signal from a parentPublisher, forwarding elements emitted by the sources downstream.static <T> Flux<T>concatDelayError(Publisher<? extends Publisher<? extends T>> sources, int prefetch)Concatenate all sources emitted as an onNext signal from a parentPublisher, forwarding elements emitted by the sources downstream.static <T> Flux<T>concatDelayError(Publisher<? extends T>... sources)Concatenate all sources provided as a vararg, forwarding elements emitted by the sources downstream.<V> Flux<V>concatMap(Function<? 
super T,? extends Publisher<? extends V>> mapper)<V> Flux<V>concatMap(Function<? super T,? extends Publisher<? extends V>> mapper, int prefetch)<V> Flux<V>concatMapDelayError(Function<? super T,? extends Publisher<? extends V>> mapper, boolean delayUntilEnd, int prefetch)<V> Flux<V>concatMapDelayError(Function<? super T,? extends Publisher<? extends V>> mapper, int prefetch)<V> Flux<V>concatMapDelayError(Function<? super T,Publisher<? extends V>> mapper)<R> Flux<R>concatMapIterable(Function<? super T,? extends Iterable<? extends R>> mapper)<R> Flux<R>concatMapIterable(Function<? super T,? extends Iterable<? extends R>> mapper, int prefetch)Flux<T>concatWith(Publisher<? extends T> other)Flux<T>concatWithValues(T... values)Concatenates the values to the end of theFluxMono<Long>count()Counts the number of values in thisFlux.static <T> Flux<T>create(Consumer<? super FluxSink<T>> emitter)static <T> Flux<T>create(Consumer<? super FluxSink<T>> emitter, FluxSink.OverflowStrategy backpressure)Flux<T>defaultIfEmpty(T defaultV)Provide a default unique value if this sequence is completed without any datastatic <T> Flux<T>defer(Supplier<? extends Publisher<T>> supplier)Lazily supply aPublisherevery time aSubscriptionis made on the resultingFlux, so the actual source instantiation is deferred until each subscribe and theSuppliercan create a subscriber-specific instance.Flux<T>delayElements(Duration delay)Flux<T>delayElements(Duration delay, Scheduler timer)Flux<T>delaySequence(Duration delay)Flux<T>delaySequence(Duration delay, Scheduler timer)Flux<T>delaySubscription(Duration delay)Delay thesubscriptionto thisFluxsource until the given period elapses.Flux<T>delaySubscription(Duration delay, Scheduler timer)Delay thesubscriptionto thisFluxsource until the given period elapses, as measured on the user-providedScheduler.<U> Flux<T>delaySubscription(Publisher<U> subscriptionDelay)Flux<T>delayUntil(Function<? super T,? 
extends Publisher<?>> triggerProvider)<X> Flux<X>dematerialize()An operator working only if thisFluxemits onNext, onError or onCompleteSignalinstances, transforming thesematerializedsignals into real signals on theSubscriber.Flux<T>distinct()For eachSubscriber, track elements from thisFluxthat have been seen and filter out duplicates.<V> Flux<T>distinct(Function<? super T,? extends V> keySelector)For eachSubscriber, track elements from thisFluxthat have been seen and filter out duplicates, as compared by a key extracted through the user providedFunction.<V,C extends Collection<? super V>>

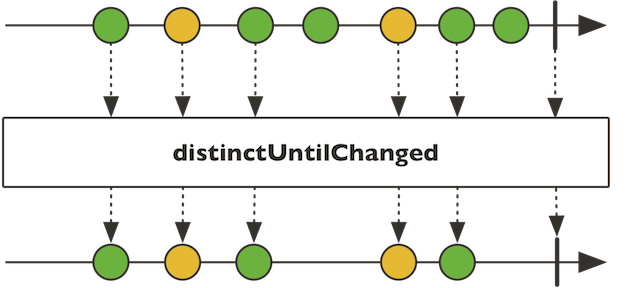

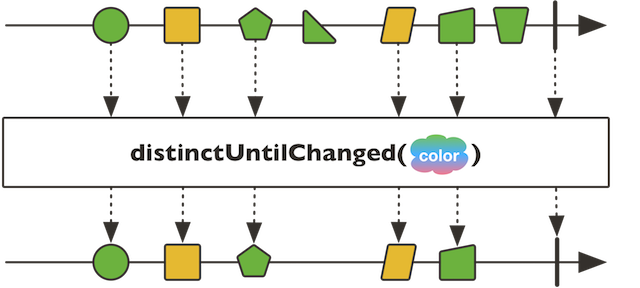

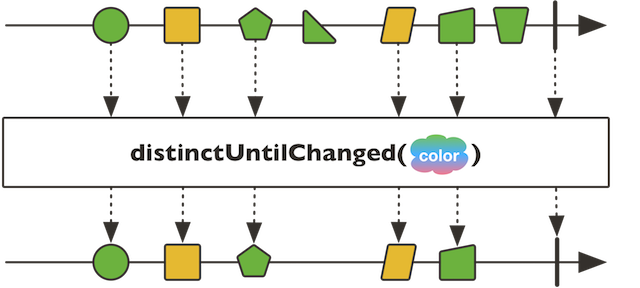

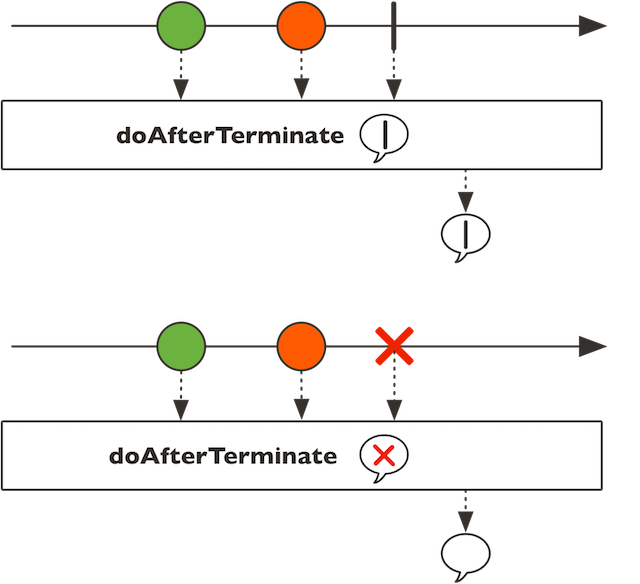

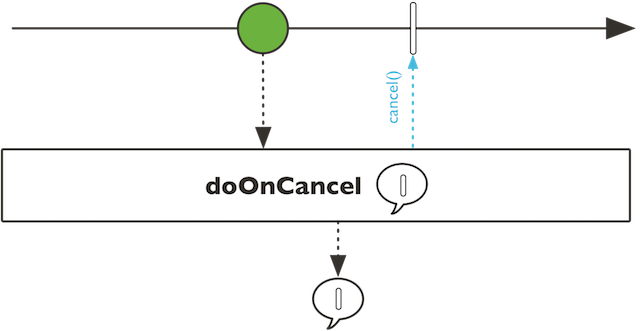

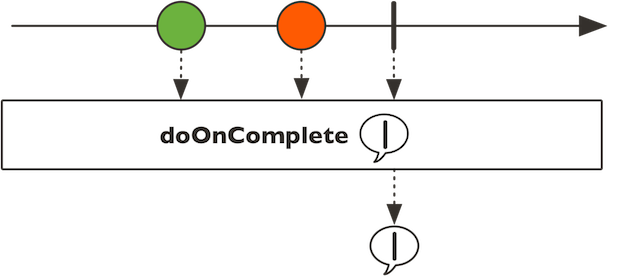

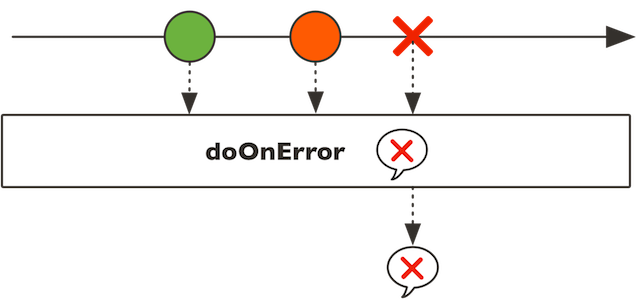

Flux<T>distinct(Function<? super T,? extends V> keySelector, Supplier<C> distinctCollectionSupplier)For eachSubscriber, track elements from thisFluxthat have been seen and filter out duplicates, as compared by a key extracted through the user providedFunctionand by theadd methodof theCollectionsupplied (typically aSet).<V,C> Flux<T>distinct(Function<? super T,? extends V> keySelector, Supplier<C> distinctStoreSupplier, BiPredicate<C,V> distinctPredicate, Consumer<C> cleanup)For eachSubscriber, track elements from thisFluxthat have been seen and filter out duplicates, as compared by applying aBiPredicateon an arbitrary user-supplied<C>store and a key extracted through the user providedFunction.Flux<T>distinctUntilChanged()Filter out subsequent repetitions of an element (that is, if they arrive right after one another).<V> Flux<T>distinctUntilChanged(Function<? super T,? extends V> keySelector)Filter out subsequent repetitions of an element (that is, if they arrive right after one another), as compared by a key extracted through the user providedFunctionusing equality.<V> Flux<T>distinctUntilChanged(Function<? super T,? extends V> keySelector, BiPredicate<? super V,? super V> keyComparator)Filter out subsequent repetitions of an element (that is, if they arrive right after one another), as compared by a key extracted through the user providedFunctionand then comparing keys with the suppliedBiPredicate.Flux<T>doAfterTerminate(Runnable afterTerminate)Add behavior (side-effect) triggered after theFluxterminates, either by completing downstream successfully or with an error.Flux<T>doFinally(Consumer<SignalType> onFinally)Add behavior (side-effect) triggered after theFluxterminates for any reason, including cancellation.Flux<T>doOnCancel(Runnable onCancel)Add behavior (side-effect) triggered when theFluxis cancelled.Flux<T>doOnComplete(Runnable onComplete)Add behavior (side-effect) triggered when theFluxcompletes successfully.Flux<T>doOnEach(Consumer<? 
super Signal<T>> signalConsumer)Add behavior (side-effects) triggered when theFluxemits an item, fails with an error or completes successfully.<E extends Throwable>

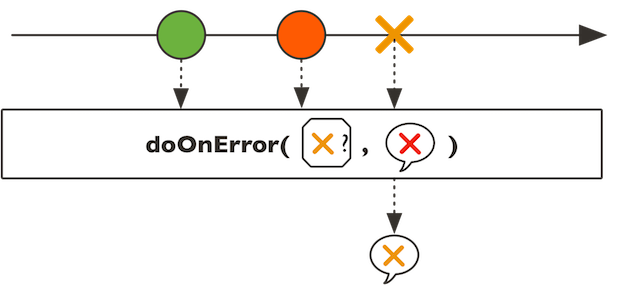

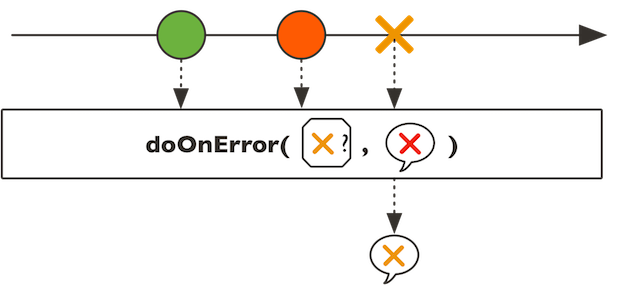

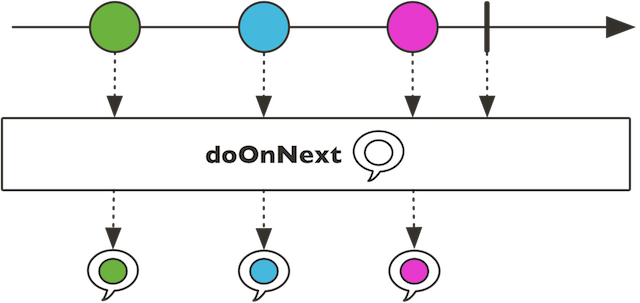

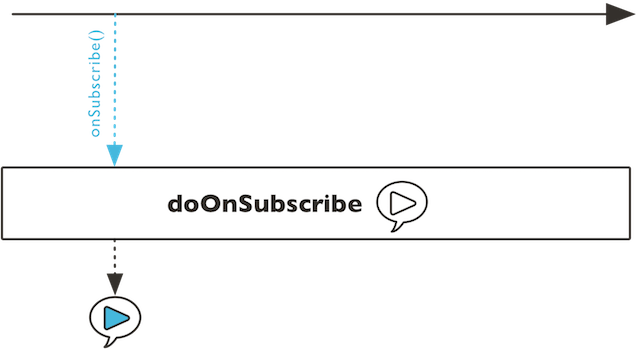

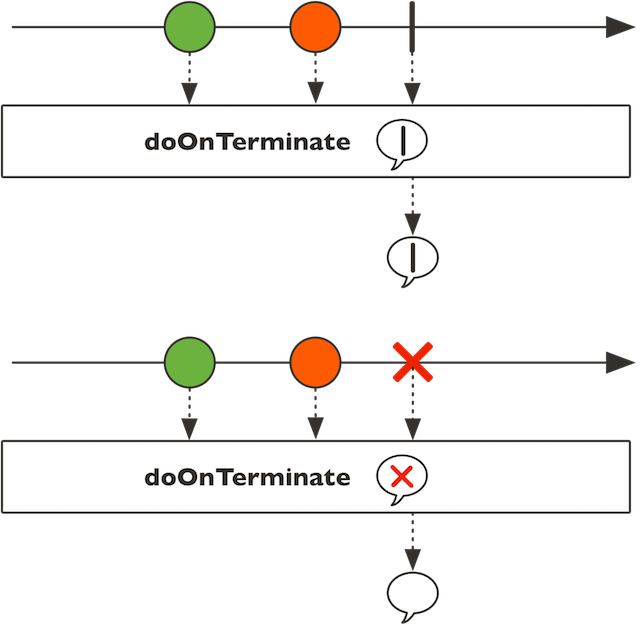

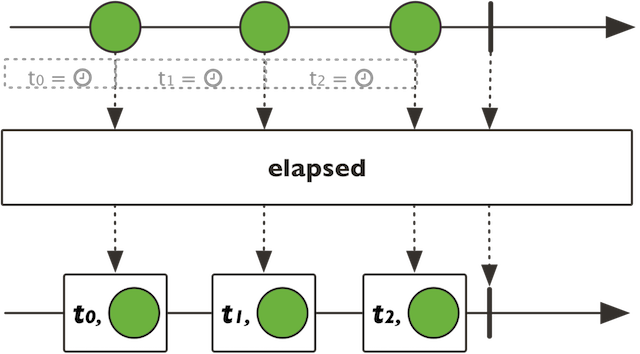

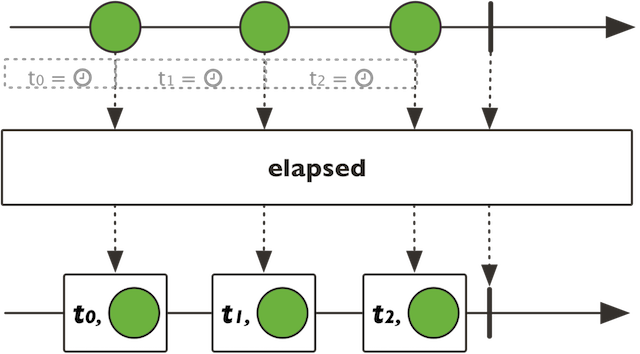

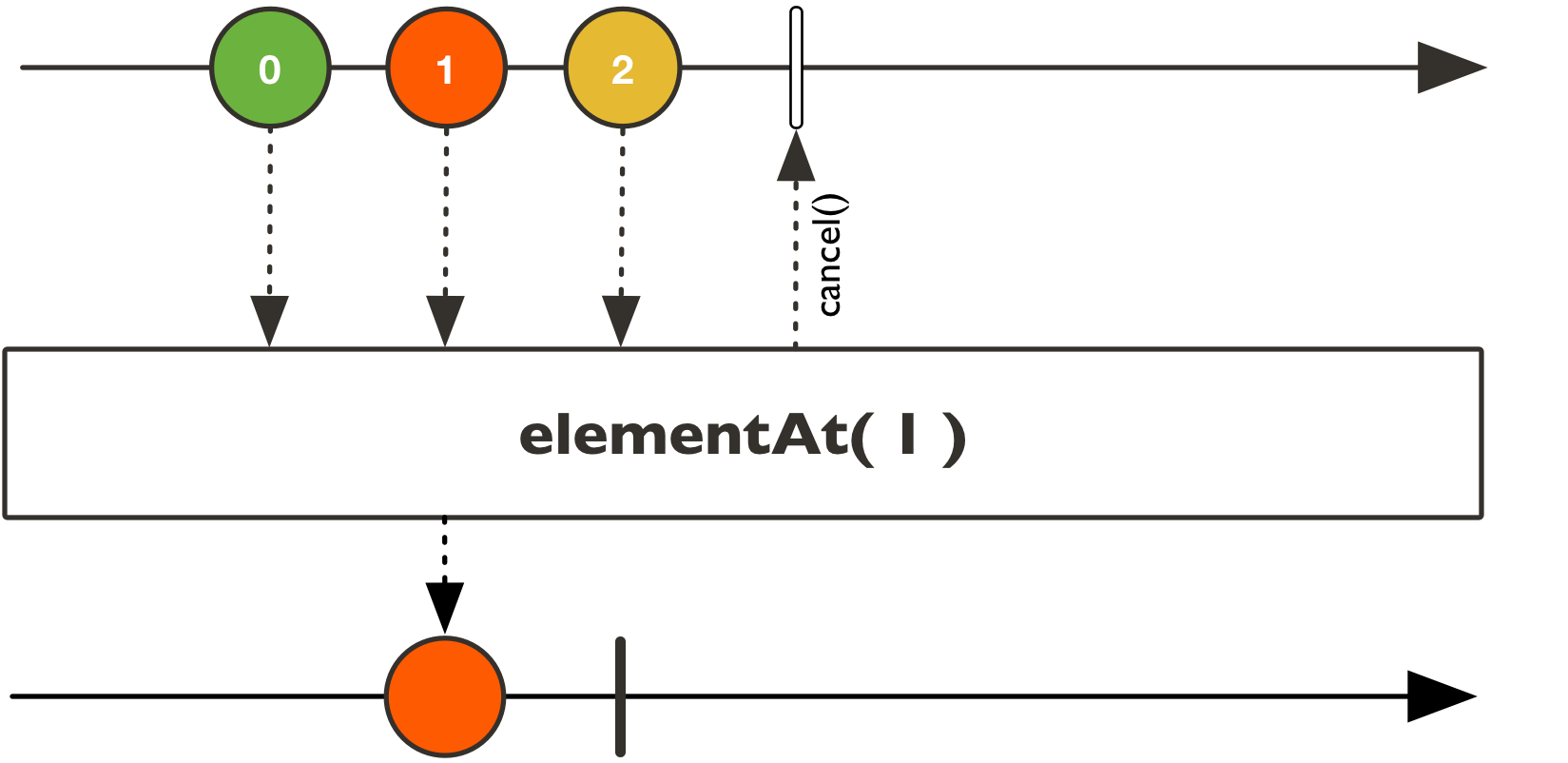

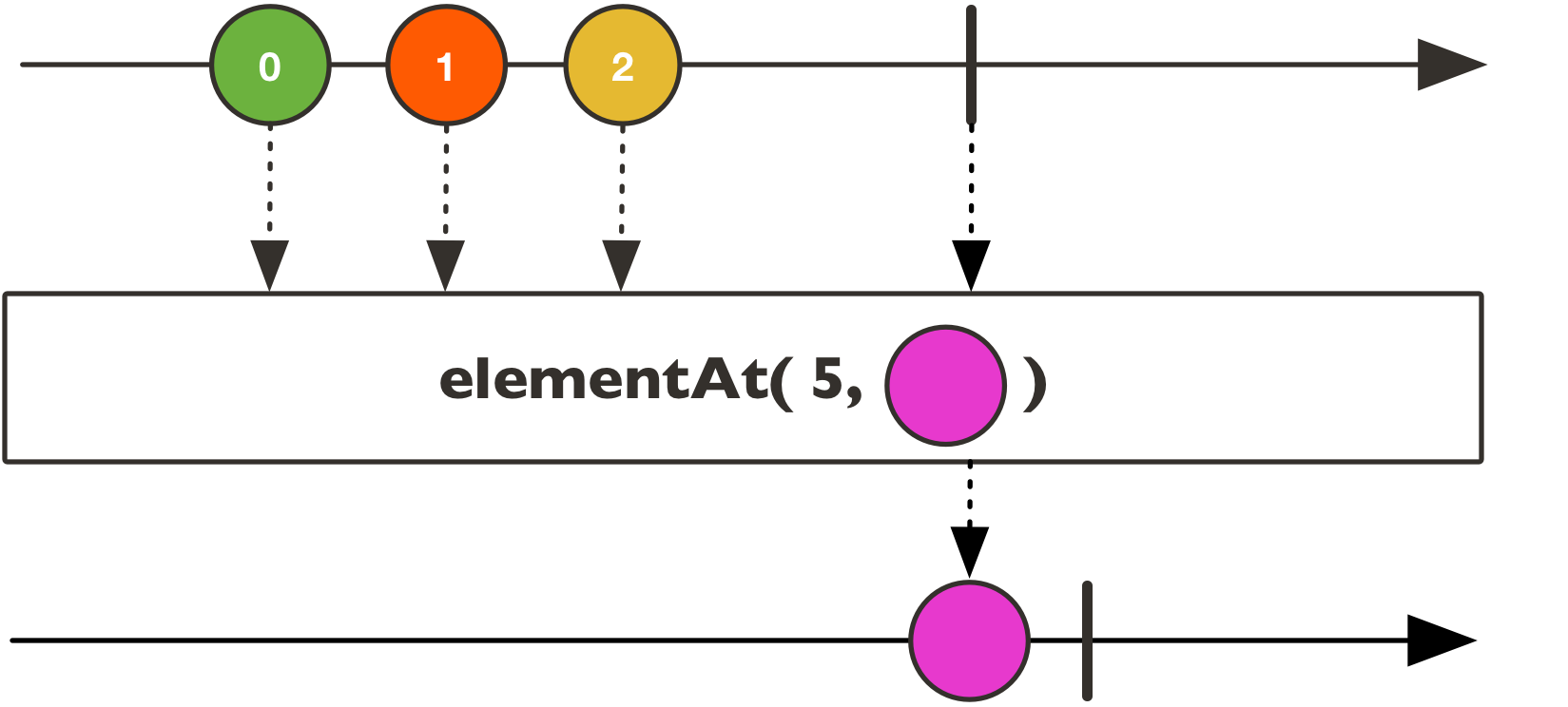

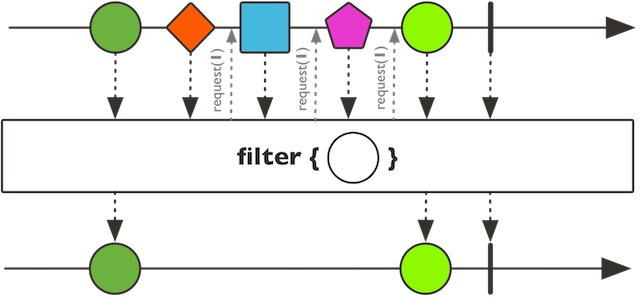

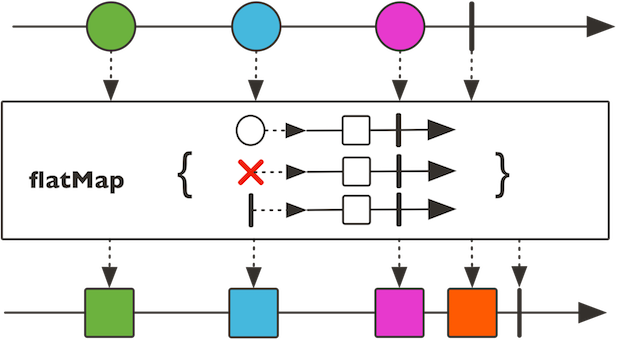

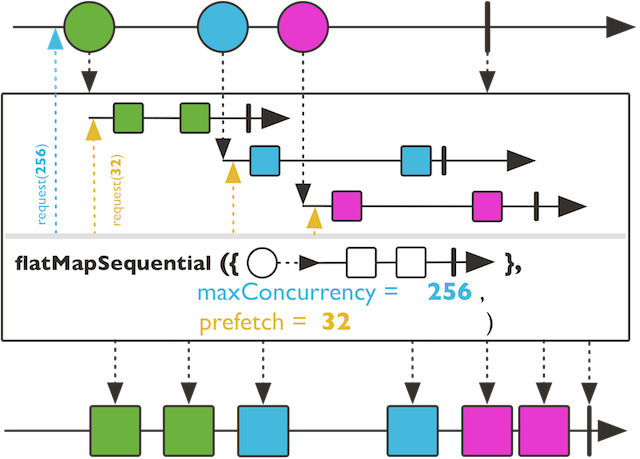

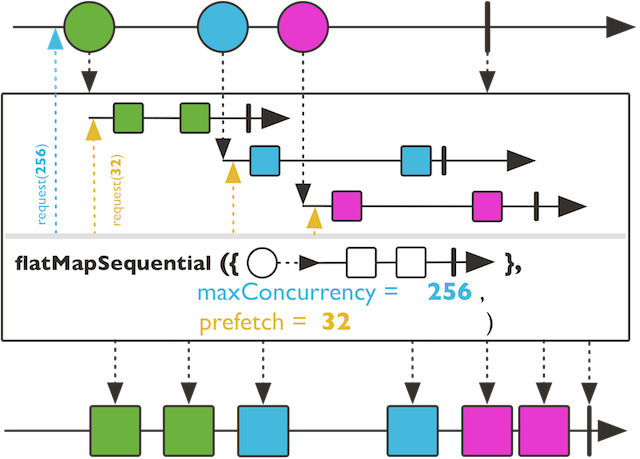

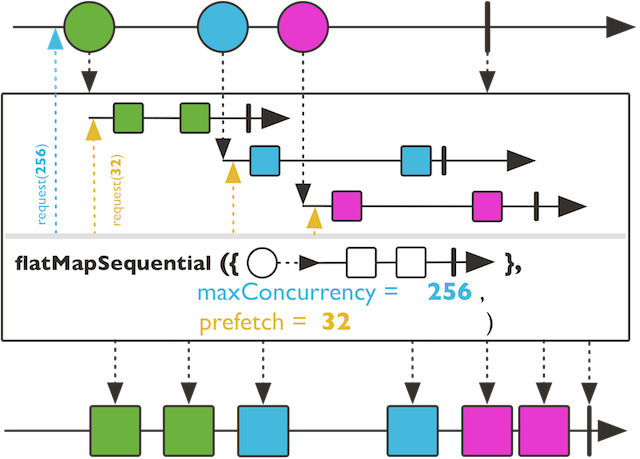

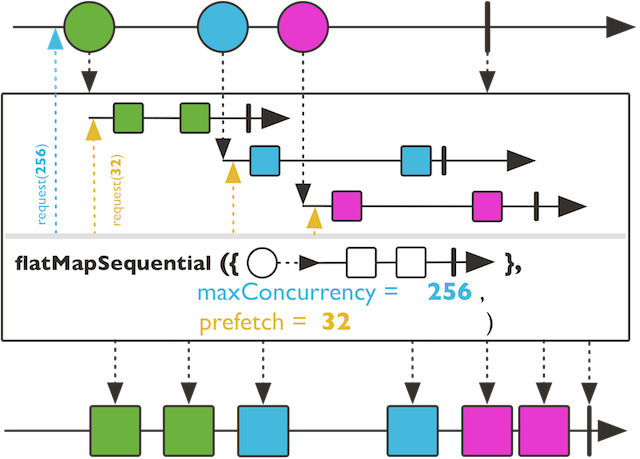

Flux<T>doOnError(Class<E> exceptionType, Consumer<? super E> onError)Add behavior (side-effect) triggered when theFluxcompletes with an error matching the given exception type.Flux<T>doOnError(Consumer<? super Throwable> onError)Add behavior (side-effect) triggered when theFluxcompletes with an error.Flux<T>doOnError(Predicate<? super Throwable> predicate, Consumer<? super Throwable> onError)Add behavior (side-effect) triggered when theFluxcompletes with an error matching the given exception.Flux<T>doOnNext(Consumer<? super T> onNext)Add behavior (side-effect) triggered when theFluxemits an item.Flux<T>doOnRequest(LongConsumer consumer)Add behavior (side-effect) triggering aLongConsumerwhen thisFluxreceives any request.Flux<T>doOnSubscribe(Consumer<? super Subscription> onSubscribe)Add behavior (side-effect) triggered when theFluxis subscribed.Flux<T>doOnTerminate(Runnable onTerminate)Add behavior (side-effect) triggered when theFluxterminates, either by completing successfully or with an error.Flux<Tuple2<Long,T>>elapsed()Map thisFluxintoTuple2<Long, T>of timemillis and source data.Flux<Tuple2<Long,T>>elapsed(Scheduler scheduler)Map thisFluxintoTuple2<Long, T>of timemillis and source data.Mono<T>elementAt(int index)Emit only the element at the given index position orIndexOutOfBoundsExceptionif the sequence is shorter.Mono<T>elementAt(int index, T defaultValue)Emit only the element at the given index position or fall back to a default value if the sequence is shorter.static <T> Flux<T>empty()Create aFluxthat completes without emitting any item.static <T> Flux<T>error(Throwable error)Create aFluxthat terminates with the specified error immediately after being subscribed to.static <O> Flux<O>error(Throwable throwable, boolean whenRequested)Create aFluxthat terminates with the specified error, either immediately after being subscribed to or after being first requested.Flux<T>expand(Function<? super T,? extends Publisher<? 
extends T>> expander)Recursively expand elements into a graph and emit all the resulting element using a breadth-first traversal strategy.Flux<T>expand(Function<? super T,? extends Publisher<? extends T>> expander, int capacityHint)Recursively expand elements into a graph and emit all the resulting element using a breadth-first traversal strategy.Flux<T>expandDeep(Function<? super T,? extends Publisher<? extends T>> expander)Recursively expand elements into a graph and emit all the resulting element, in a depth-first traversal order.Flux<T>expandDeep(Function<? super T,? extends Publisher<? extends T>> expander, int capacityHint)Recursively expand elements into a graph and emit all the resulting element, in a depth-first traversal order.Flux<T>filter(Predicate<? super T> p)Evaluate each source value against the givenPredicate.Flux<T>filterWhen(Function<? super T,? extends Publisher<Boolean>> asyncPredicate)Test each value emitted by thisFluxasynchronously using a generatedPublisher<Boolean>test.Flux<T>filterWhen(Function<? super T,? extends Publisher<Boolean>> asyncPredicate, int bufferSize)Test each value emitted by thisFluxasynchronously using a generatedPublisher<Boolean>test.static <I> Flux<I>first(Iterable<? extends Publisher<? extends I>> sources)static <I> Flux<I>first(Publisher<? extends I>... sources)<R> Flux<R>flatMap(Function<? super T,? extends Publisher<? extends R>> mapper)<R> Flux<R>flatMap(Function<? super T,? extends Publisher<? extends R>> mapperOnNext, Function<? super Throwable,? extends Publisher<? extends R>> mapperOnError, Supplier<? extends Publisher<? extends R>> mapperOnComplete)<V> Flux<V>flatMap(Function<? super T,? extends Publisher<? extends V>> mapper, int concurrency)<V> Flux<V>flatMap(Function<? super T,? extends Publisher<? extends V>> mapper, int concurrency, int prefetch)<V> Flux<V>flatMapDelayError(Function<? super T,? extends Publisher<? extends V>> mapper, int concurrency, int prefetch)<R> Flux<R>flatMapIterable(Function<? 
super T,? extends Iterable<? extends R>> mapper)<R> Flux<R>flatMapIterable(Function<? super T,? extends Iterable<? extends R>> mapper, int prefetch)<R> Flux<R>flatMapSequential(Function<? super T,? extends Publisher<? extends R>> mapper)<R> Flux<R>flatMapSequential(Function<? super T,? extends Publisher<? extends R>> mapper, int maxConcurrency)<R> Flux<R>flatMapSequential(Function<? super T,? extends Publisher<? extends R>> mapper, int maxConcurrency, int prefetch)<R> Flux<R>flatMapSequentialDelayError(Function<? super T,? extends Publisher<? extends R>> mapper, int maxConcurrency, int prefetch)static <T> Flux<T>from(Publisher<? extends T> source)static <T> Flux<T>fromArray(T[] array)Create aFluxthat emits the items contained in the provided array.static <T> Flux<T>fromIterable(Iterable<? extends T> it)static <T> Flux<T>fromStream(Stream<? extends T> s)static <T> Flux<T>fromStream(Supplier<Stream<? extends T>> streamSupplier)static <T,S> Flux<T>generate(Callable<S> stateSupplier, BiFunction<S,SynchronousSink<T>,S> generator)Programmatically create aFluxby generating signals one-by-one via a consumer callback and some state.static <T,S> Flux<T>generate(Callable<S> stateSupplier, BiFunction<S,SynchronousSink<T>,S> generator, Consumer<? super S> stateConsumer)Programmatically create aFluxby generating signals one-by-one via a consumer callback and some state, with a final cleanup callback.static <T> Flux<T>generate(Consumer<SynchronousSink<T>> generator)Programmatically create aFluxby generating signals one-by-one via a consumer callback.intgetPrefetch()The prefetch configuration of theFlux<K> Flux<GroupedFlux<K,T>>groupBy(Function<? super T,? extends K> keyMapper)<K,V> Flux<GroupedFlux<K,V>>groupBy(Function<? super T,? extends K> keyMapper, Function<? super T,? extends V> valueMapper)<K,V> Flux<GroupedFlux<K,V>>groupBy(Function<? super T,? extends K> keyMapper, Function<? super T,? extends V> valueMapper, int prefetch)<K> Flux<GroupedFlux<K,T>>groupBy(Function<? 
super T,? extends K> keyMapper, int prefetch)<TRight,TLeftEnd,TRightEnd,R>

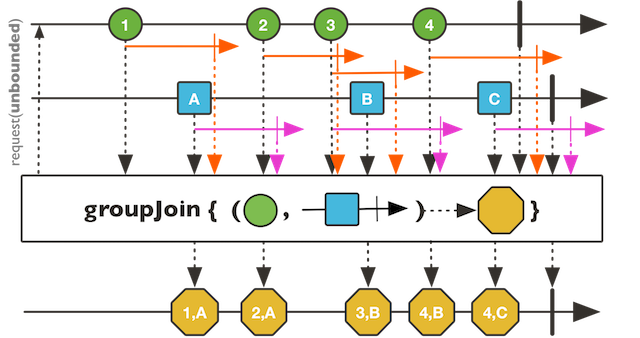

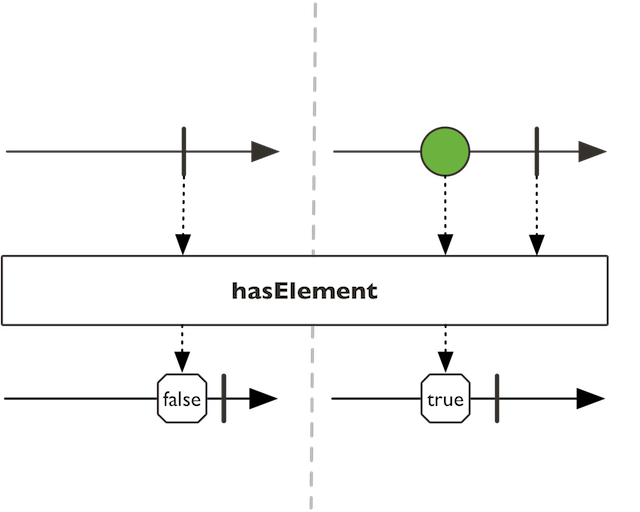

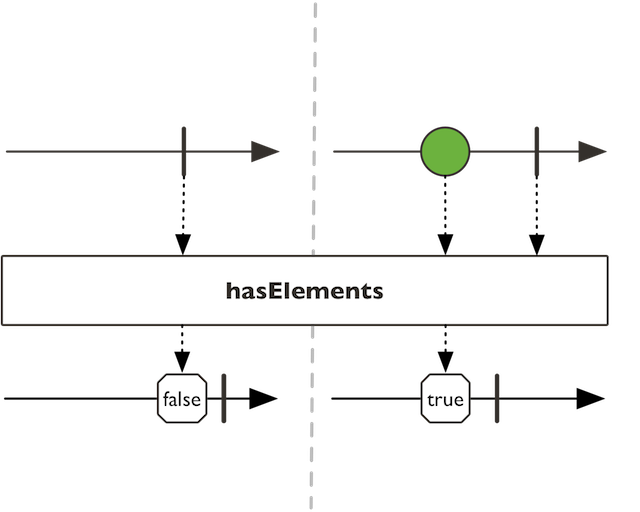

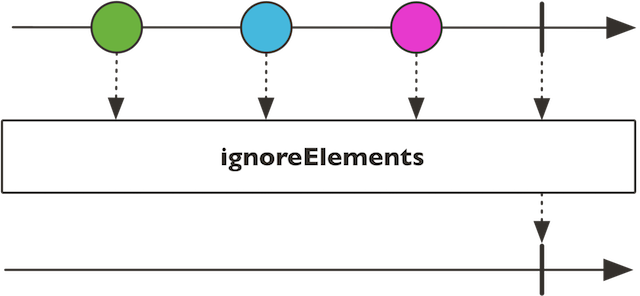

Flux<R>groupJoin(Publisher<? extends TRight> other, Function<? super T,? extends Publisher<TLeftEnd>> leftEnd, Function<? super TRight,? extends Publisher<TRightEnd>> rightEnd, BiFunction<? super T,? super Flux<TRight>,? extends R> resultSelector)Map values from two Publishers into time windows and emit combination of values in case their windows overlap.<R> Flux<R>handle(BiConsumer<? super T,SynchronousSink<R>> handler)Handle the items emitted by thisFluxby calling a biconsumer with the output sink for each onNext.Mono<Boolean>hasElement(T value)Emit a single boolean true if any of the elements of thisFluxsequence is equal to the provided value.Mono<Boolean>hasElements()Emit a single boolean true if thisFluxsequence has at least one element.Flux<T>hide()Hides the identities of thisFluxinstance.Mono<T>ignoreElements()Ignores onNext signals (dropping them) and only propagate termination events.Flux<Tuple2<Long,T>>index()Keep information about the order in which source values were received by indexing them with a 0-based incrementing long, returning aFluxofTuple2<(index, value)>.<I> Flux<I>index(BiFunction<? super Long,? super T,? extends I> indexMapper)Keep information about the order in which source values were received by indexing them internally with a 0-based incrementing long then combining this information with the source value into aIusing the providedBiFunction, returning aFlux<I>.static Flux<Long>interval(Duration period)Create aFluxthat emits long values starting with 0 and incrementing at specified time intervals on the global timer.static Flux<Long>interval(Duration delay, Duration period)Create aFluxthat emits long values starting with 0 and incrementing at specified time intervals, after an initial delay, on the global timer.static Flux<Long>interval(Duration delay, Duration period, Scheduler timer)static Flux<Long>interval(Duration period, Scheduler timer)<TRight,TLeftEnd,TRightEnd,R>

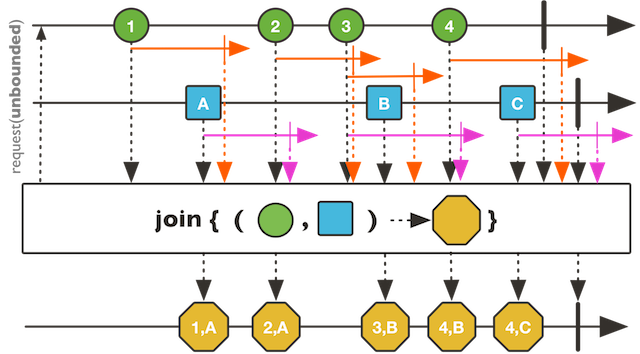

Flux<R>join(Publisher<? extends TRight> other, Function<? super T,? extends Publisher<TLeftEnd>> leftEnd, Function<? super TRight,? extends Publisher<TRightEnd>> rightEnd, BiFunction<? super T,? super TRight,? extends R> resultSelector)Map values from two Publishers into time windows and emit combination of values in case their windows overlap.static <T> Flux<T>just(T... data)Create aFluxthat emits the provided elements and then completes.static <T> Flux<T>just(T data)Create a newFluxthat will only emit a single element then onComplete.Mono<T>last()Emit the last element observed before complete signal as aMono, or emitNoSuchElementExceptionerror if the source was empty.Mono<T>last(T defaultValue)Emit the last element observed before complete signal as aMono, or emit thedefaultValueif the source was empty.Flux<T>limitRate(int prefetchRate)Ensure that backpressure signals from downstream subscribers are split into batches capped at the providedprefetchRatewhen propagated upstream, effectively rate limiting the upstreamPublisher.Flux<T>limitRate(int highTide, int lowTide)Ensure that backpressure signals from downstream subscribers are split into batches capped at the providedhighTidefirst, then replenishing at the providedlowTide, effectively rate limiting the upstreamPublisher.Flux<T>limitRequest(long requestCap)Ensure that the total amount requested upstream is capped atcap.Flux<T>log()Observe all Reactive Streams signals and trace them usingLoggersupport.Flux<T>log(Logger logger)Observe Reactive Streams signals matching the passed filteroptionsand trace them using a specific user-providedLogger, atLevel.INFOlevel.Flux<T>log(Logger logger, Level level, boolean showOperatorLine, SignalType... options)Flux<T>log(String category)Observe all Reactive Streams signals and trace them usingLoggersupport.Flux<T>log(String category, Level level, boolean showOperatorLine, SignalType... 
options)Observe Reactive Streams signals matching the passed filteroptionsand trace them usingLoggersupport.Flux<T>log(String category, Level level, SignalType... options)Observe Reactive Streams signals matching the passed filteroptionsand trace them usingLoggersupport.<V> Flux<V>map(Function<? super T,? extends V> mapper)Transform the items emitted by thisFluxby applying a synchronous function to each item.Flux<Signal<T>>materialize()Transform incoming onNext, onError and onComplete signals intoSignalinstances, materializing these signals.static <I> Flux<I>merge(int prefetch, Publisher<? extends I>... sources)Merge data fromPublishersequences contained in an array / vararg into an interleaved merged sequence.static <I> Flux<I>merge(Iterable<? extends Publisher<? extends I>> sources)static <I> Flux<I>merge(Publisher<? extends I>... sources)Merge data fromPublishersequences contained in an array / vararg into an interleaved merged sequence.static <T> Flux<T>merge(Publisher<? extends Publisher<? extends T>> source)static <T> Flux<T>merge(Publisher<? extends Publisher<? extends T>> source, int concurrency)static <T> Flux<T>merge(Publisher<? extends Publisher<? extends T>> source, int concurrency, int prefetch)static <I> Flux<I>mergeDelayError(int prefetch, Publisher<? extends I>... sources)Merge data fromPublishersequences contained in an array / vararg into an interleaved merged sequence.static <T> Flux<T>mergeOrdered(Comparator<? super T> comparator, Publisher<? extends T>... sources)Merge data from providedPublishersequences into an ordered merged sequence, by picking the smallest values from each source (as defined by the providedComparator).static <T> Flux<T>mergeOrdered(int prefetch, Comparator<? super T> comparator, Publisher<? extends T>... sources)Merge data from providedPublishersequences into an ordered merged sequence, by picking the smallest values from each source (as defined by the providedComparator).static <I extends Comparable<? super I>>

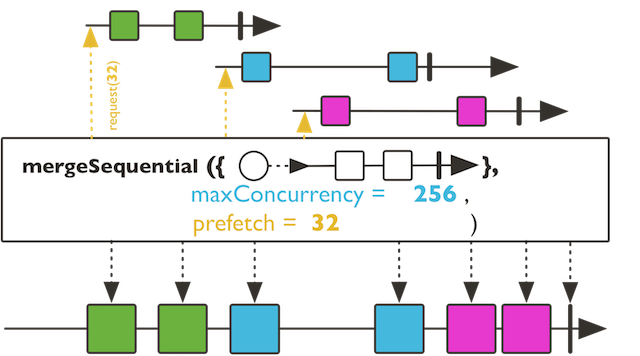

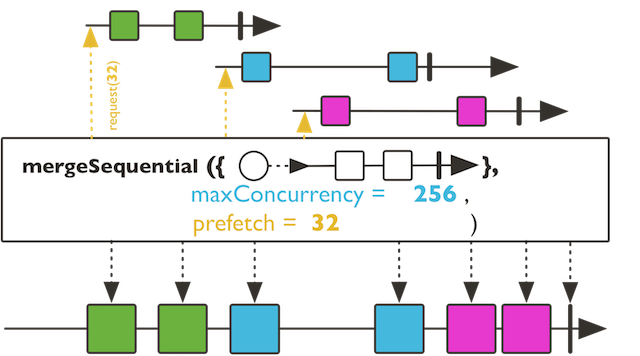

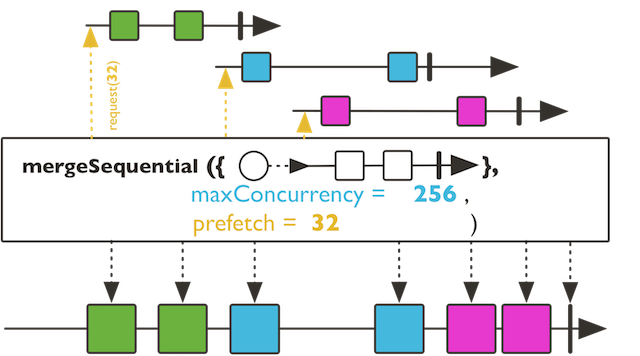

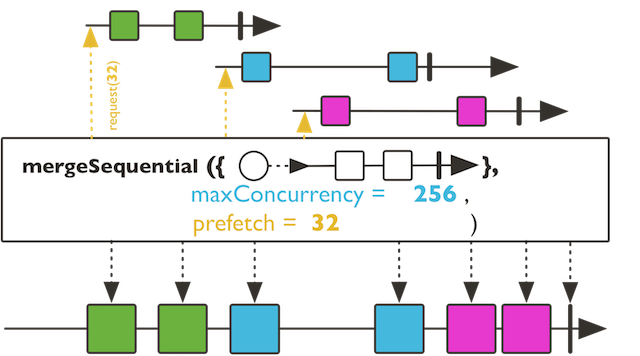

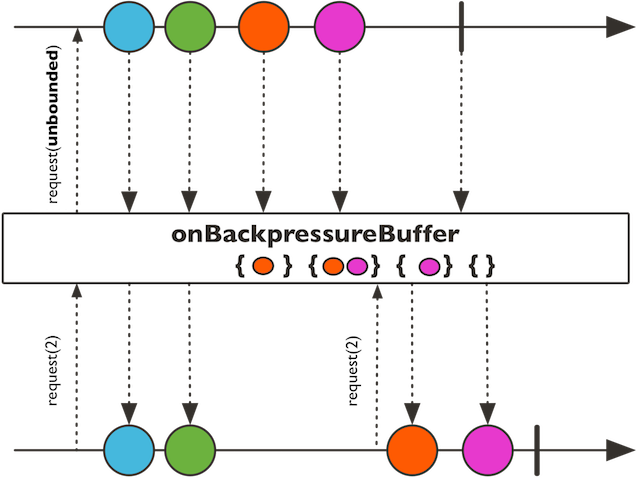

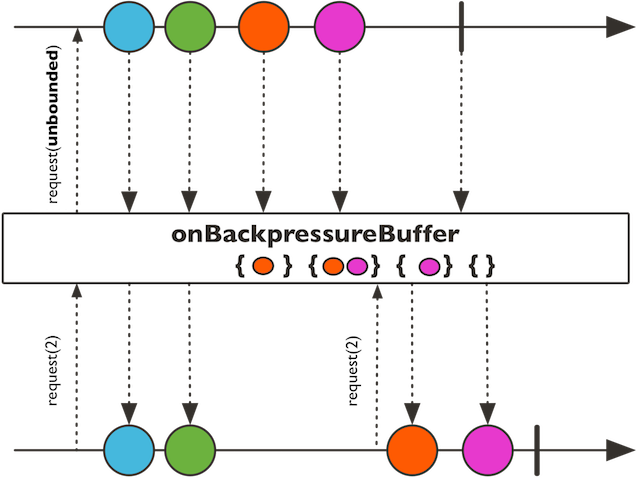

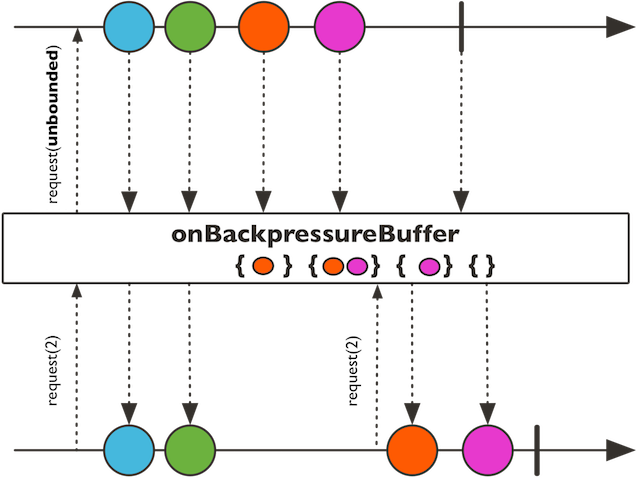

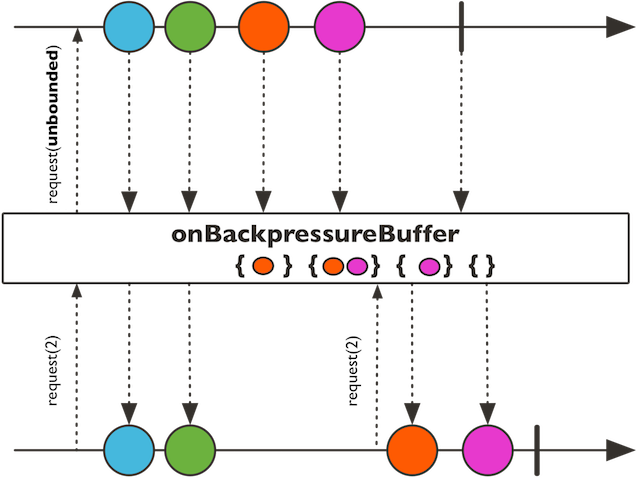

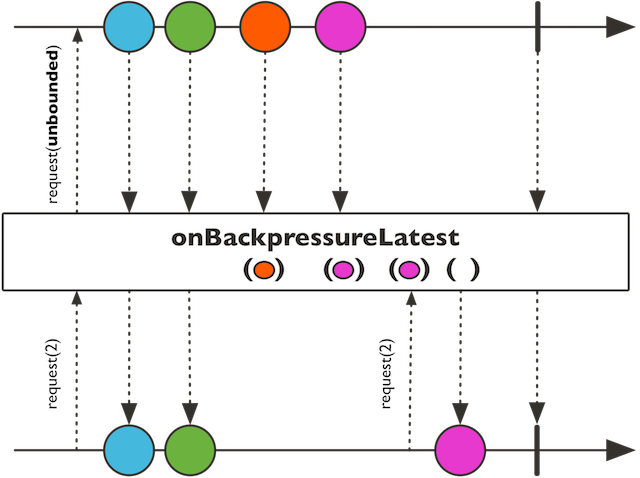

Flux<I>mergeOrdered(Publisher<? extends I>... sources)Merge data from providedPublishersequences into an ordered merged sequence, by picking the smallest values from each source (as defined by their natural order).Flux<T>mergeOrderedWith(Publisher<? extends T> other, Comparator<? super T> otherComparator)Merge data from thisFluxand aPublisherinto a reordered merge sequence, by picking the smallest value from each sequence as defined by a providedComparator.static <I> Flux<I>mergeSequential(int prefetch, Publisher<? extends I>... sources)Merge data fromPublishersequences provided in an array/vararg into an ordered merged sequence.static <I> Flux<I>mergeSequential(Iterable<? extends Publisher<? extends I>> sources)static <I> Flux<I>mergeSequential(Iterable<? extends Publisher<? extends I>> sources, int maxConcurrency, int prefetch)static <I> Flux<I>mergeSequential(Publisher<? extends I>... sources)Merge data fromPublishersequences provided in an array/vararg into an ordered merged sequence.static <T> Flux<T>mergeSequential(Publisher<? extends Publisher<? extends T>> sources)static <T> Flux<T>mergeSequential(Publisher<? extends Publisher<? extends T>> sources, int maxConcurrency, int prefetch)static <I> Flux<I>mergeSequentialDelayError(int prefetch, Publisher<? extends I>... sources)Merge data fromPublishersequences provided in an array/vararg into an ordered merged sequence.static <I> Flux<I>mergeSequentialDelayError(Iterable<? extends Publisher<? extends I>> sources, int maxConcurrency, int prefetch)static <T> Flux<T>mergeSequentialDelayError(Publisher<? extends Publisher<? extends T>> sources, int maxConcurrency, int prefetch)Flux<T>mergeWith(Publisher<? 
extends T> other)Flux<T>name(String name)Give a name to this sequence, which can be retrieved usingScannable.name()as long as this is the first reachableScannable.parents().static <T> Flux<T>never()Create aFluxthat will never signal any data, error or completion signal.Mono<T>next()<U> Flux<U>ofType(Class<U> clazz)Evaluate each accepted value against the givenClasstype.protected static <T> ConnectableFlux<T>onAssembly(ConnectableFlux<T> source)To be used by custom operators: invokes assemblyHookspointcut given aConnectableFlux, potentially returning a newConnectableFlux.protected static <T> Flux<T>onAssembly(Flux<T> source)Flux<T>onBackpressureBuffer()Request an unbounded demand and push to the returnedFlux, or park the observed elements if not enough demand is requested downstream.Flux<T>onBackpressureBuffer(Duration ttl, int maxSize, Consumer<? super T> onBufferEviction)Request an unbounded demand and push to the returnedFlux, or park the observed elements if not enough demand is requested downstream, within amaxSizelimit and for a maximumDurationofttl(as measured on theelastic Scheduler).Flux<T>onBackpressureBuffer(Duration ttl, int maxSize, Consumer<? super T> onBufferEviction, Scheduler scheduler)Flux<T>onBackpressureBuffer(int maxSize)Request an unbounded demand and push to the returnedFlux, or park the observed elements if not enough demand is requested downstream.Flux<T>onBackpressureBuffer(int maxSize, BufferOverflowStrategy bufferOverflowStrategy)Request an unbounded demand and push to the returnedFlux, or park the observed elements if not enough demand is requested downstream, within amaxSizelimit.Flux<T>onBackpressureBuffer(int maxSize, Consumer<? super T> onOverflow)Request an unbounded demand and push to the returnedFlux, or park the observed elements if not enough demand is requested downstream.Flux<T>onBackpressureBuffer(int maxSize, Consumer<? 
super T> onBufferOverflow, BufferOverflowStrategy bufferOverflowStrategy)
Request an unbounded demand and push to the returned Flux, or park the observed elements if not enough demand is requested downstream, within a maxSize limit.

Flux<T> onBackpressureDrop()
Request an unbounded demand and push to the returned Flux, or drop the observed elements if not enough demand is requested downstream.

Flux<T> onBackpressureDrop(Consumer<? super T> onDropped)

Flux<T> onBackpressureError()
Request an unbounded demand and push to the returned Flux, or emit onError from Exceptions.failWithOverflow() if not enough demand is requested downstream.

Flux<T> onBackpressureLatest()
Request an unbounded demand and push to the returned Flux, or only keep the most recent observed item if not enough demand is requested downstream.

<E extends Throwable>

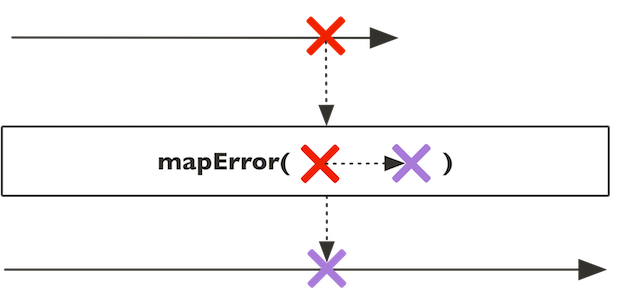

Flux<T> onErrorMap(Class<E> type, Function<? super E,? extends Throwable> mapper)
Transform an error emitted by this Flux by synchronously applying a function to it if the error matches the given type.

Flux<T> onErrorMap(Function<? super Throwable,? extends Throwable> mapper)
Transform any error emitted by this Flux by synchronously applying a function to it.

Flux<T> onErrorMap(Predicate<? super Throwable> predicate, Function<? super Throwable,? extends Throwable> mapper)
Transform an error emitted by this Flux by synchronously applying a function to it if the error matches the given predicate.

<E extends Throwable>

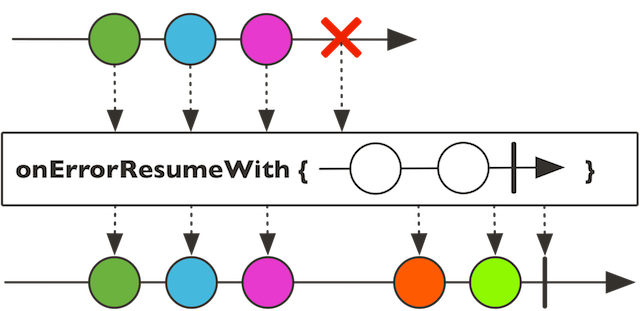

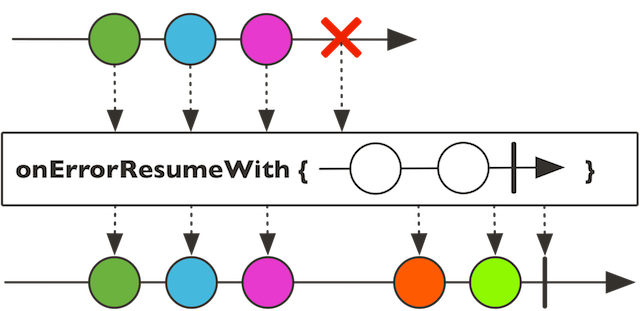

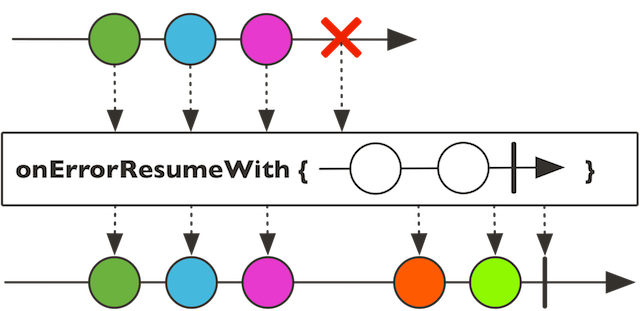

Flux<T> onErrorResume(Class<E> type, Function<? super E,? extends Publisher<? extends T>> fallback)
Subscribe to a fallback publisher when an error matching the given type occurs, using a function to choose the fallback depending on the error.

Flux<T> onErrorResume(Function<? super Throwable,? extends Publisher<? extends T>> fallback)
Subscribe to a returned fallback publisher when any error occurs, using a function to choose the fallback depending on the error.

Flux<T> onErrorResume(Predicate<? super Throwable> predicate, Function<? super Throwable,? extends Publisher<? extends T>> fallback)
Subscribe to a fallback publisher when an error matching a given predicate occurs.

<E extends Throwable>

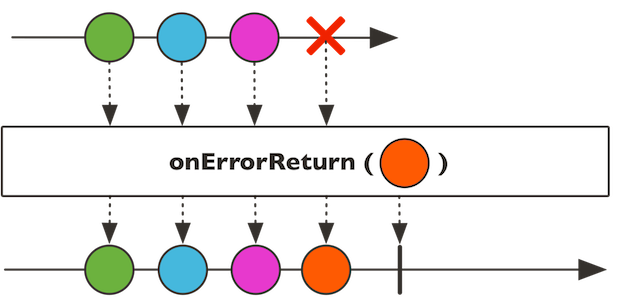

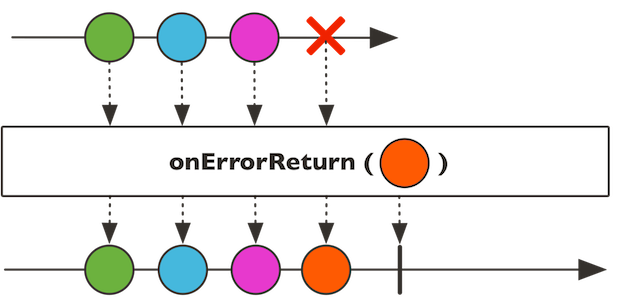

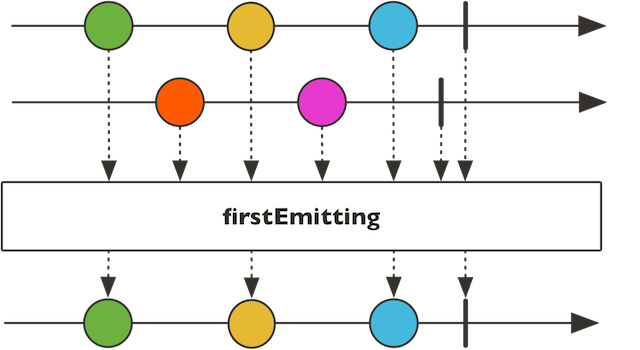

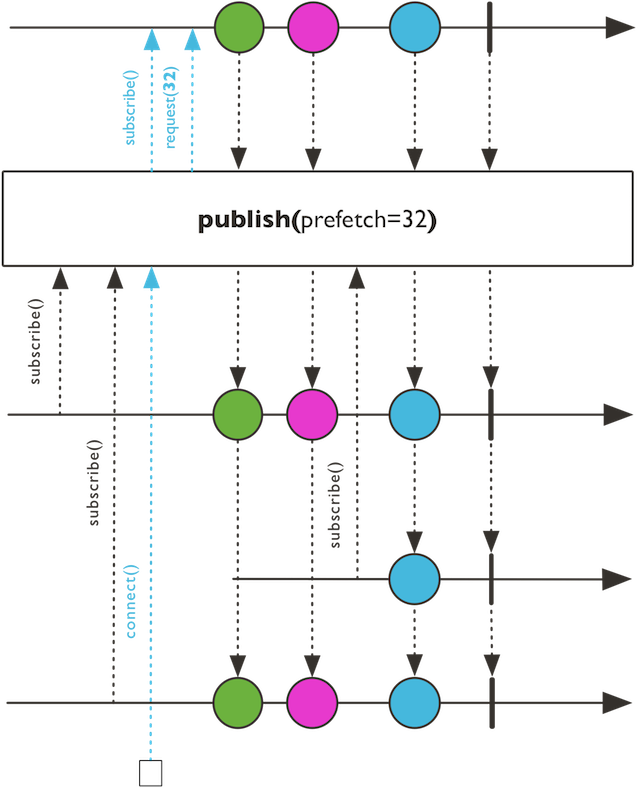

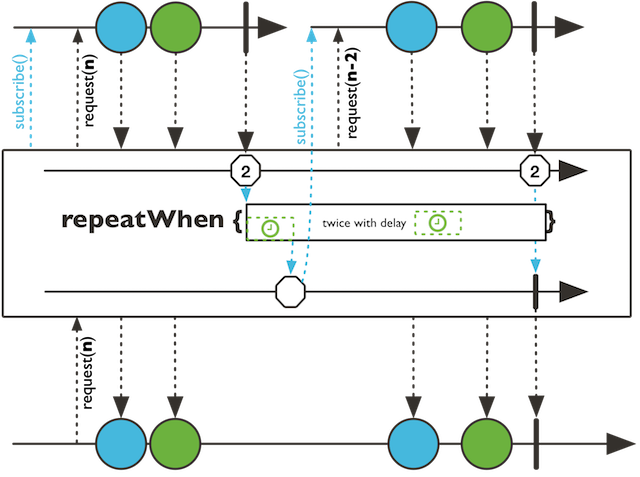

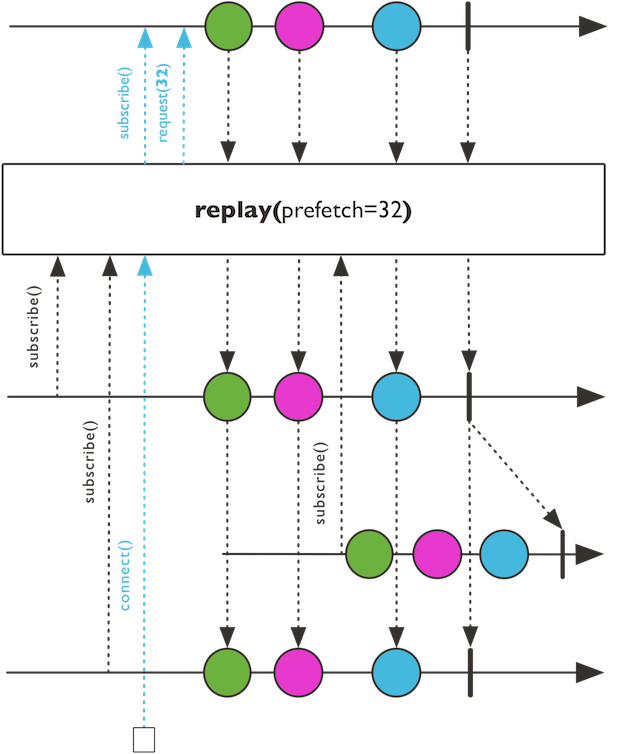

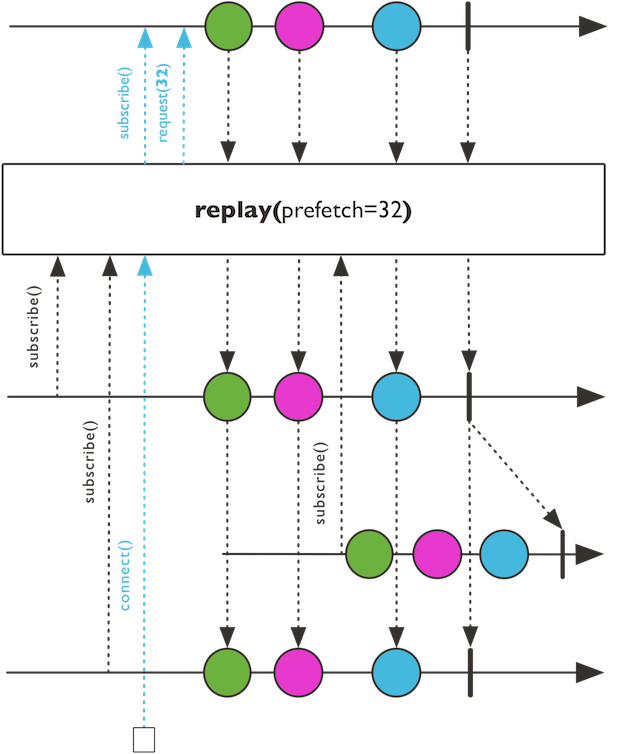

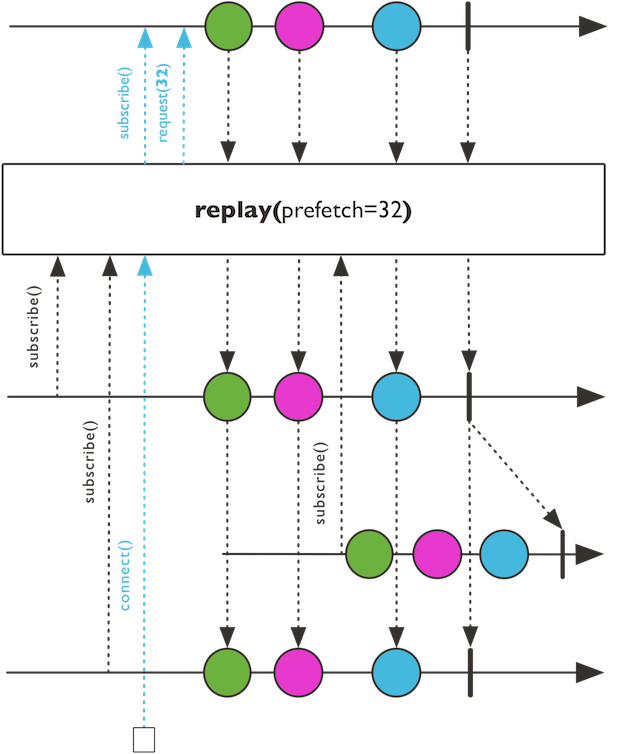

Flux<T>onErrorReturn(Class<E> type, T fallbackValue)Simply emit a captured fallback value when an error of the specified type is observed on thisFlux.Flux<T>onErrorReturn(Predicate<? super Throwable> predicate, T fallbackValue)Simply emit a captured fallback value when an error matching the given predicate is observed on thisFlux.Flux<T>onErrorReturn(T fallbackValue)Simply emit a captured fallback value when any error is observed on thisFlux.protected static <T> Flux<T>onLastAssembly(Flux<T> source)Flux<T>onTerminateDetach()Detaches both the childSubscriberand theSubscriptionon termination or cancellation.Flux<T>or(Publisher<? extends T> other)ParallelFlux<T>parallel()Prepare thisFluxby dividing data on a number of 'rails' matching the number of CPU cores, in a round-robin fashion.ParallelFlux<T>parallel(int parallelism)Prepare thisFluxby dividing data on a number of 'rails' matching the providedparallelismparameter, in a round-robin fashion.ParallelFlux<T>parallel(int parallelism, int prefetch)ConnectableFlux<T>publish()Prepare aConnectableFluxwhich shares thisFluxsequence and dispatches values to subscribers in a backpressure-aware manner.<R> Flux<R>publish(Function<? super Flux<T>,? extends Publisher<? extends R>> transform)Shares a sequence for the duration of a function that may transform it and consume it as many times as necessary without causing multiple subscriptions to the upstream.<R> Flux<R>publish(Function<? super Flux<T>,? extends Publisher<? 
extends R>> transform, int prefetch)Shares a sequence for the duration of a function that may transform it and consume it as many times as necessary without causing multiple subscriptions to the upstream.ConnectableFlux<T>publish(int prefetch)Prepare aConnectableFluxwhich shares thisFluxsequence and dispatches values to subscribers in a backpressure-aware manner.Mono<T>publishNext()Flux<T>publishOn(Scheduler scheduler)Flux<T>publishOn(Scheduler scheduler, boolean delayError, int prefetch)Run onNext, onComplete and onError on a suppliedSchedulerScheduler.Worker.Flux<T>publishOn(Scheduler scheduler, int prefetch)Run onNext, onComplete and onError on a suppliedSchedulerScheduler.Worker.static <T> Flux<T>push(Consumer<? super FluxSink<T>> emitter)static <T> Flux<T>push(Consumer<? super FluxSink<T>> emitter, FluxSink.OverflowStrategy backpressure)static Flux<Integer>range(int start, int count)<A> Mono<A>reduce(A initial, BiFunction<A,? super T,A> accumulator)Reduce the values from thisFluxsequence into an single object matching the type of a seed value.Mono<T>reduce(BiFunction<T,T,T> aggregator)Reduce the values from thisFluxsequence into an single object of the same type than the emitted items.<A> Mono<A>reduceWith(Supplier<A> initial, BiFunction<A,? super T,A> accumulator)Reduce the values from thisFluxsequence into an single object matching the type of a lazily supplied seed value.Flux<T>repeat()Repeatedly and indefinitely subscribe to the source upon completion of the previous subscription.Flux<T>repeat(BooleanSupplier predicate)Repeatedly subscribe to the source if the predicate returns true after completion of the previous subscription.Flux<T>repeat(long numRepeat)Repeatedly subscribe to the source numRepeat times.Flux<T>repeat(long numRepeat, BooleanSupplier predicate)Repeatedly subscribe to the source if the predicate returns true after completion of the previous subscription.Flux<T>repeatWhen(Function<Flux<Long>,? 
extends Publisher<?>> repeatFactory)Repeatedly subscribe to thisFluxwhen a companion sequence emits elements in response to the flux completion signal.ConnectableFlux<T>replay()Turn thisFluxinto a hot source and cache last emitted signals for furtherSubscriber.ConnectableFlux<T>replay(Duration ttl)Turn thisFluxinto a connectable hot source and cache last emitted signals for furtherSubscriber.ConnectableFlux<T>replay(Duration ttl, Scheduler timer)Turn thisFluxinto a connectable hot source and cache last emitted signals for furtherSubscriber.ConnectableFlux<T>replay(int history)Turn thisFluxinto a connectable hot source and cache last emitted signals for furtherSubscriber.ConnectableFlux<T>replay(int history, Duration ttl)Turn thisFluxinto a connectable hot source and cache last emitted signals for furtherSubscriber.ConnectableFlux<T>replay(int history, Duration ttl, Scheduler timer)Turn thisFluxinto a connectable hot source and cache last emitted signals for furtherSubscriber.Flux<T>retry()Re-subscribes to thisFluxsequence if it signals any error, indefinitely.Flux<T>retry(long numRetries)Re-subscribes to thisFluxsequence if it signals any error, for a fixed number of times.Flux<T>retry(long numRetries, Predicate<? super Throwable> retryMatcher)Flux<T>retry(Predicate<? super Throwable> retryMatcher)Flux<T>retryWhen(Function<Flux<Throwable>,? extends Publisher<?>> whenFactory)Flux<T>sample(Duration timespan)<U> Flux<T>sample(Publisher<U> sampler)Flux<T>sampleFirst(Duration timespan)Repeatedly take a value from thisFluxthen skip the values that follow within a given duration.<U> Flux<T>sampleFirst(Function<? super T,? extends Publisher<U>> samplerFactory)<U> Flux<T>sampleTimeout(Function<? super T,? extends Publisher<U>> throttlerFactory)<U> Flux<T>sampleTimeout(Function<? super T,? extends Publisher<U>> throttlerFactory, int maxConcurrency)<A> Flux<A>scan(A initial, BiFunction<A,? 
super T,A> accumulator)Reduce thisFluxvalues with an accumulatorBiFunctionand also emit the intermediate results of this function.Flux<T>scan(BiFunction<T,T,T> accumulator)Reduce thisFluxvalues with an accumulatorBiFunctionand also emit the intermediate results of this function.<A> Flux<A>scanWith(Supplier<A> initial, BiFunction<A,? super T,A> accumulator)Reduce thisFluxvalues with the help of an accumulatorBiFunctionand also emits the intermediate results.Flux<T>share()Mono<T>single()Expect and emit a single item from thisFluxsource or signalNoSuchElementExceptionfor an empty source, orIndexOutOfBoundsExceptionfor a source with more than one element.Mono<T>single(T defaultValue)Expect and emit a single item from thisFluxsource and emit a default value for an empty source, but signal anIndexOutOfBoundsExceptionfor a source with more than one element.Mono<T>singleOrEmpty()Expect and emit a single item from thisFluxsource, and accept an empty source but signal anIndexOutOfBoundsExceptionfor a source with more than one element.Flux<T>skip(Duration timespan)Skip elements from thisFluxemitted within the specified initial duration.Flux<T>skip(Duration timespan, Scheduler timer)Flux<T>skip(long skipped)Skip the specified number of elements from the beginning of thisFluxthen emit the remaining elements.Flux<T>skipLast(int n)Skip a specified number of elements at the end of thisFluxsequence.Flux<T>skipUntil(Predicate<? super T> untilPredicate)Flux<T>skipUntilOther(Publisher<?> other)Flux<T>skipWhile(Predicate<? super T> skipPredicate)Flux<T>sort()Sort elements from thisFluxby collecting and sorting them in the background then emitting the sorted sequence once this sequence completes.Flux<T>sort(Comparator<? super T> sortFunction)Sort elements from thisFluxusing aComparatorfunction, by collecting and sorting elements in the background then emitting the sorted sequence once this sequence completes.Flux<T>startWith(Iterable<? extends T> iterable)Flux<T>startWith(Publisher<? 
extends T> publisher)Flux<T>startWith(T... values)Prepend the given values before thisFluxsequence.Disposablesubscribe()Subscribe to thisFluxand request unbounded demand.Disposablesubscribe(Consumer<? super T> consumer)Disposablesubscribe(Consumer<? super T> consumer, Consumer<? super Throwable> errorConsumer)Disposablesubscribe(Consumer<? super T> consumer, Consumer<? super Throwable> errorConsumer, Runnable completeConsumer)Disposablesubscribe(Consumer<? super T> consumer, Consumer<? super Throwable> errorConsumer, Runnable completeConsumer, Consumer<? super Subscription> subscriptionConsumer)abstract voidsubscribe(CoreSubscriber<? super T> actual)An internalPublisher.subscribe(Subscriber)that will bypassHooks.onLastOperator(Function)pointcut.voidsubscribe(Subscriber<? super T> actual)Flux<T>subscribeOn(Scheduler scheduler)Run subscribe, onSubscribe and request on a specifiedScheduler'sScheduler.Worker.Flux<T>subscribeOn(Scheduler scheduler, boolean requestOnSeparateThread)Run subscribe and onSubscribe on a specifiedScheduler'sScheduler.Worker.Flux<T>subscriberContext(Context mergeContext)Flux<T>subscriberContext(Function<Context,Context> doOnContext)<E extends Subscriber<? super T>>

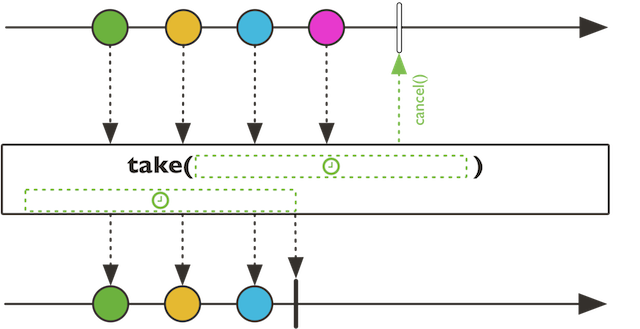

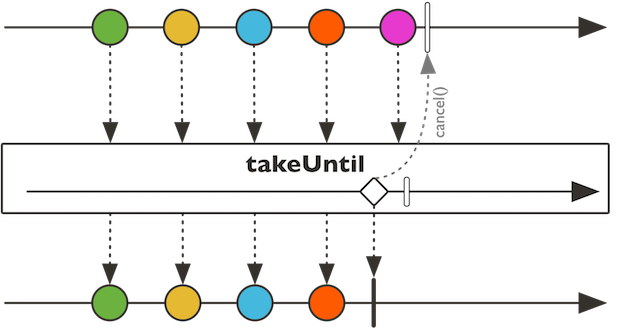

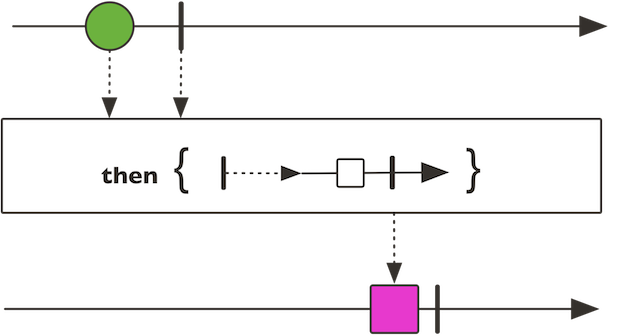

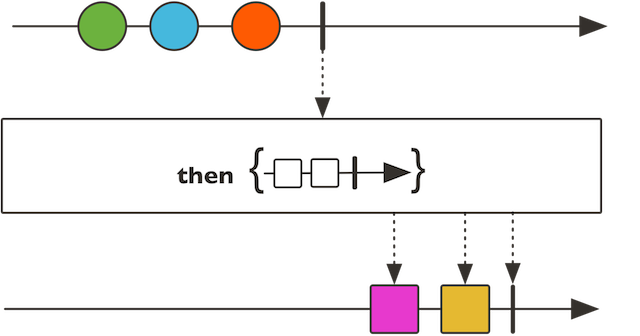

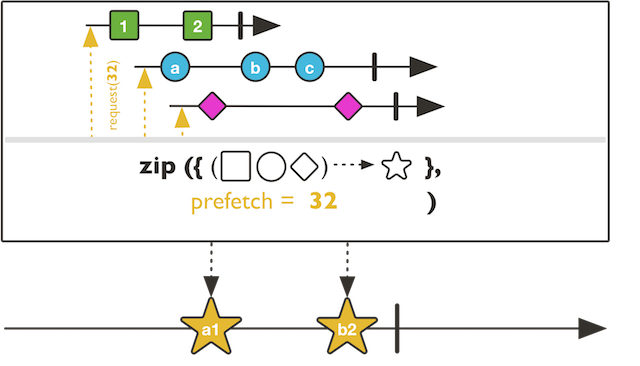

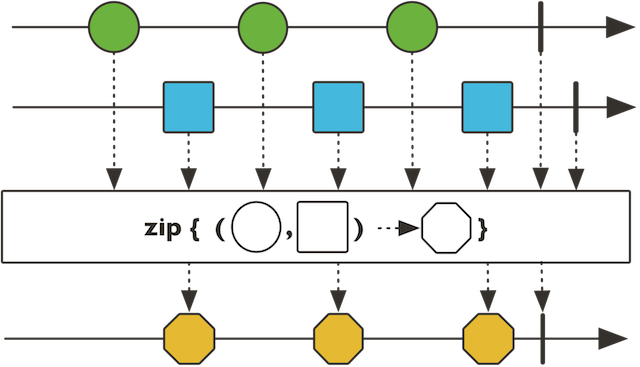

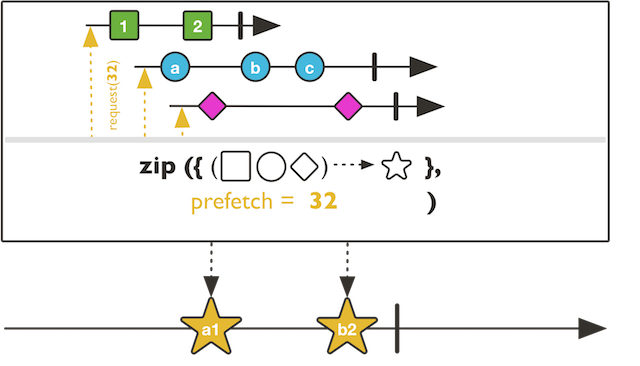

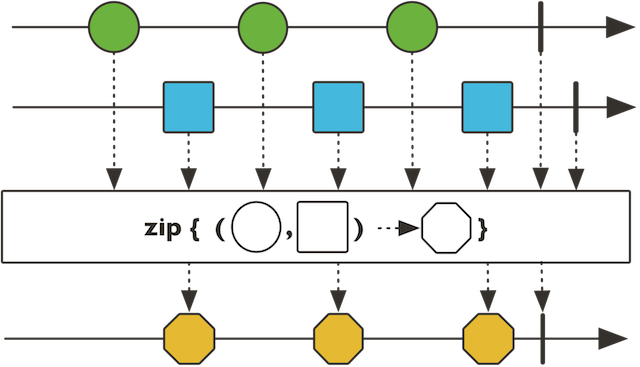

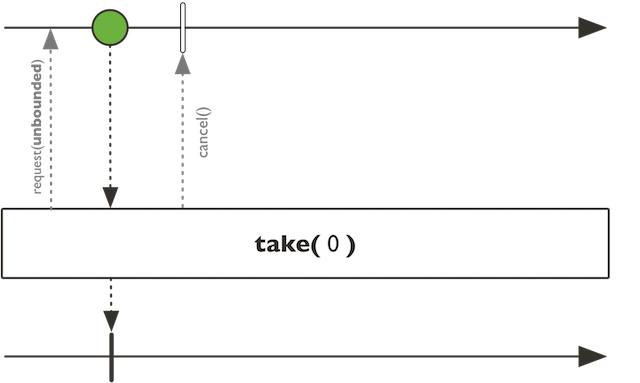

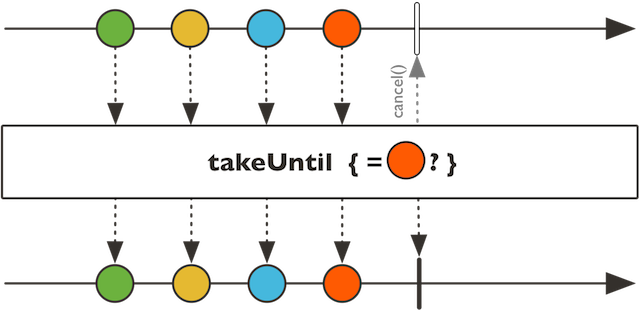

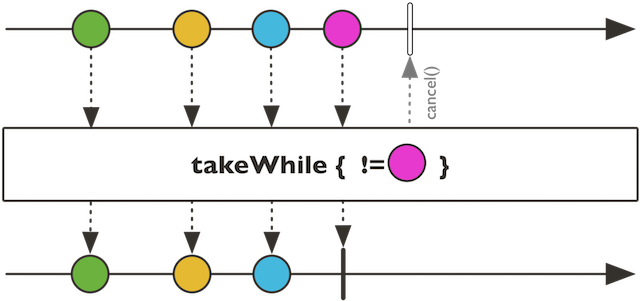

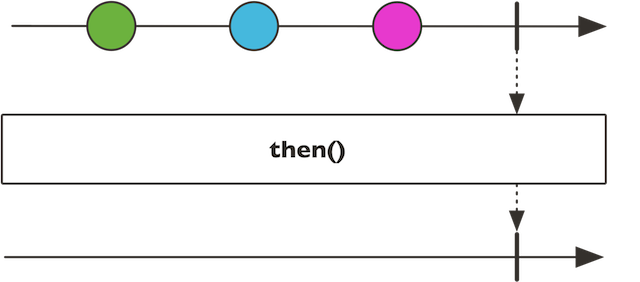

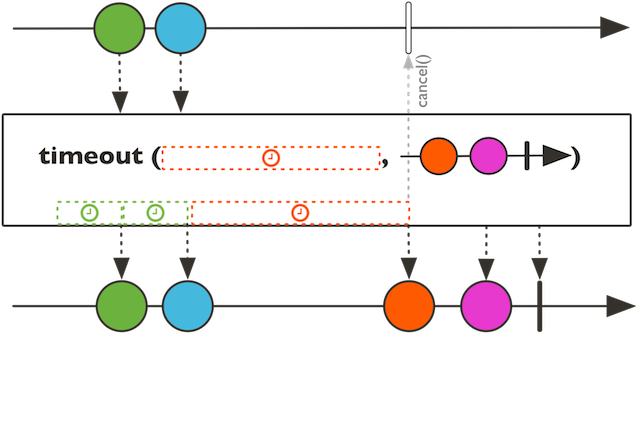

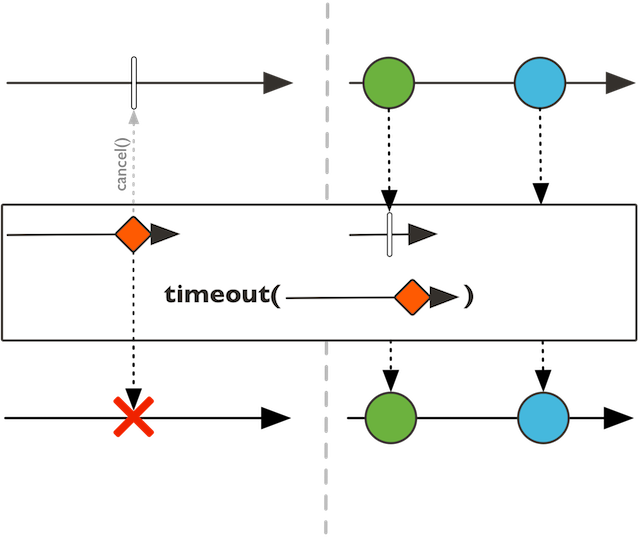

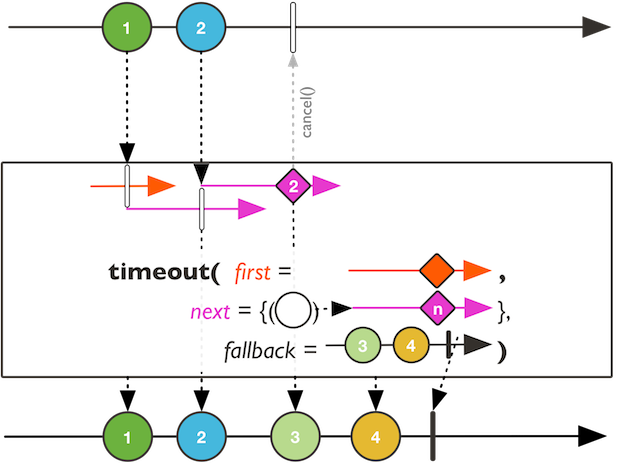

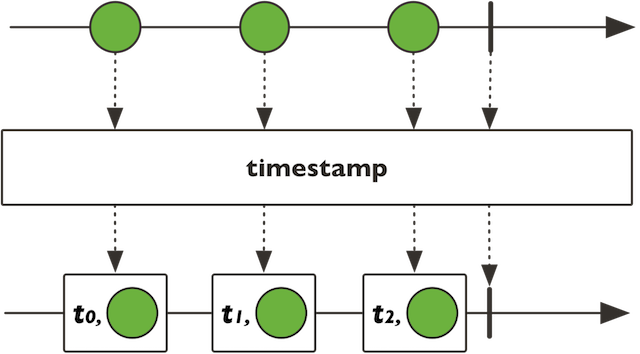

EsubscribeWith(E subscriber)Flux<T>switchIfEmpty(Publisher<? extends T> alternate)Switch to an alternativePublisherif this sequence is completed without any data.<V> Flux<V>switchMap(Function<? super T,Publisher<? extends V>> fn)<V> Flux<V>switchMap(Function<? super T,Publisher<? extends V>> fn, int prefetch)static <T> Flux<T>switchOnNext(Publisher<? extends Publisher<? extends T>> mergedPublishers)static <T> Flux<T>switchOnNext(Publisher<? extends Publisher<? extends T>> mergedPublishers, int prefetch)Flux<T>tag(String key, String value)Tag this flux with a key/value pair.Flux<T>take(Duration timespan)Flux<T>take(Duration timespan, Scheduler timer)Flux<T>take(long n)Take only the first N values from thisFlux, if available.Flux<T>takeLast(int n)Emit the last N values thisFluxemitted before its completion.Flux<T>takeUntil(Predicate<? super T> predicate)Flux<T>takeUntilOther(Publisher<?> other)Flux<T>takeWhile(Predicate<? super T> continuePredicate)Relay values from thisFluxwhile a predicate returns TRUE for the values (checked before each value is delivered).Mono<Void>then()Return aMono<Void>that completes when thisFluxcompletes.<V> Mono<V>then(Mono<V> other)Mono<Void>thenEmpty(Publisher<Void> other)Return aMono<Void>that waits for thisFluxto complete then for a suppliedPublisher<Void>to also complete.<V> Flux<V>thenMany(Publisher<V> other)Flux<T>timeout(Duration timeout)Propagate aTimeoutExceptionas soon as no item is emitted within the givenDurationfrom the previous emission (or the subscription for the first item).Flux<T>timeout(Duration timeout, Publisher<? extends T> fallback)Flux<T>timeout(Duration timeout, Publisher<? 
extends T> fallback, Scheduler timer)Flux<T>timeout(Duration timeout, Scheduler timer)Propagate aTimeoutExceptionas soon as no item is emitted within the givenDurationfrom the previous emission (or the subscription for the first item), as measured by the specifiedScheduler.<U> Flux<T>timeout(Publisher<U> firstTimeout)Signal aTimeoutExceptionin case the first item from thisFluxhas not been emitted before the givenPublisheremits.<U,V> Flux<T>timeout(Publisher<U> firstTimeout, Function<? super T,? extends Publisher<V>> nextTimeoutFactory)Signal aTimeoutExceptionin case the first item from thisFluxhas not been emitted before thefirstTimeoutPublisheremits, and whenever each subsequent elements is not emitted before aPublishergenerated from the latest element signals.<U,V> Flux<T>timeout(Publisher<U> firstTimeout, Function<? super T,? extends Publisher<V>> nextTimeoutFactory, Publisher<? extends T> fallback)Flux<Tuple2<Long,T>>timestamp()Flux<Tuple2<Long,T>>timestamp(Scheduler scheduler)Iterable<T>toIterable()Iterable<T>toIterable(int batchSize)Iterable<T>toIterable(int batchSize, Supplier<Queue<T>> queueProvider)Stream<T>toStream()Stream<T>toStream(int batchSize)StringtoString()<V> Flux<V>transform(Function<? super Flux<T>,? extends Publisher<V>> transformer)static <T,D> Flux<T>using(Callable<? extends D> resourceSupplier, Function<? super D,? extends Publisher<? extends T>> sourceSupplier, Consumer<? super D> resourceCleanup)Uses a resource, generated by a supplier for each individual Subscriber, while streaming the values from a Publisher derived from the same resource and makes sure the resource is released if the sequence terminates or the Subscriber cancels.static <T,D> Flux<T>using(Callable<? extends D> resourceSupplier, Function<? super D,? extends Publisher<? extends T>> sourceSupplier, Consumer<? 
super D> resourceCleanup, boolean eager)Uses a resource, generated by a supplier for each individual Subscriber, while streaming the values from a Publisher derived from the same resource and makes sure the resource is released if the sequence terminates or the Subscriber cancels.Flux<Flux<T>>window(Duration timespan)Flux<Flux<T>>window(Duration timespan, Duration timeshift)Flux<Flux<T>>window(Duration timespan, Duration timeshift, Scheduler timer)Flux<Flux<T>>window(Duration timespan, Scheduler timer)Flux<Flux<T>>window(int maxSize)Flux<Flux<T>>window(int maxSize, int skip)Flux<Flux<T>>window(Publisher<?> boundary)Flux<Flux<T>>windowTimeout(int maxSize, Duration timespan)Flux<Flux<T>>windowTimeout(int maxSize, Duration timespan, Scheduler timer)Flux<Flux<T>>windowUntil(Predicate<T> boundaryTrigger)Flux<Flux<T>>windowUntil(Predicate<T> boundaryTrigger, boolean cutBefore)Flux<Flux<T>>windowUntil(Predicate<T> boundaryTrigger, boolean cutBefore, int prefetch)<U,V> Flux<Flux<T>>windowWhen(Publisher<U> bucketOpening, Function<? super U,? extends Publisher<V>> closeSelector)Flux<Flux<T>>windowWhile(Predicate<T> inclusionPredicate)Flux<Flux<T>>windowWhile(Predicate<T> inclusionPredicate, int prefetch)<U,R> Flux<R>withLatestFrom(Publisher<? extends U> other, BiFunction<? super T,? super U,? extends R> resultSelector)Combine the most recently emitted values from both thisFluxand anotherPublisherthrough aBiFunctionand emits the result.static <I,O> Flux<O>zip(Function<? super Object[],? extends O> combinator, int prefetch, Publisher<? extends I>... sources)Zip multiple sources together, that is to say wait for all the sources to emit one element and combine these elements once into an output value (constructed by the provided combinator).static <I,O> Flux<O>zip(Function<? super Object[],? extends O> combinator, Publisher<? extends I>... 
sources)Zip multiple sources together, that is to say wait for all the sources to emit one element and combine these elements once into an output value (constructed by the provided combinator).static <O> Flux<O>zip(Iterable<? extends Publisher<?>> sources, Function<? super Object[],? extends O> combinator)Zip multiple sources together, that is to say wait for all the sources to emit one element and combine these elements once into an output value (constructed by the provided combinator).static <O> Flux<O>zip(Iterable<? extends Publisher<?>> sources, int prefetch, Function<? super Object[],? extends O> combinator)Zip multiple sources together, that is to say wait for all the sources to emit one element and combine these elements once into an output value (constructed by the provided combinator).static <TUPLE extends Tuple2,V>

Flux<V> zip(Publisher<? extends Publisher<?>> sources, Function<? super TUPLE,? extends V> combinator)
Zip multiple sources together, that is to say wait for all the sources to emit one element and combine these elements once into an output value (constructed by the provided combinator).

static <T1,T2> Flux<Tuple2<T1,T2>> zip(Publisher<? extends T1> source1, Publisher<? extends T2> source2)
Zip two sources together, that is to say wait for all the sources to emit one element and combine these elements once into a Tuple2.

static <T1,T2,O> Flux<O> zip(Publisher<? extends T1> source1, Publisher<? extends T2> source2, BiFunction<? super T1,? super T2,? extends O> combinator)
Zip two sources together, that is to say wait for all the sources to emit one element and combine these elements once into an output value (constructed by the provided combinator).

static <T1,T2,T3> Flux<Tuple3<T1,T2,T3>> zip(Publisher<? extends T1> source1, Publisher<? extends T2> source2, Publisher<? extends T3> source3)
Zip three sources together, that is to say wait for all the sources to emit one element and combine these elements once into a Tuple3.

static <T1,T2,T3,T4>

Flux<Tuple4<T1,T2,T3,T4>> zip(Publisher<? extends T1> source1, Publisher<? extends T2> source2, Publisher<? extends T3> source3, Publisher<? extends T4> source4)
Zip four sources together, that is to say wait for all the sources to emit one element and combine these elements once into a Tuple4.

static <T1,T2,T3,T4,T5>

Flux<Tuple5<T1,T2,T3,T4,T5>> zip(Publisher<? extends T1> source1, Publisher<? extends T2> source2, Publisher<? extends T3> source3, Publisher<? extends T4> source4, Publisher<? extends T5> source5)
Zip five sources together, that is to say wait for all the sources to emit one element and combine these elements once into a Tuple5.

static <T1,T2,T3,T4,T5,T6>

Flux<Tuple6<T1,T2,T3,T4,T5,T6>> zip(Publisher<? extends T1> source1, Publisher<? extends T2> source2, Publisher<? extends T3> source3, Publisher<? extends T4> source4, Publisher<? extends T5> source5, Publisher<? extends T6> source6)
Zip six sources together, that is to say wait for all the sources to emit one element and combine these elements once into a Tuple6.

<T2> Flux<Tuple2<T,T2>> zipWith(Publisher<? extends T2> source2)

<T2,V> Flux<V> zipWith(Publisher<? extends T2> source2, BiFunction<? super T,? super T2,? extends V> combinator)
Zip this Flux with another Publisher source, that is to say wait for both to emit one element and combine these elements using a combinator BiFunction. The operator will continue doing so until any of the sources completes.

<T2> Flux<Tuple2<T,T2>> zipWith(Publisher<? extends T2> source2, int prefetch)

<T2,V> Flux<V> zipWith(Publisher<? extends T2> source2, int prefetch, BiFunction<? super T,? super T2,? extends V> combinator)
Zip this Flux with another Publisher source, that is to say wait for both to emit one element and combine these elements using a combinator BiFunction. The operator will continue doing so until any of the sources completes.

<T2> Flux<Tuple2<T,T2>> zipWithIterable(Iterable<? extends T2> iterable)

<T2,V> Flux<V> zipWithIterable(Iterable<? extends T2> iterable, BiFunction<? super T,? super T2,? extends V> zipper)
Zip elements from this Flux with the content of an Iterable, that is to say combine one element from each, pairwise, using the given zipper BiFunction.

Method Detail

-

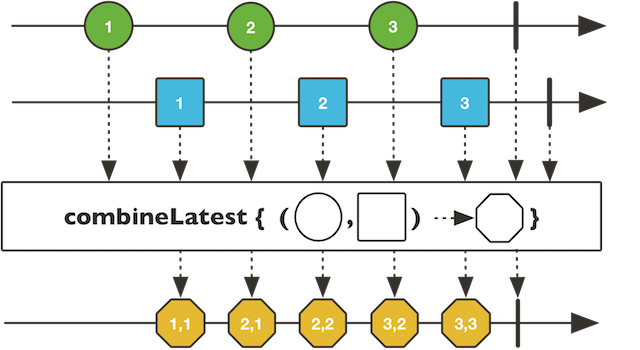

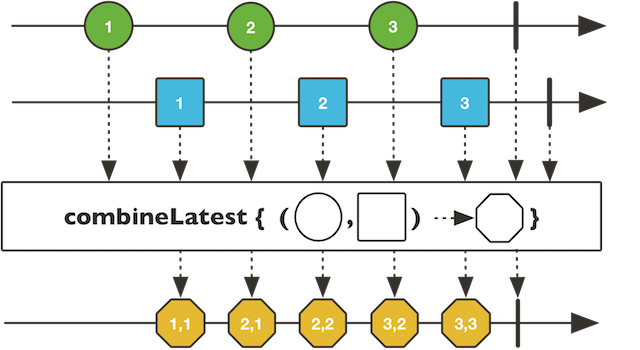

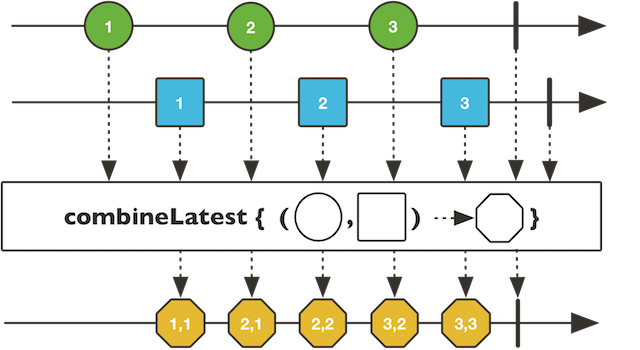

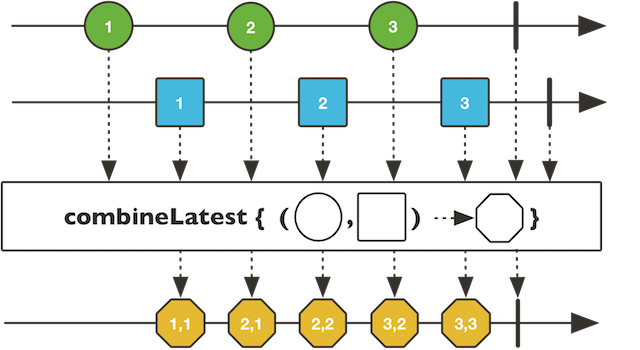

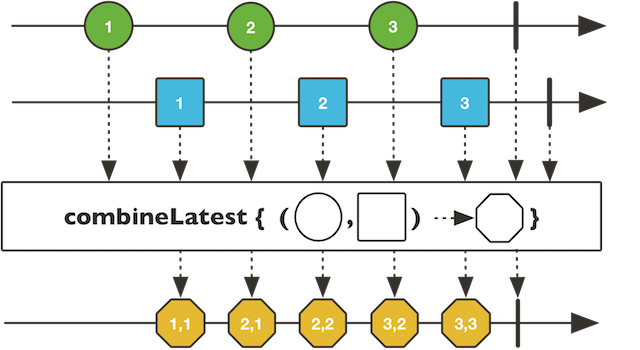

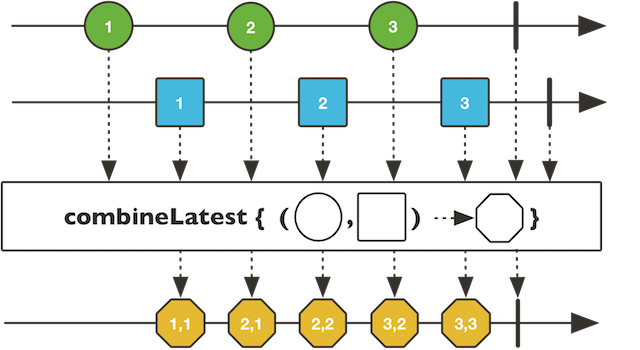

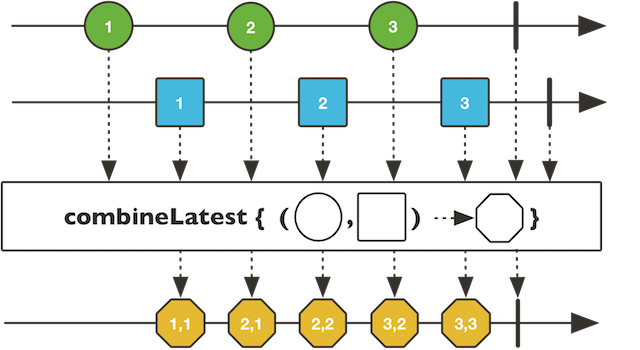

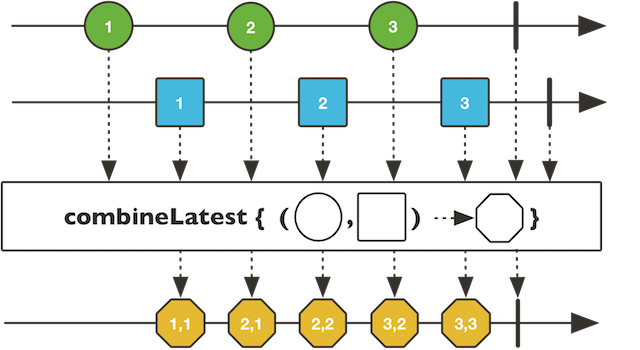

combineLatest

@SafeVarargs public static <T,V> Flux<V> combineLatest(Function<Object[],V> combinator, Publisher<? extends T>... sources)

Build a Flux whose data are generated by the combination of the most recently published value from each of the Publisher sources.

- Type Parameters:

T - type of the value from sources

V - The produced output after transformation by the given combinator

- Parameters:

sources - The Publisher sources to combine values from

combinator - The aggregate function that will receive the latest value from each upstream and return the value to signal downstream

- Returns:

- a Flux based on the produced combinations
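The Object[]-based combinator can be sketched as follows. This is a minimal illustration, not a canonical usage: the class `CombineLatestArrayExample` and the method `sums` are hypothetical names, and the casts assume that both sources emit Integer values.

```java
import java.util.List;
import reactor.core.publisher.Flux;

// Hypothetical example class; only Flux and its operators come from Reactor.
final class CombineLatestArrayExample {

    // Sums the latest value of each source every time a combination is produced.
    static List<Integer> sums() {
        Flux<Integer> base = Flux.just(1);
        Flux<Integer> offsets = Flux.just(10, 20);

        // The combinator receives the latest values as an Object[],
        // in the same order as the sources, so each slot has to be cast.
        Flux<Integer> combined = Flux.combineLatest(
                values -> (Integer) values[0] + (Integer) values[1],
                base, offsets);

        // Blocking is acceptable here only because both sources are finite and synchronous.
        return combined.collectList().block();
    }

    public static void main(String[] args) {
        System.out.println(sums());
    }
}
```

Because the array is untyped, the overloads that take a BiFunction are usually easier to read when only two sources are involved.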

-

combineLatest

@SafeVarargs public static <T,V> Flux<V> combineLatest(Function<Object[],V> combinator, int prefetch, Publisher<? extends T>... sources)

Build a Flux whose data are generated by the combination of the most recently published value from each of the Publisher sources.

- Type Parameters:

T - type of the value from sources

V - The produced output after transformation by the given combinator

- Parameters:

sources - The Publisher sources to combine values from

prefetch - The demand sent to each combined source Publisher

combinator - The aggregate function that will receive the latest value from each upstream and return the value to signal downstream

- Returns:

- a Flux based on the produced combinations

-

combineLatest

public static <T1,T2,V> Flux<V> combineLatest(Publisher<? extends T1> source1, Publisher<? extends T2> source2, BiFunction<? super T1,? super T2,? extends V> combinator)

Build a Flux whose data are generated by the combination of the most recently published value from each of two Publisher sources.

- Type Parameters:

T1 - type of the value from source1

T2 - type of the value from source2

V - The produced output after transformation by the given combinator

- Parameters:

source1 - The first Publisher source to combine values from

source2 - The second Publisher source to combine values from

combinator - The aggregate function that will receive the latest value from each upstream and return the value to signal downstream

- Returns:

- a Flux based on the produced combinations
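A small sketch of the two-source variant, under the assumption that both sources are synchronous: in that case the first source is expected to be fully consumed before the second is subscribed, so only its last value takes part in the combinations. The class `CombineLatestPairExample` and the method `combine` are hypothetical names.

```java
import java.util.List;
import reactor.core.publisher.Flux;

// Hypothetical example class; only Flux and its operators come from Reactor.
final class CombineLatestPairExample {

    // Pairs the most recent letter with the most recent number.
    static List<String> combine() {
        Flux<String> letters = Flux.just("a", "b");
        Flux<Integer> numbers = Flux.just(1, 2, 3);

        // The BiFunction is typed, so no cast is needed (unlike the Object[] variants).
        Flux<String> combined =
                Flux.combineLatest(letters, numbers, (letter, number) -> letter + number);

        return combined.collectList().block();
    }

    public static void main(String[] args) {
        System.out.println(combine());
    }
}
```

With asynchronous sources the interleaving, and therefore the set of combinations, depends on timing.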

-

combineLatest

public static <T1,T2,T3,V> Flux<V> combineLatest(Publisher<? extends T1> source1, Publisher<? extends T2> source2, Publisher<? extends T3> source3, Function<Object[],V> combinator)

Build a Flux whose data are generated by the combination of the most recently published value from each of three Publisher sources.

- Type Parameters:

T1 - type of the value from source1

T2 - type of the value from source2

T3 - type of the value from source3

V - The produced output after transformation by the given combinator

- Parameters:

source1 - The first Publisher source to combine values from

source2 - The second Publisher source to combine values from

source3 - The third Publisher source to combine values from

combinator - The aggregate function that will receive the latest value from each upstream and return the value to signal downstream

- Returns:

- a Flux based on the produced combinations

-

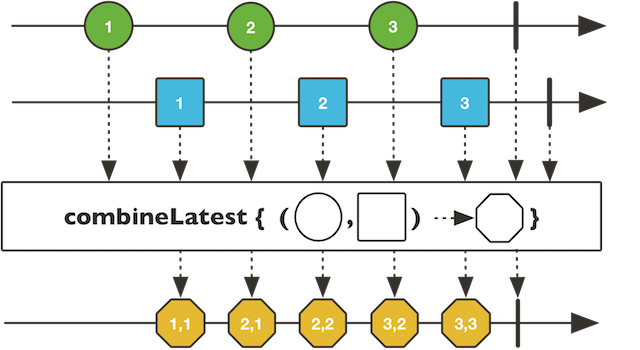

combineLatest

public static <T1,T2,T3,T4,V> Flux<V> combineLatest(Publisher<? extends T1> source1, Publisher<? extends T2> source2, Publisher<? extends T3> source3, Publisher<? extends T4> source4, Function<Object[],V> combinator)

Build a Flux whose data are generated by the combination of the most recently published value from each of four Publisher sources.

- Type Parameters:

T1 - type of the value from source1

T2 - type of the value from source2

T3 - type of the value from source3

T4 - type of the value from source4

V - The produced output after transformation by the given combinator

- Parameters:

source1 - The first Publisher source to combine values from

source2 - The second Publisher source to combine values from

source3 - The third Publisher source to combine values from

source4 - The fourth Publisher source to combine values from

combinator - The aggregate function that will receive the latest value from each upstream and return the value to signal downstream

- Returns:

- a Flux based on the produced combinations

-

combineLatest

public static <T1,T2,T3,T4,T5,V> Flux<V> combineLatest(Publisher<? extends T1> source1, Publisher<? extends T2> source2, Publisher<? extends T3> source3, Publisher<? extends T4> source4, Publisher<? extends T5> source5, Function<Object[],V> combinator)

Build a Flux whose data are generated by the combination of the most recently published value from each of five Publisher sources.

- Type Parameters:

T1 - type of the value from source1

T2 - type of the value from source2

T3 - type of the value from source3

T4 - type of the value from source4

T5 - type of the value from source5

V - The produced output after transformation by the given combinator

- Parameters:

source1 - The first Publisher source to combine values from

source2 - The second Publisher source to combine values from

source3 - The third Publisher source to combine values from

source4 - The fourth Publisher source to combine values from

source5 - The fifth Publisher source to combine values from

combinator - The aggregate function that will receive the latest value from each upstream and return the value to signal downstream

- Returns:

- a Flux based on the produced combinations

-

combineLatest

public static <T1,T2,T3,T4,T5,T6,V> Flux<V> combineLatest(Publisher<? extends T1> source1, Publisher<? extends T2> source2, Publisher<? extends T3> source3, Publisher<? extends T4> source4, Publisher<? extends T5> source5, Publisher<? extends T6> source6, Function<Object[],V> combinator)

Build a Flux whose data are generated by the combination of the most recently published value from each of six Publisher sources.

- Type Parameters:

T1 - type of the value from source1

T2 - type of the value from source2

T3 - type of the value from source3

T4 - type of the value from source4

T5 - type of the value from source5

T6 - type of the value from source6

V - The produced output after transformation by the given combinator

- Parameters:

source1 - The first Publisher source to combine values from

source2 - The second Publisher source to combine values from

source3 - The third Publisher source to combine values from

source4 - The fourth Publisher source to combine values from

source5 - The fifth Publisher source to combine values from

source6 - The sixth Publisher source to combine values from

combinator - The aggregate function that will receive the latest value from each upstream and return the value to signal downstream

- Returns:

- a Flux based on the produced combinations

-

combineLatest

public static <T,V> Flux<V> combineLatest(Iterable<? extends Publisher<? extends T>> sources, Function<Object[],V> combinator)

Build a Flux whose data are generated by the combination of the most recently published value from each of the Publisher sources provided in an Iterable.

- Type Parameters:

T - The common base type of the values from sources

V - The produced output after transformation by the given combinator

- Parameters:

sources - The list of Publisher sources to combine values from

combinator - The aggregate function that will receive the latest value from each upstream and return the value to signal downstream

- Returns:

- a Flux based on the produced combinations

-

combineLatest

public static <T,V> Flux<V> combineLatest(Iterable<? extends Publisher<? extends T>> sources, int prefetch, Function<Object[],V> combinator)

Build a Flux whose data are generated by the combination of the most recently published value from each of the Publisher sources provided in an Iterable.

- Type Parameters:

T - The common base type of the values from sources

V - The produced output after transformation by the given combinator

- Parameters:

sources - The list of Publisher sources to combine values from

prefetch - The demand sent to each combined source Publisher

combinator - The aggregate function that will receive the latest value from each upstream and return the value to signal downstream

- Returns:

- a Flux based on the produced combinations

-

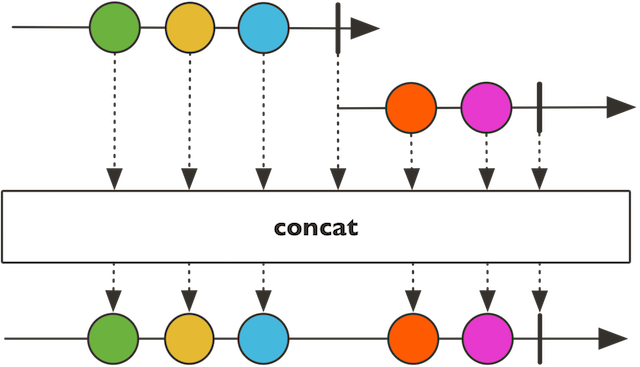

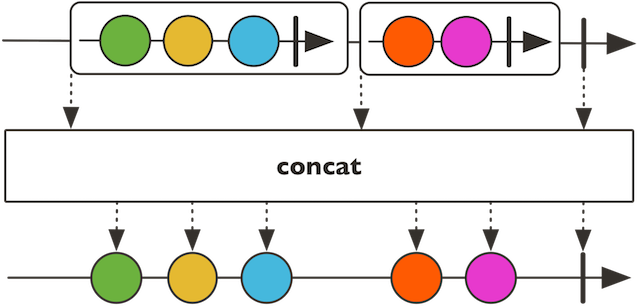

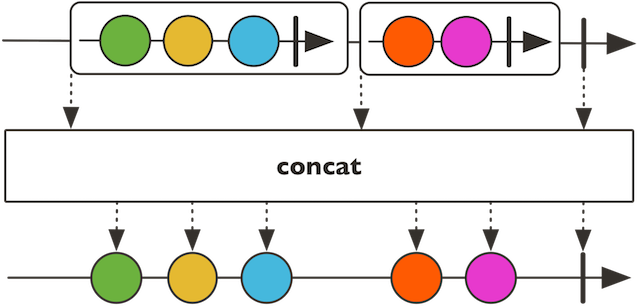

concat

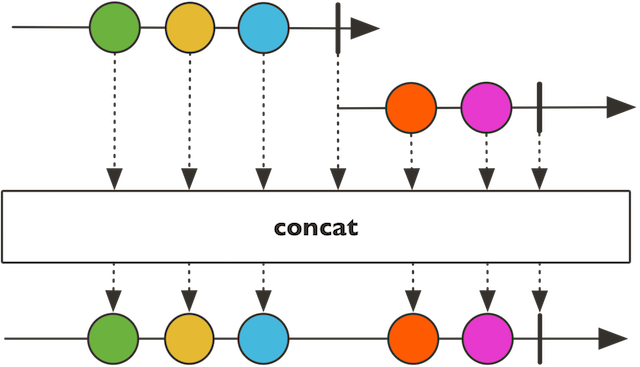

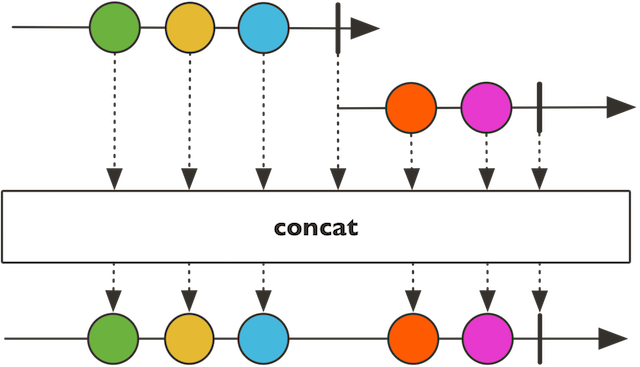

public static <T> Flux<T> concat(Iterable<? extends Publisher<? extends T>> sources)

Concatenate all sources provided in an Iterable, forwarding elements emitted by the sources downstream.

Concatenation is achieved by sequentially subscribing to the first source then waiting for it to complete before subscribing to the next, and so on until the last source completes. Any error interrupts the sequence immediately and is forwarded downstream.
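The sequential subscription described above can be sketched with a list of finite sources. The class `ConcatIterableExample` and the method `concatenated` are hypothetical names used only for this illustration.

```java
import java.util.Arrays;
import java.util.List;
import reactor.core.publisher.Flux;

// Hypothetical example class; only Flux and its operators come from Reactor.
final class ConcatIterableExample {

    // Concatenates three sources; the empty one contributes no element.
    static List<Integer> concatenated() {
        List<Flux<Integer>> sources = Arrays.asList(
                Flux.just(1, 2),
                Flux.<Integer>empty(),
                Flux.just(3));

        // Each source is subscribed to only after the previous one has completed.
        return Flux.concat(sources).collectList().block();
    }

    public static void main(String[] args) {
        System.out.println(concatenated()); // [1, 2, 3]
    }
}
```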

-

concatWithValues

@SafeVarargs public final Flux<T> concatWithValues(T... values)

Concatenates the values to the end of the Flux.

- Parameters:

values - The values to concatenate

- Returns:

- a new Flux concatenating all source sequences
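A minimal usage sketch, with the hypothetical class `ConcatWithValuesExample` and method `appended`:

```java
import java.util.List;
import reactor.core.publisher.Flux;

// Hypothetical example class; only Flux and its operators come from Reactor.
final class ConcatWithValuesExample {

    // Appends two literal values once the source sequence has completed.
    static List<Integer> appended() {
        return Flux.just(1, 2)
                   .concatWithValues(3, 4)
                   .collectList()
                   .block();
    }

    public static void main(String[] args) {
        System.out.println(appended()); // [1, 2, 3, 4]
    }
}
```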

-

concat

public static <T> Flux<T> concat(Publisher<? extends Publisher<? extends T>> sources)

Concatenate all sources emitted as an onNext signal from a parent Publisher, forwarding elements emitted by the sources downstream.

Concatenation is achieved by sequentially subscribing to the first source then waiting for it to complete before subscribing to the next, and so on until the last source completes. Any error interrupts the sequence immediately and is forwarded downstream.

-

concat

public static <T> Flux<T> concat(Publisher<? extends Publisher<? extends T>> sources, int prefetch)

Concatenate all sources emitted as an onNext signal from a parent Publisher, forwarding elements emitted by the sources downstream.

Concatenation is achieved by sequentially subscribing to the first source then waiting for it to complete before subscribing to the next, and so on until the last source completes. Any error interrupts the sequence immediately and is forwarded downstream.

-

concat

@SafeVarargs public static <T> Flux<T> concat(Publisher<? extends T>... sources)

Concatenate all sources provided as a vararg, forwarding elements emitted by the sources downstream.

Concatenation is achieved by sequentially subscribing to the first source then waiting for it to complete before subscribing to the next, and so on until the last source completes. Any error interrupts the sequence immediately and is forwarded downstream.

-

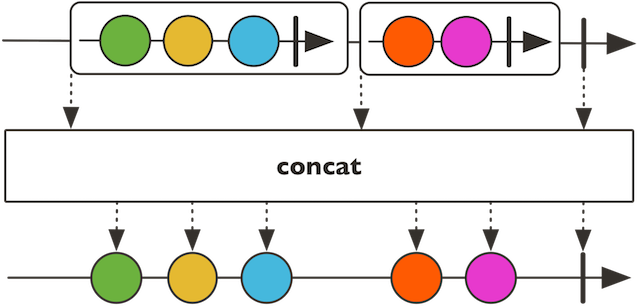

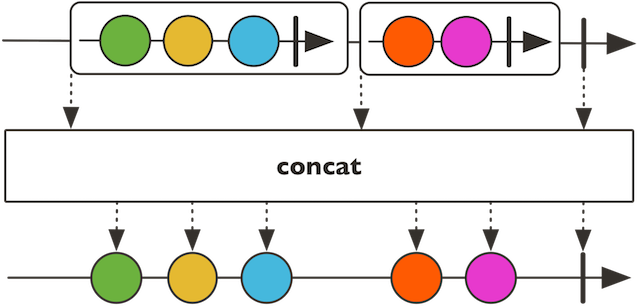

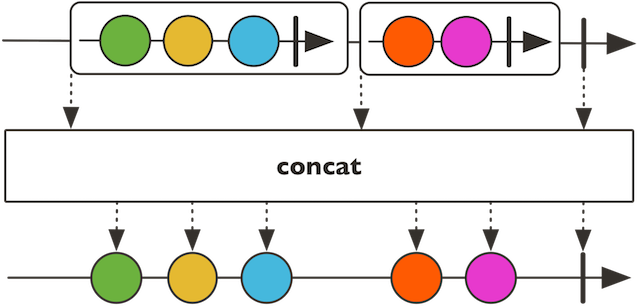

concatDelayError

public static <T> Flux<T> concatDelayError(Publisher<? extends Publisher<? extends T>> sources)

Concatenate all sources emitted as an onNext signal from a parent Publisher, forwarding elements emitted by the sources downstream.

Concatenation is achieved by sequentially subscribing to the first source then waiting for it to complete before subscribing to the next, and so on until the last source completes. Errors do not interrupt the main sequence but are propagated after the rest of the sources have had a chance to be concatenated.

-

concatDelayError

public static <T> Flux<T> concatDelayError(Publisher<? extends Publisher<? extends T>> sources, int prefetch)

Concatenate all sources emitted as an onNext signal from a parent Publisher, forwarding elements emitted by the sources downstream.

Concatenation is achieved by sequentially subscribing to the first source then waiting for it to complete before subscribing to the next, and so on until the last source completes. Errors do not interrupt the main sequence but are propagated after the rest of the sources have had a chance to be concatenated.

-

concatDelayError

public static <T> Flux<T> concatDelayError(Publisher<? extends Publisher<? extends T>> sources, boolean delayUntilEnd, int prefetch)

Concatenate all sources emitted as an onNext signal from a parent Publisher, forwarding elements emitted by the sources downstream.

Concatenation is achieved by sequentially subscribing to the first source then waiting for it to complete before subscribing to the next, and so on until the last source completes.

Errors do not interrupt the main sequence but are propagated after the current concat backlog if delayUntilEnd is false or after all sources have had a chance to be concatenated if delayUntilEnd is true.

- Type Parameters:

T - The type of values in both source and output sequences

- Parameters:

sources - The Publisher of Publisher to concatenate

delayUntilEnd - delay error until all sources have been consumed instead of after the current source

prefetch - the inner source request size

- Returns:

- a new Flux concatenating all inner sources sequences until complete or error

-

concatDelayError

@SafeVarargs public static <T> Flux<T> concatDelayError(Publisher<? extends T>... sources)

Concatenate all sources provided as a vararg, forwarding elements emitted by the sources downstream.

Concatenation is achieved by sequentially subscribing to the first source then waiting for it to complete before subscribing to the next, and so on until the last source completes. Errors do not interrupt the main sequence but are propagated after the rest of the sources have had a chance to be concatenated.
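The difference between `concat` and `concatDelayError` is easiest to see side by side. The sketch below is illustrative only: the class name, the `"<error>"` marker and the use of `onErrorResume` to make the terminal error visible in the collected list are assumptions made for this example.

```java
import java.util.List;
import reactor.core.publisher.Flux;

final class ConcatDelayErrorExample {

    // concat: the error from the second source cuts the sequence short.
    static List<String> eager() {
        return Flux.concat(
                        Flux.just("a"),
                        Flux.<String>error(new IllegalStateException("boom")),
                        Flux.just("b"))
                .onErrorResume(e -> Flux.just("<error>"))
                .collectList()
                .block();
    }

    // concatDelayError: the third source still runs, the error comes last.
    static List<String> delayed() {
        return Flux.concatDelayError(
                        Flux.just("a"),
                        Flux.<String>error(new IllegalStateException("boom")),
                        Flux.just("b"))
                .onErrorResume(e -> Flux.just("<error>"))
                .collectList()
                .block();
    }

    public static void main(String[] args) {
        System.out.println(eager());   // prints [a, <error>]
        System.out.println(delayed()); // prints [a, b, <error>]
    }
}
```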

-

create

public static <T> Flux<T> create(Consumer<? super FluxSink<T>> emitter)

Programmatically create a Flux with the capability of emitting multiple elements in a synchronous or asynchronous manner through the FluxSink API.

This Flux factory is useful if one wants to adapt some other multi-valued async API and not worry about cancellation and backpressure (which is handled by buffering all signals if the downstream can't keep up).

For example:

    Flux.<String>create(emitter -> {
        ActionListener al = e -> {
            emitter.next(textField.getText());
        };
        // without cleanup support:
        button.addActionListener(al);

        // with cleanup support:
        button.addActionListener(al);
        emitter.onDispose(() -> {
            button.removeActionListener(al);
        });
    });

-

create

public static <T> Flux<T> create(Consumer<? super FluxSink<T>> emitter, FluxSink.OverflowStrategy backpressure)

Programmatically create a Flux with the capability of emitting multiple elements in a synchronous or asynchronous manner through the FluxSink API.

This Flux factory is useful if one wants to adapt some other multi-valued async API and not worry about cancellation and backpressure (which is handled according to the supplied FluxSink.OverflowStrategy if the downstream can't keep up).

For example:

    Flux.<String>create(emitter -> {
        ActionListener al = e -> {
            emitter.next(textField.getText());
        };
        // without cleanup support:
        button.addActionListener(al);

        // with cleanup support:
        button.addActionListener(al);
        emitter.onDispose(() -> {
            button.removeActionListener(al);
        });
    }, FluxSink.OverflowStrategy.LATEST);

- Type Parameters:

T - The type of values in the sequence

- Parameters:

backpressure - the backpressure mode, see FluxSink.OverflowStrategy for the available backpressure modes

emitter - Consume the FluxSink provided per-subscriber by Reactor to generate signals.

- Returns:

- a Flux
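The listener-bridging pattern can also be sketched without a UI toolkit. In the example below, `MessageSource` is a hypothetical callback-style API invented for the illustration (it is not part of Reactor), and `BUFFER` is chosen arbitrarily as the overflow strategy. The `onDispose` callback unregisters the listener once the subscriber cancels.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;
import reactor.core.Disposable;
import reactor.core.publisher.Flux;
import reactor.core.publisher.FluxSink;

final class CreateExample {

    // Hypothetical callback-style source standing in for a real listener API.
    static final class MessageSource {
        private final List<Consumer<String>> listeners = new ArrayList<>();
        void addListener(Consumer<String> l) { listeners.add(l); }
        void removeListener(Consumer<String> l) { listeners.remove(l); }
        void publish(String message) {
            // Iterate over a copy so listeners may unregister while notified.
            for (Consumer<String> l : new ArrayList<>(listeners)) {
                l.accept(message);
            }
        }
    }

    // Bridges the callback API to a Flux, with cleanup on cancel/termination.
    static Flux<String> messages(MessageSource source) {
        return Flux.<String>create(sink -> {
            Consumer<String> listener = sink::next;
            source.addListener(listener);
            sink.onDispose(() -> source.removeListener(listener));
        }, FluxSink.OverflowStrategy.BUFFER);
    }

    static List<String> demo() {
        MessageSource source = new MessageSource();
        List<String> received = new ArrayList<>();
        Disposable subscription = messages(source).subscribe(received::add);
        source.publish("hello");
        source.publish("world");
        subscription.dispose();   // triggers onDispose, removing the listener
        source.publish("ignored");
        return received;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```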

-

push

public static <T> Flux<T> push(Consumer<? super FluxSink<T>> emitter)

Programmatically create a Flux with the capability of emitting multiple elements from a single-threaded producer through the FluxSink API.

This Flux factory is useful if one wants to adapt some other single-threaded multi-valued async API and not worry about cancellation and backpressure (which is handled by buffering all signals if the downstream can't keep up).

For example:

    Flux.<String>push(emitter -> {
        ActionListener al = e -> {
            emitter.next(textField.getText());
        };
        // without cleanup support:
        button.addActionListener(al);

        // with cleanup support:
        button.addActionListener(al);
        emitter.onDispose(() -> {
            button.removeActionListener(al);
        });
    });

-

push

public static <T> Flux<T> push(Consumer<? super FluxSink<T>> emitter, FluxSink.OverflowStrategy backpressure)

Programmatically create a Flux with the capability of emitting multiple elements from a single-threaded producer through the FluxSink API.

This Flux factory is useful if one wants to adapt some other single-threaded multi-valued async API and not worry about cancellation and backpressure (which is handled according to the supplied FluxSink.OverflowStrategy if the downstream can't keep up).

For example:

    Flux.<String>push(emitter -> {
        ActionListener al = e -> {
            emitter.next(textField.getText());
        };
        // without cleanup support:
        button.addActionListener(al);

        // with cleanup support:
        button.addActionListener(al);
        emitter.onDispose(() -> {
            button.removeActionListener(al);
        });
    }, FluxSink.OverflowStrategy.LATEST);

- Type Parameters:

T - The type of values in the sequence

- Parameters:

backpressure - the backpressure mode, see FluxSink.OverflowStrategy for the available backpressure modes

emitter - Consume the FluxSink provided per-subscriber by Reactor to generate signals.

- Returns:

- a Flux

-

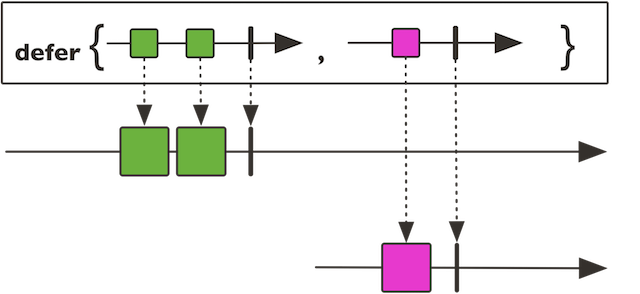

defer

public static <T> Flux<T> defer(Supplier<? extends Publisher<T>> supplier)

Lazily supply a Publisher every time a Subscription is made on the resulting Flux, so the actual source instantiation is deferred until each subscribe and the Supplier can create a subscriber-specific instance. If the supplier doesn't generate a new instance however, this operator will effectively behave like from(Publisher).
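A minimal sketch of the per-subscription behaviour: the supplier below increments a counter each time it is invoked, so two subscriptions observe two different values. The class name and the counter are assumptions made for the illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import reactor.core.publisher.Flux;

final class DeferExample {

    static List<Integer> valuesSeenByTwoSubscribers() {
        AtomicInteger counter = new AtomicInteger();
        // The supplier runs once per subscription, not once at assembly time.
        Flux<Integer> deferred = Flux.defer(() -> Flux.just(counter.incrementAndGet()));
        Integer first = deferred.blockFirst();
        Integer second = deferred.blockFirst();
        return Arrays.asList(first, second);
    }

    public static void main(String[] args) {
        System.out.println(valuesSeenByTwoSubscribers()); // prints [1, 2]
    }
}
```

Had the source been built as `Flux.just(counter.incrementAndGet())` without `defer`, the counter would be read once and both subscribers would see the same value.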

-

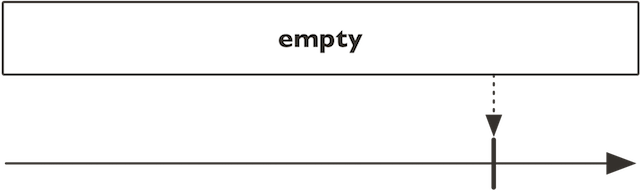

empty

public static <T> Flux<T> empty()

Create a Flux that completes without emitting any item.

- Type Parameters:

T - the reified type of the target Subscriber

- Returns:

- an empty Flux

-

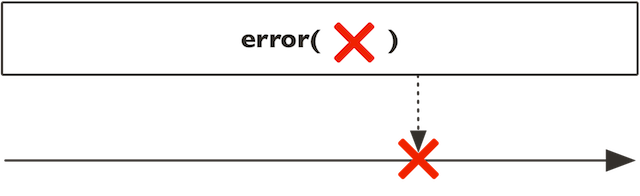

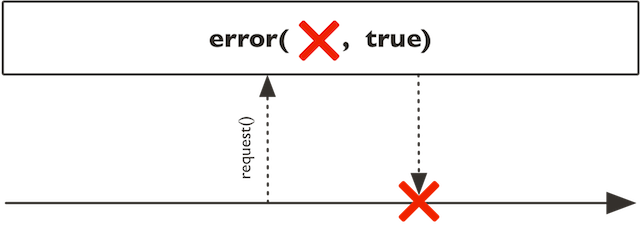

error

public static <T> Flux<T> error(Throwable error)

Create a Flux that terminates with the specified error immediately after being subscribed to.

- Type Parameters:

T - the reified type of the target Subscriber

- Parameters:

error - the error to signal to each Subscriber

- Returns:

- a new failed Flux

-

error

public static <O> Flux<O> error(Throwable throwable, boolean whenRequested)

Create a Flux that terminates with the specified error, either immediately after being subscribed to or after being first requested.

- Type Parameters:

O - the reified type of the target Subscriber

- Parameters:

throwable - the error to signal to each Subscriber

whenRequested - if true, signals onError on the first request instead of at subscribe() time.

- Returns:

- a new failed Flux

-

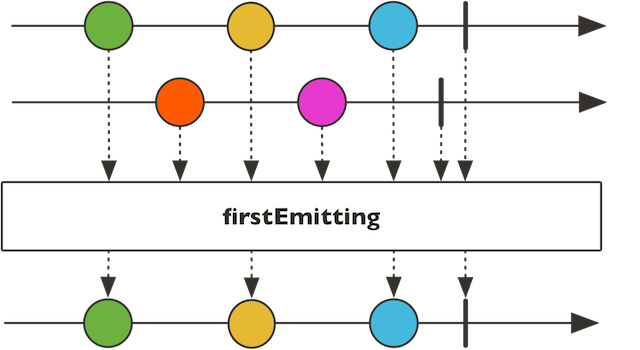

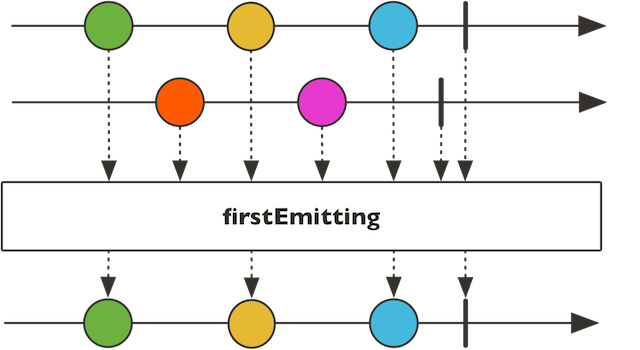

first

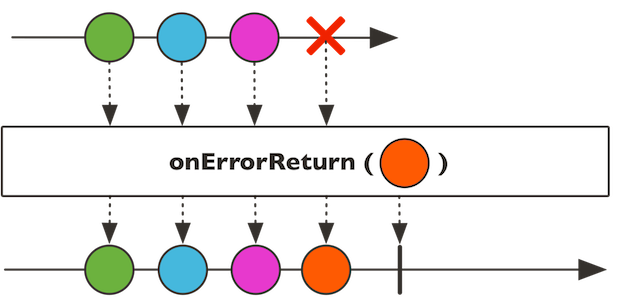

@SafeVarargs public static <I> Flux<I> first(Publisher<? extends I>... sources)

Pick the first Publisher to emit any signal (onNext/onError/onComplete) and replay all signals from that Publisher, effectively behaving like the fastest of these competing sources.

- Type Parameters:

I - The type of values in both source and output sequences

- Parameters:

sources - The competing source publishers

- Returns:

- a new Flux behaving like the fastest of its sources

-

first

public static <I> Flux<I> first(Iterable<? extends Publisher<? extends I>> sources)

Pick the first Publisher to emit any signal (onNext/onError/onComplete) and replay all signals from that Publisher, effectively behaving like the fastest of these competing sources.

- Type Parameters:

I - The type of values in both source and output sequences

- Parameters:

sources - The competing source publishers

- Returns:

- a new Flux behaving like the fastest of its sources

-

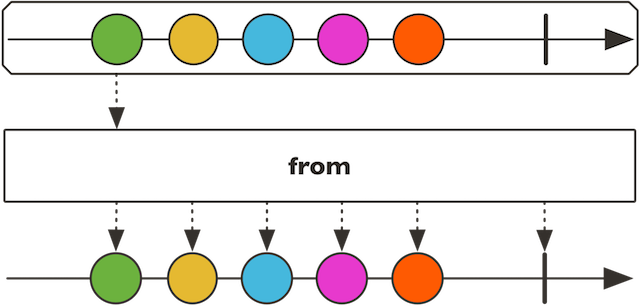

from

public static <T> Flux<T> from(Publisher<? extends T> source)

- Type Parameters:

T - The type of values in both source and output sequences

- Parameters:

source - the source to decorate

- Returns:

- a new Flux

-

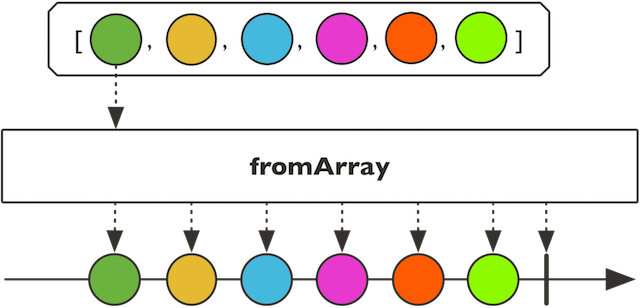

fromArray

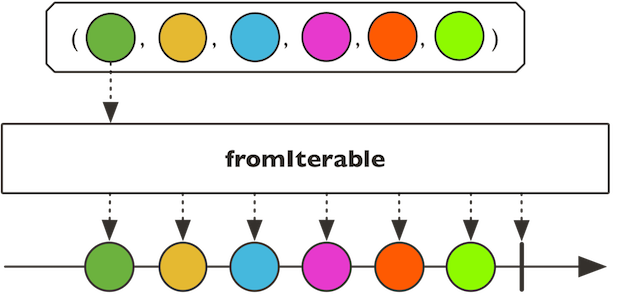

public static <T> Flux<T> fromArray(T[] array)

Create a Flux that emits the items contained in the provided array.

- Type Parameters:

T - The type of values in the source array and resulting Flux

- Parameters:

array - the array to read data from

- Returns:

- a new Flux

-

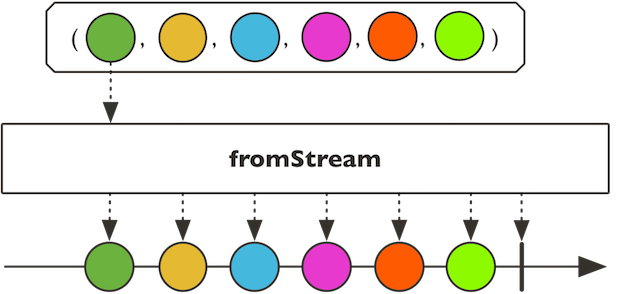

fromStream

public static <T> Flux<T> fromStream(Stream<? extends T> s)

Create a Flux that emits the items contained in the provided Stream. Keep in mind that a Stream cannot be re-used, which can be problematic in case of multiple subscriptions or re-subscription (like with repeat() or retry()). The Stream is closed automatically by the operator on cancellation, error or completion.
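One possible way around the single-use limitation, sketched here under the assumption that the data can be re-created on demand, is to wrap `fromStream` in `defer` so that each subscription gets a fresh `Stream`. The class and method names are illustrative only.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Stream;
import reactor.core.publisher.Flux;

final class FromStreamExample {

    // Each subscription triggers the supplier, which builds a new Stream,
    // so the resulting Flux can safely be subscribed to more than once.
    static List<List<String>> collectTwice() {
        Flux<String> resubscribable =
                Flux.defer(() -> Flux.fromStream(Stream.of("a", "b", "c")));
        List<String> first = resubscribable.collectList().block();
        List<String> second = resubscribable.collectList().block();
        return Arrays.asList(first, second);
    }

    public static void main(String[] args) {
        System.out.println(collectTwice()); // prints [[a, b, c], [a, b, c]]
    }
}
```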

-

generate

public static <T> Flux<T> generate(Consumer<SynchronousSink<T>> generator)

Programmatically create a Flux by generating signals one-by-one via a consumer callback.

- Type Parameters:

T - the value type emitted

- Parameters:

generator - Consume the SynchronousSink provided per-subscriber by Reactor to generate a single signal on each pass.

- Returns:

- a Flux

-

generate

public static <T,S> Flux<T> generate(Callable<S> stateSupplier, BiFunction<S,SynchronousSink<T>,S> generator)

Programmatically create a Flux by generating signals one-by-one via a consumer callback and some state. The stateSupplier may return null.

- Type Parameters:

T - the value type emitted

S - the per-subscriber custom state type

- Parameters:

stateSupplier - called for each incoming Subscriber to provide the initial state for the generator bifunction

generator - Consume the SynchronousSink provided per-subscriber by Reactor as well as the current state to generate a single signal on each pass and return a (new) state.

- Returns:

- a Flux
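The state-passing contract can be sketched as follows: the generator emits at most one value per pass and returns the state for the next pass. The class name and the choice of squares as the generated sequence are assumptions made for this example.

```java
import java.util.List;
import reactor.core.publisher.Flux;

final class GenerateExample {

    // Emits the squares of 0..4; the Integer state is the next number to square.
    static List<Integer> squares() {
        return Flux.<Integer, Integer>generate(
                        () -> 0,                       // initial per-subscriber state
                        (state, sink) -> {
                            sink.next(state * state);  // one onNext per pass
                            if (state == 4) {
                                sink.complete();       // terminate after the last value
                            }
                            return state + 1;          // state for the next pass
                        })
                .collectList()
                .block();
    }

    public static void main(String[] args) {
        System.out.println(squares()); // prints [0, 1, 4, 9, 16]
    }
}
```

Because the state is created by the supplier for each subscriber, two subscribers to the same `Flux` each start from 0.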

-

generate

public static <T,S> Flux<T> generate(Callable<S> stateSupplier, BiFunction<S,SynchronousSink<T>,S> generator, Consumer<? super S> stateConsumer)

Programmatically create a Flux by generating signals one-by-one via a consumer callback and some state, with a final cleanup callback. The stateSupplier may return null but your cleanup stateConsumer will need to handle the null case.

- Type Parameters:

T - the value type emitted

S - the per-subscriber custom state type

- Parameters:

stateSupplier - called for each incoming Subscriber to provide the initial state for the generator bifunction

generator - Consume the SynchronousSink provided per-subscriber by Reactor as well as the current state to generate a single signal on each pass and return a (new) state.

stateConsumer - called after the generator has terminated or the downstream cancelled, receiving the last state to be handled (i.e., release resources or do other cleanup).

- Returns:

- a Flux

-

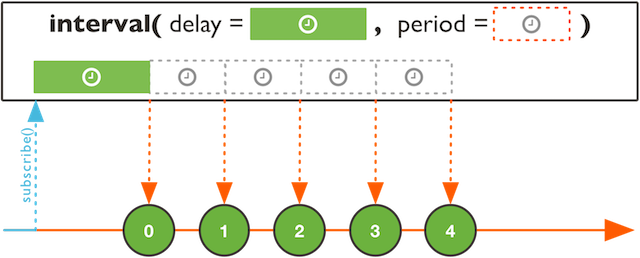

interval

public static Flux<Long> interval(Duration period)

Create a Flux that emits long values starting with 0 and incrementing at specified time intervals on the global timer. If demand is not produced in time, an onError will be signalled with an overflow IllegalStateException detailing the tick that couldn't be emitted. In normal conditions, the Flux will never complete.

Runs on the Schedulers.parallel() Scheduler.

-

interval

public static Flux<Long> interval(Duration delay, Duration period)

Create a Flux that emits long values starting with 0 and incrementing at specified time intervals, after an initial delay, on the global timer. If demand is not produced in time, an onError will be signalled with an overflow IllegalStateException detailing the tick that couldn't be emitted. In normal conditions, the Flux will never complete.

Runs on the Schedulers.parallel() Scheduler.

-

interval

public static Flux<Long> interval(Duration period, Scheduler timer)

Create a Flux that emits long values starting with 0 and incrementing at specified time intervals, on the specified Scheduler. If demand is not produced in time, an onError will be signalled with an overflow IllegalStateException detailing the tick that couldn't be emitted. In normal conditions, the Flux will never complete.

-

interval

public static Flux<Long> interval(Duration delay, Duration period, Scheduler timer)

Create a Flux that emits long values starting with 0 and incrementing at specified time intervals, after an initial delay, on the specified Scheduler. If demand is not produced in time, an onError will be signalled with an overflow IllegalStateException detailing the tick that couldn't be emitted. In normal conditions, the Flux will never complete.
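Since an interval never completes on its own, a bounded example needs an operator such as `take` to end the sequence. The sketch below uses a 10 ms period chosen arbitrarily for the illustration; the class and method names are hypothetical.

```java
import java.time.Duration;
import java.util.List;
import reactor.core.publisher.Flux;

final class IntervalExample {

    // Collects the first three ticks, then take(3) cancels the interval.
    static List<Long> firstTicks() {
        return Flux.interval(Duration.ofMillis(10))
                .take(3)
                .collectList()
                .block();
    }

    public static void main(String[] args) {
        System.out.println(firstTicks()); // prints [0, 1, 2]
    }
}
```

Blocking is used here only to keep the example self-contained; in reactive code the ticks would normally be consumed through `subscribe` or composed with further operators.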

-

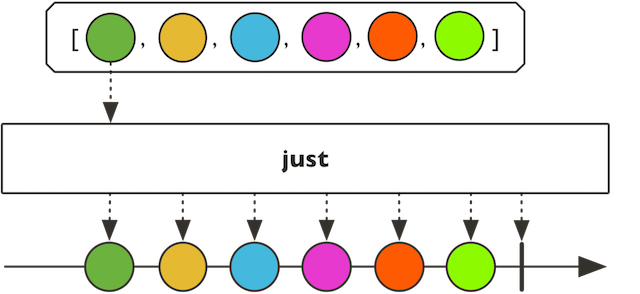

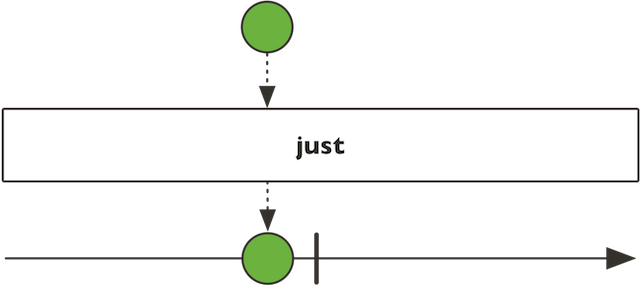

just

@SafeVarargs public static <T> Flux<T> just(T... data)

Create a Flux that emits the provided elements and then completes.

- Type Parameters:

T - the emitted data type

- Parameters:

data - the elements to emit, as a vararg

- Returns:

- a new Flux

-

just

public static <T> Flux<T> just(T data)

Create a new Flux that will only emit a single element and then complete.

- Type Parameters:

T - the emitted data type

- Parameters:

data - the single element to emit

- Returns:

- a new Flux

-

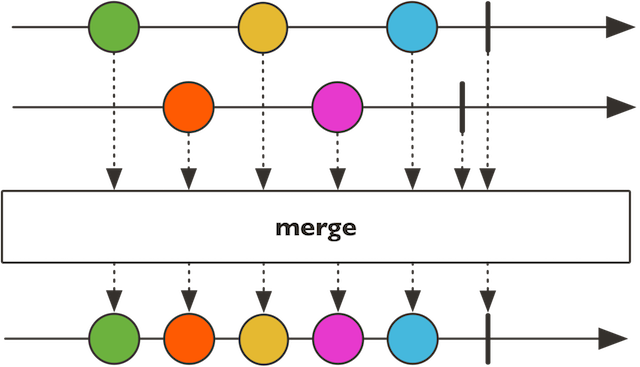

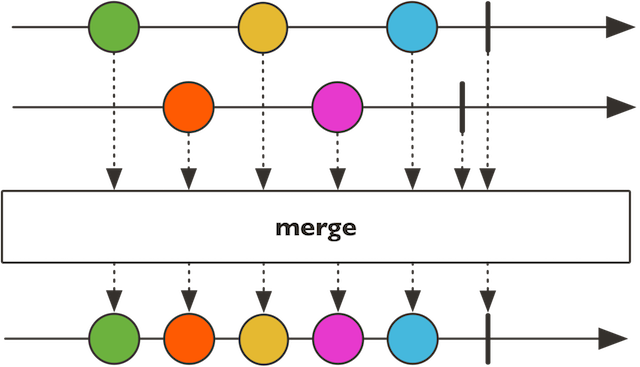

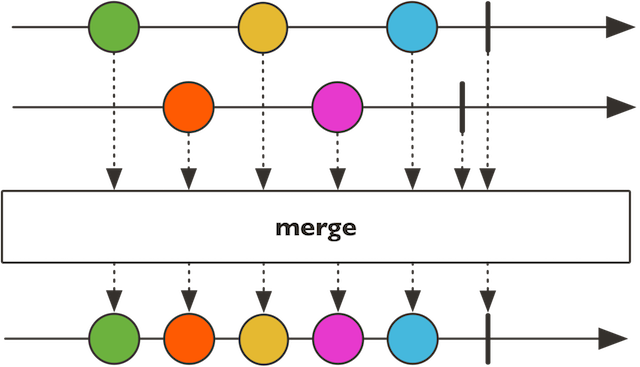

merge

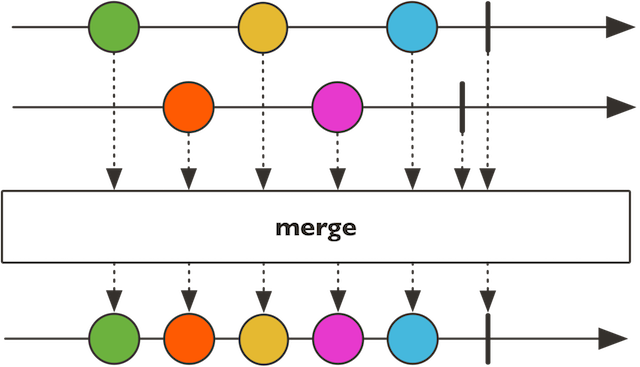

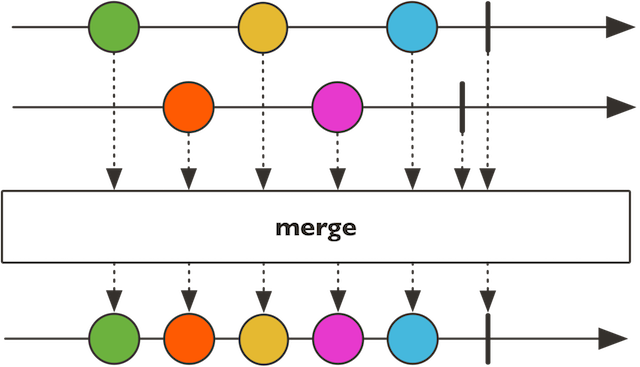

public static <T> Flux<T> merge(Publisher<? extends Publisher<? extends T>> source)

Merge data from Publisher sequences emitted by the passed Publisher into an interleaved merged sequence. Unlike concat, inner sources are subscribed to eagerly.

Note that merge is tailored to work with asynchronous sources or finite sources. When dealing with an infinite source that doesn't already publish on a dedicated Scheduler, you must isolate that source in its own Scheduler, as merge would otherwise attempt to drain it before subscribing to another source.

-

merge

public static <T> Flux<T> merge(Publisher<? extends Publisher<? extends T>> source, int concurrency)

Merge data from Publisher sequences emitted by the passed Publisher into an interleaved merged sequence. Unlike concat, inner sources are subscribed to eagerly (but at most concurrency sources are subscribed to at the same time).

Note that merge is tailored to work with asynchronous sources or finite sources. When dealing with an infinite source that doesn't already publish on a dedicated Scheduler, you must isolate that source in its own Scheduler, as merge would otherwise attempt to drain it before subscribing to another source.

-

merge

public static <T> Flux<T> merge(Publisher<? extends Publisher<? extends T>> source, int concurrency, int prefetch)

Merge data from Publisher sequences emitted by the passed Publisher into an interleaved merged sequence. Unlike concat, inner sources are subscribed to eagerly (but at most concurrency sources are subscribed to at the same time).

Note that merge is tailored to work with asynchronous sources or finite sources. When dealing with an infinite source that doesn't already publish on a dedicated Scheduler, you must isolate that source in its own Scheduler, as merge would otherwise attempt to drain it before subscribing to another source.

-

merge

public static <I> Flux<I> merge(Iterable<? extends Publisher<? extends I>> sources)

Merge data from Publisher sequences contained in an Iterable into an interleaved merged sequence. Unlike concat, inner sources are subscribed to eagerly. A new Iterator will be created for each subscriber.

Note that merge is tailored to work with asynchronous sources or finite sources. When dealing with an infinite source that doesn't already publish on a dedicated Scheduler, you must isolate that source in its own Scheduler, as merge would otherwise attempt to drain it before subscribing to another source.

-

merge

@SafeVarargs public static <I> Flux<I> merge(Publisher<? extends I>... sources)

Merge data from Publisher sequences contained in an array / vararg into an interleaved merged sequence. Unlike concat, sources are subscribed to eagerly.

Note that merge is tailored to work with asynchronous sources or finite sources. When dealing with an infinite source that doesn't already publish on a dedicated Scheduler, you must isolate that source in its own Scheduler, as merge would otherwise attempt to drain it before subscribing to another source.

-

merge

@SafeVarargs public static <I> Flux<I> merge(int prefetch, Publisher<? extends I>... sources)

Merge data from Publisher sequences contained in an array / vararg into an interleaved merged sequence. Unlike concat, sources are subscribed to eagerly.

Note that merge is tailored to work with asynchronous sources or finite sources. When dealing with an infinite source that doesn't already publish on a dedicated Scheduler, you must isolate that source in its own Scheduler, as merge would otherwise attempt to drain it before subscribing to another source.
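To illustrate eager subscription with asynchronous sources, the sketch below merges a slow and a fast source, both made asynchronous with `delayElements`. The delays (100 ms and 10 ms), the element names and the class name are arbitrary choices for this example. Because both sources are subscribed to up front, the fast element typically arrives before the slow ones, although the exact interleaving depends on timing.

```java
import java.time.Duration;
import java.util.List;
import reactor.core.publisher.Flux;

final class MergeExample {

    static List<String> merged() {
        // delayElements moves emission onto a timer, making each source asynchronous.
        Flux<String> slow = Flux.just("a1", "a2").delayElements(Duration.ofMillis(100));
        Flux<String> fast = Flux.just("b1").delayElements(Duration.ofMillis(10));
        // Both sources are subscribed to immediately; elements are forwarded as they arrive.
        return Flux.merge(slow, fast).collectList().block();
    }

    public static void main(String[] args) {
        System.out.println(merged());
    }
}
```

With `Flux.concat(slow, fast)` instead, `fast` would not be subscribed to until `slow` had completed, so `b1` would always come last.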

-

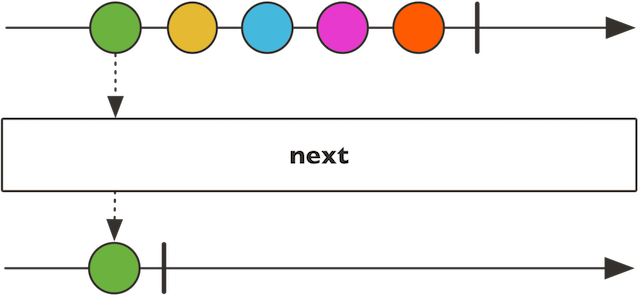

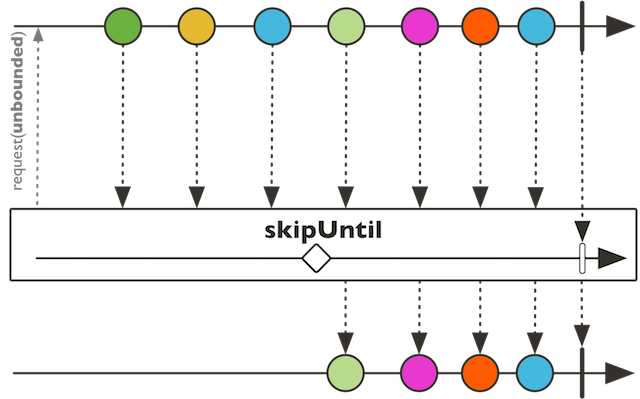

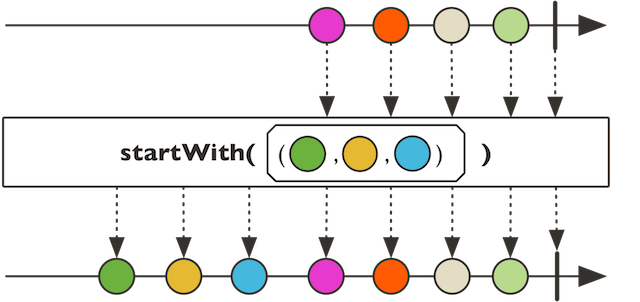

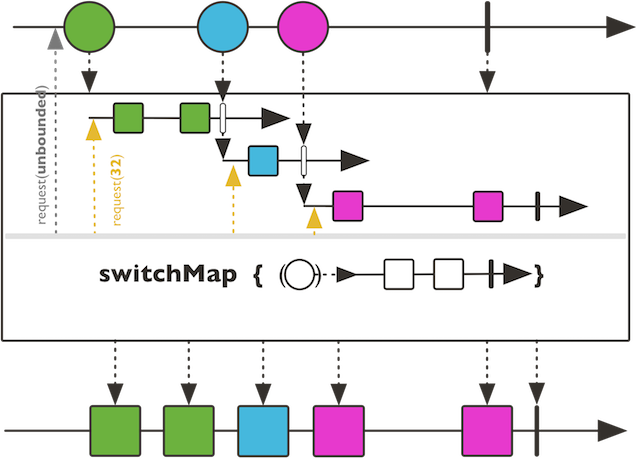

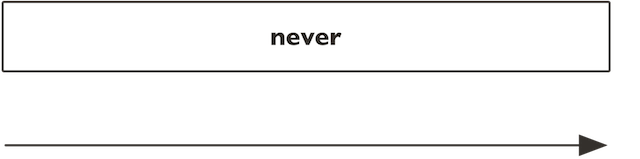

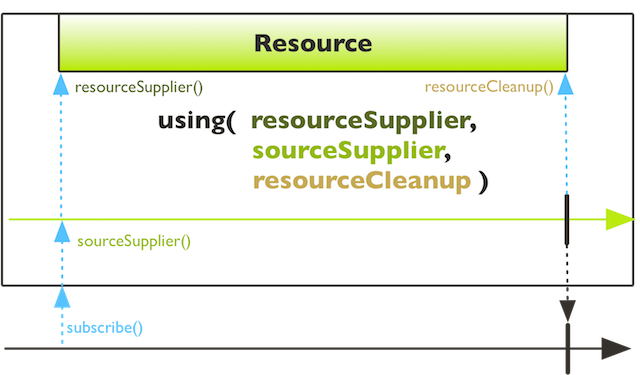

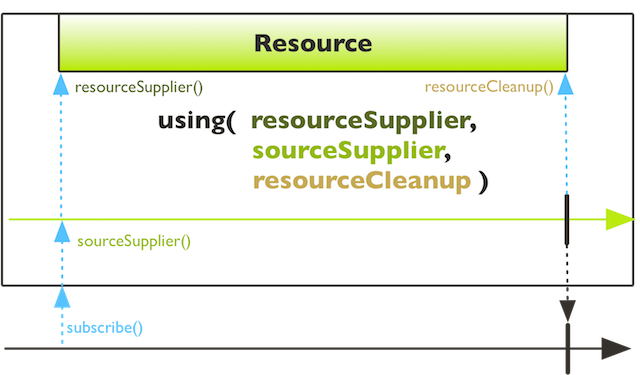

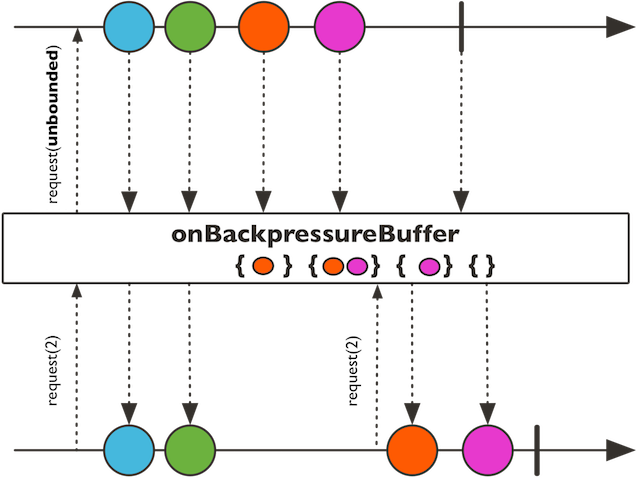

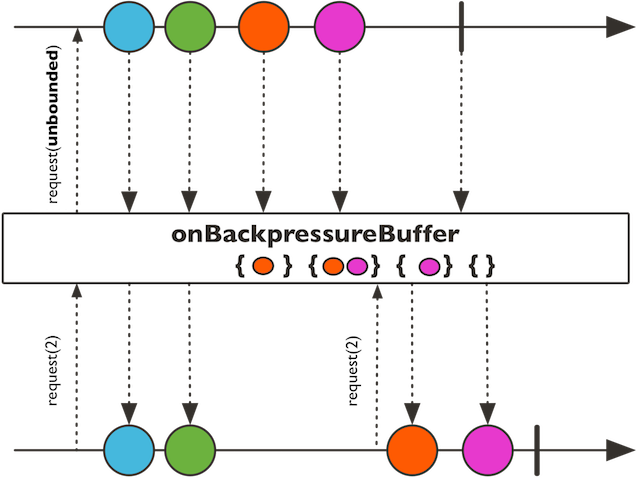

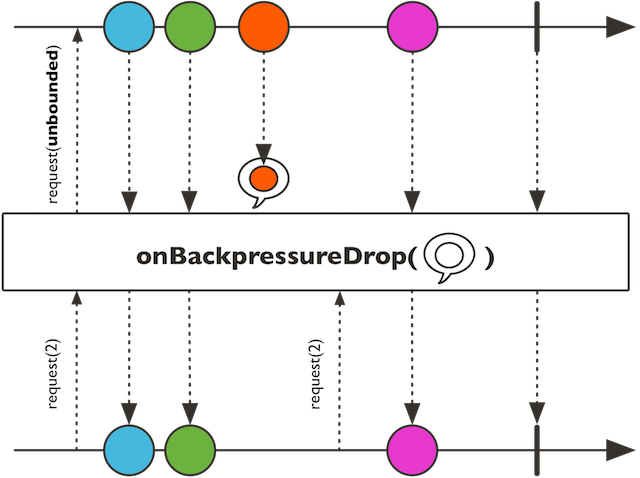

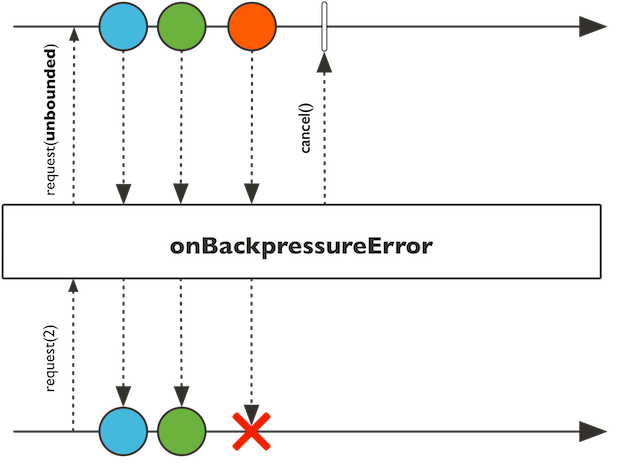

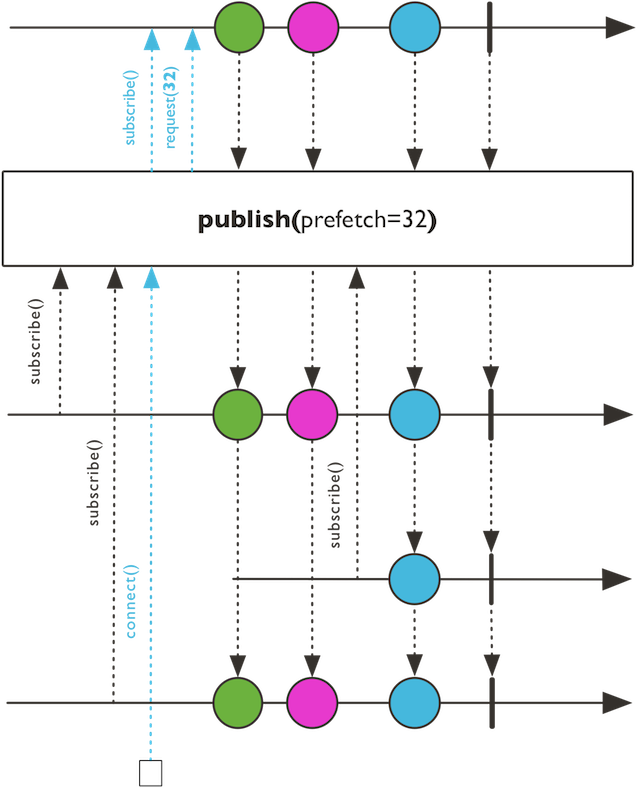

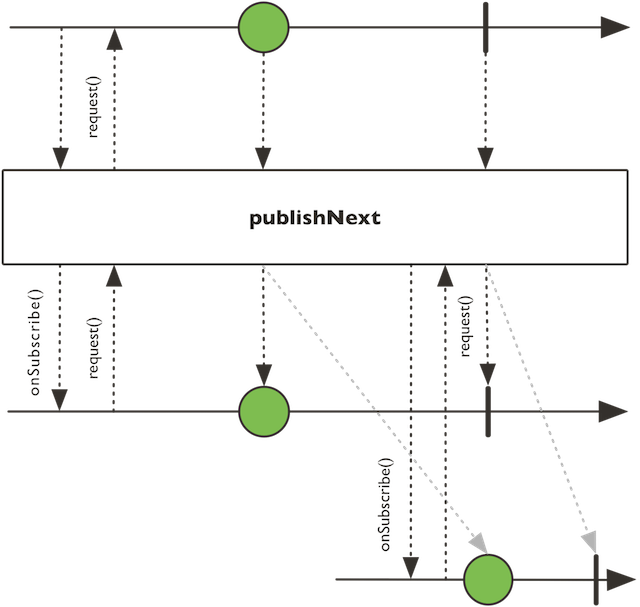

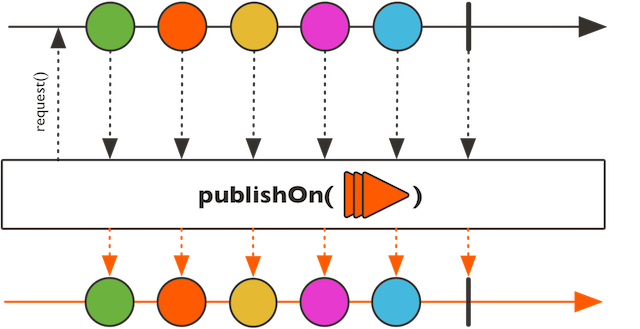

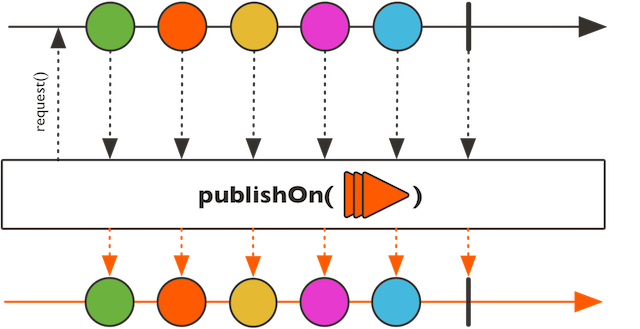

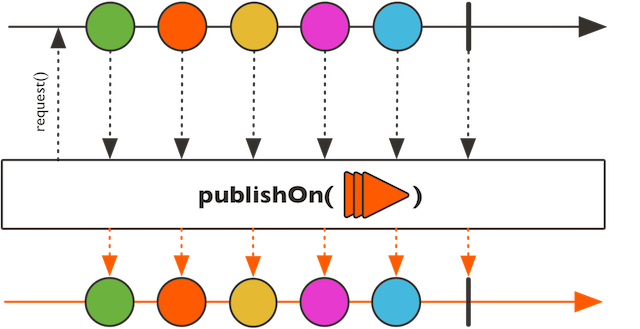

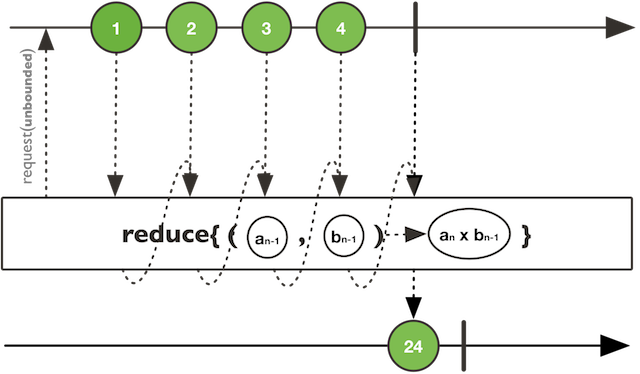

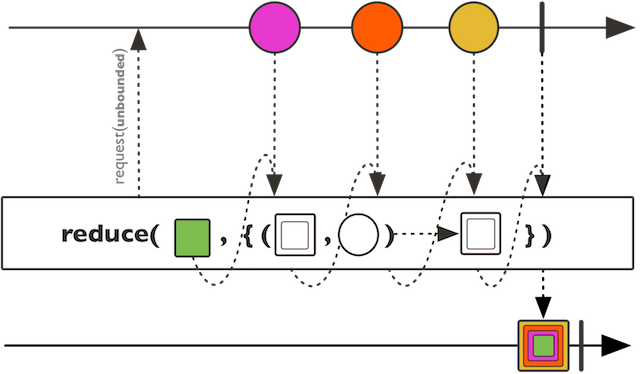

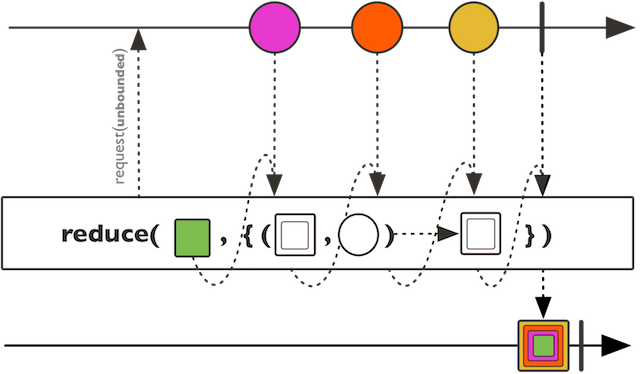

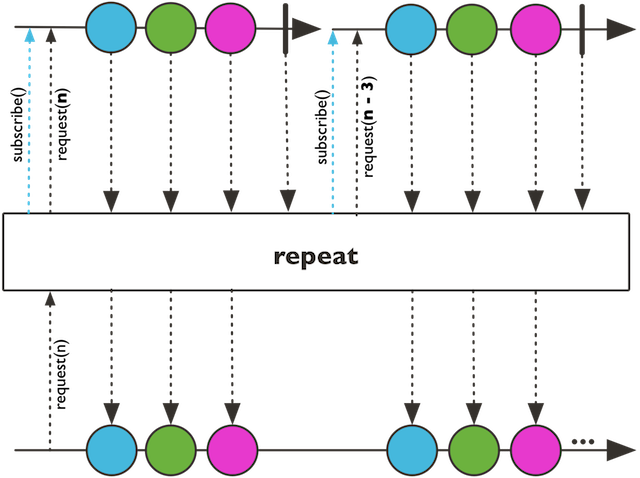

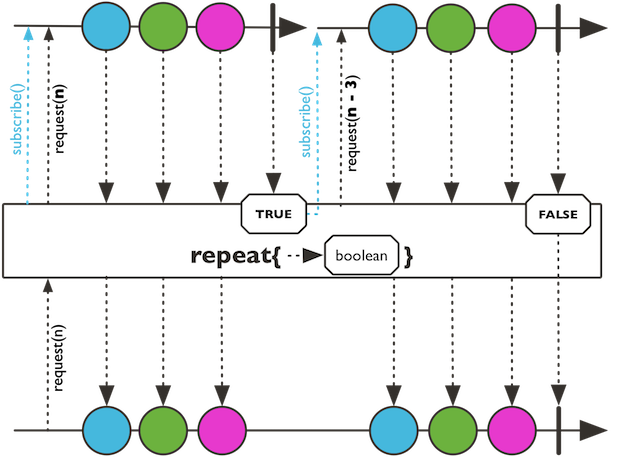

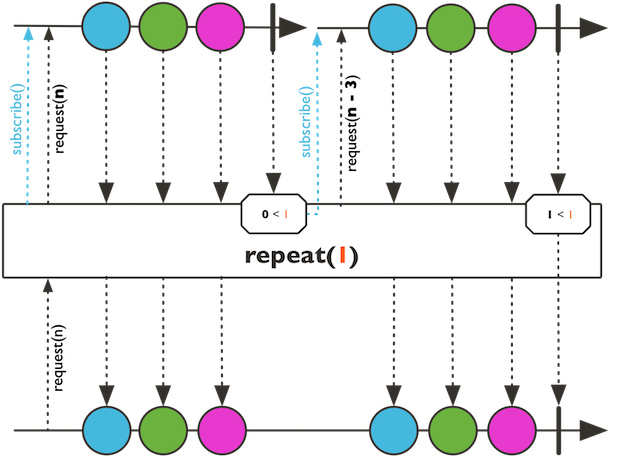

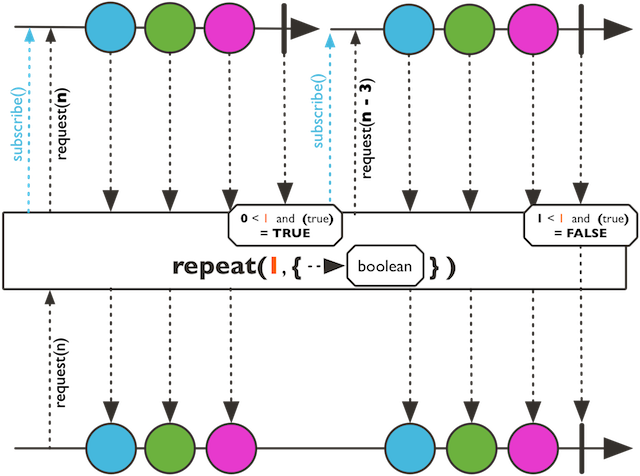

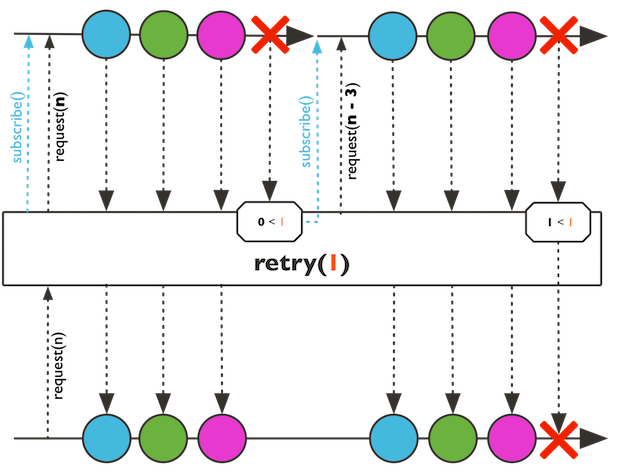

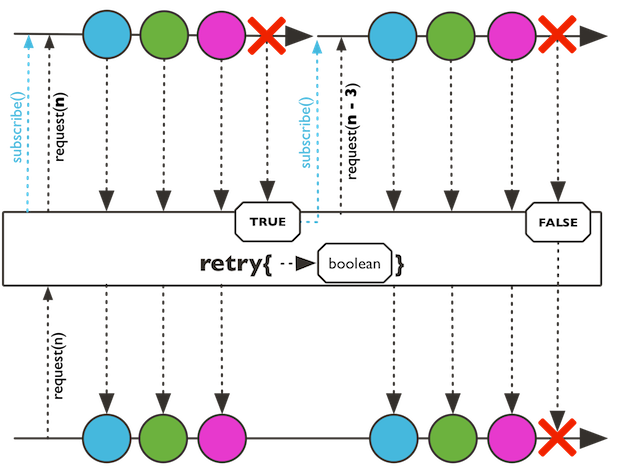

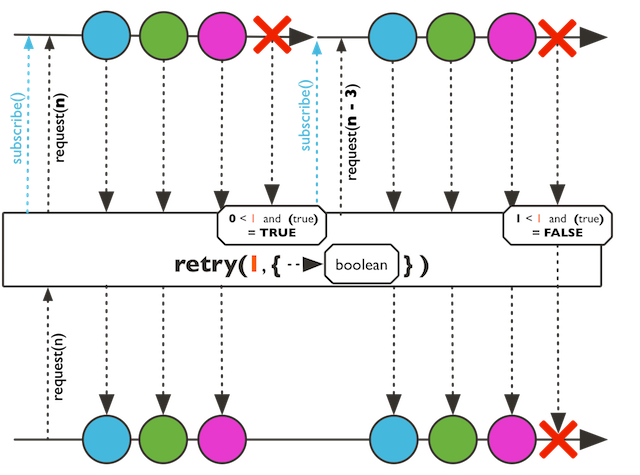

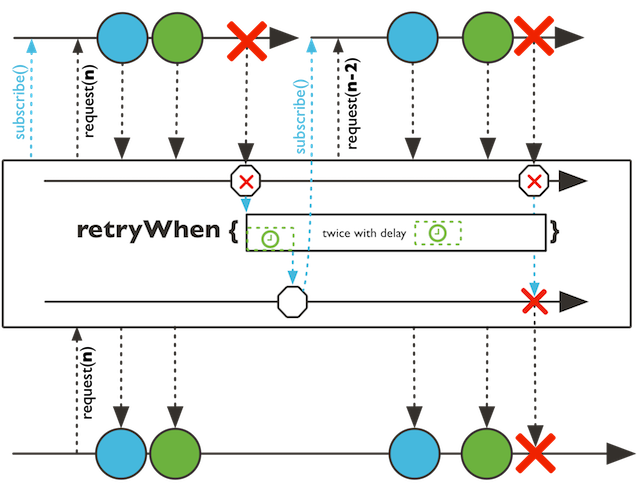

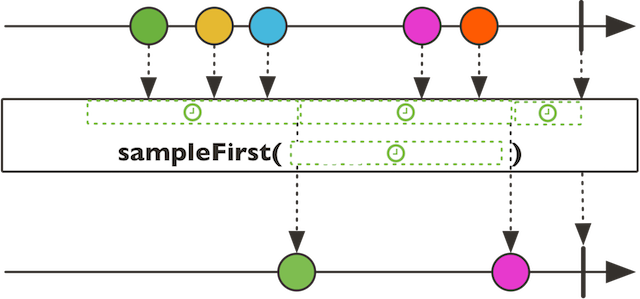

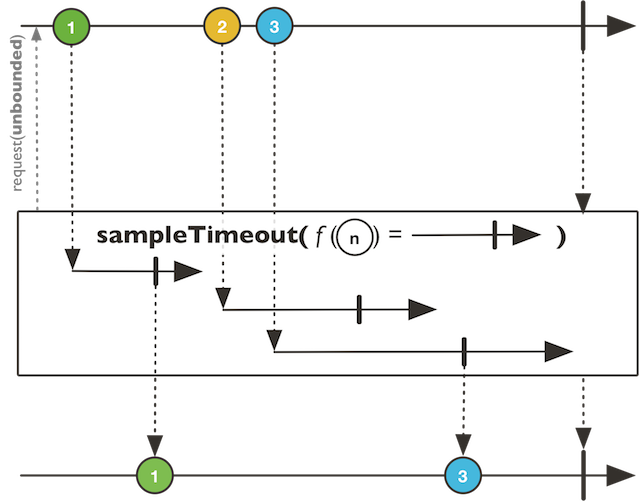

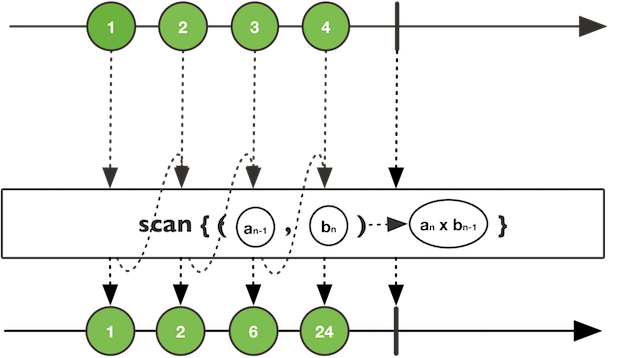

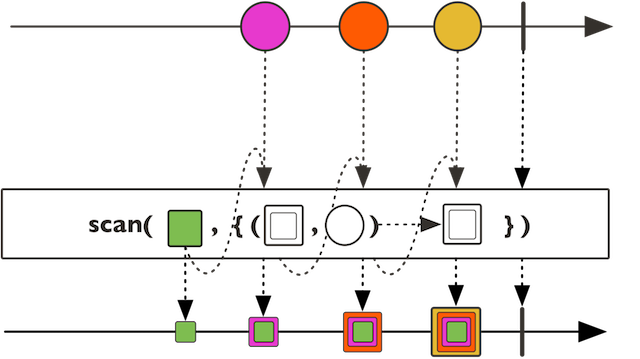

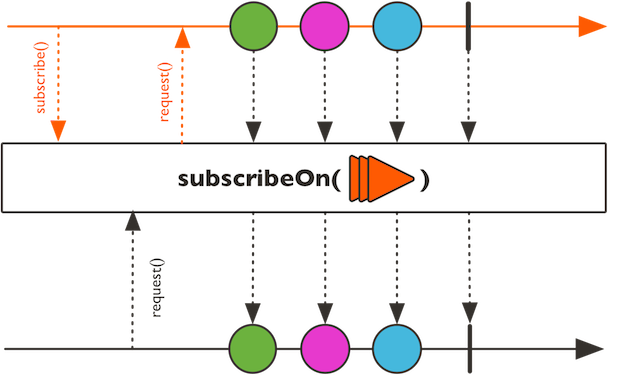

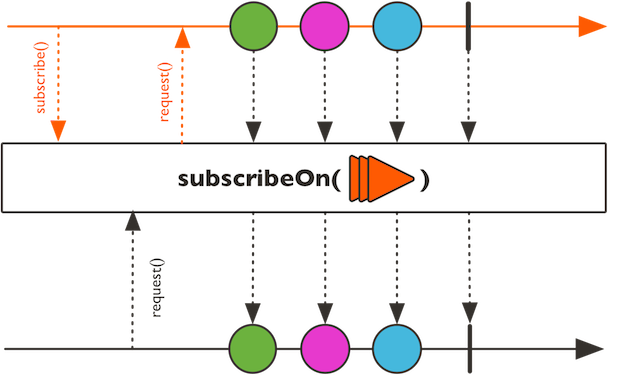

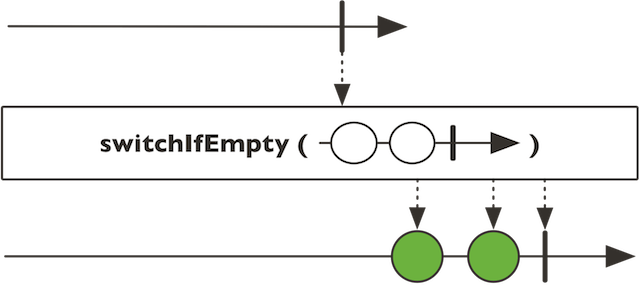

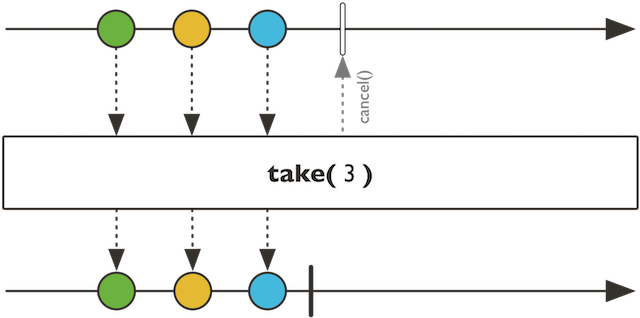

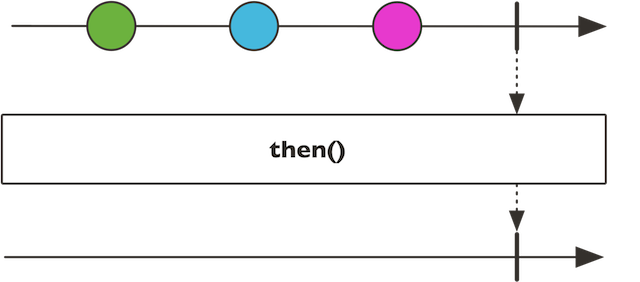

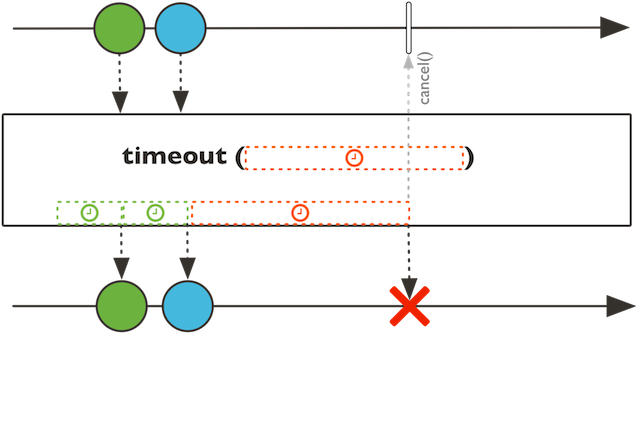

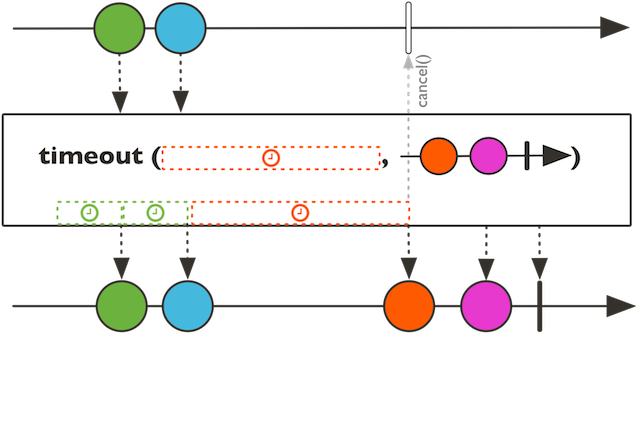

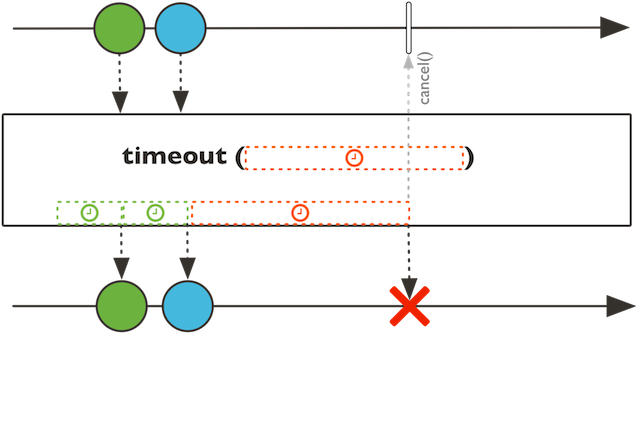

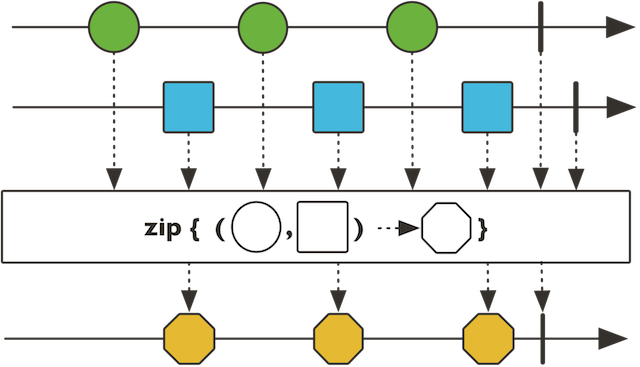

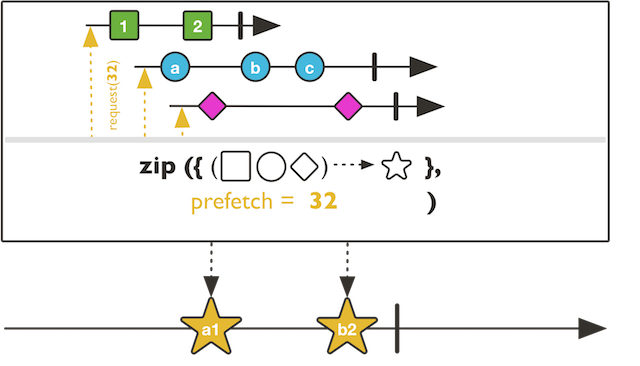

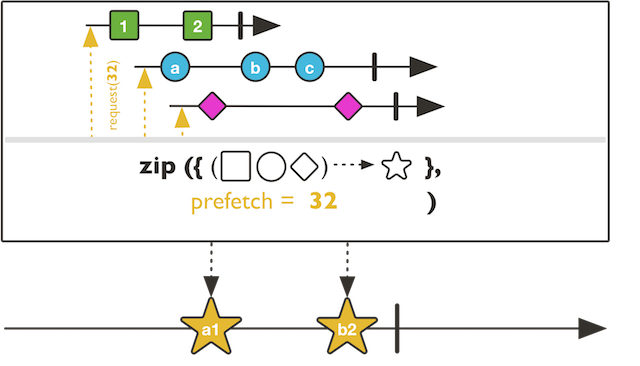

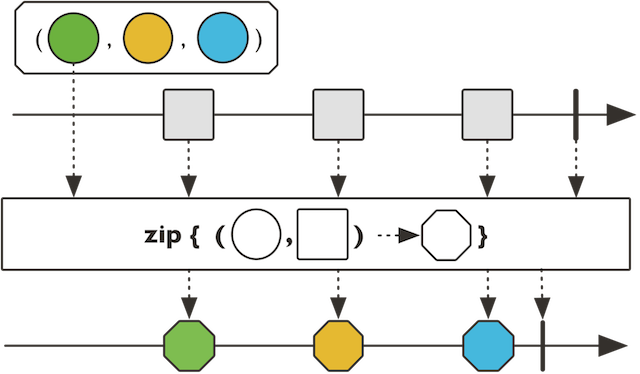

mergeDelayError